Curious about how AI transforms simple text into stunning images? This comprehensive guide dives into the fascinating world of AI image generation, breaking down complex concepts into clear, digestible insights.

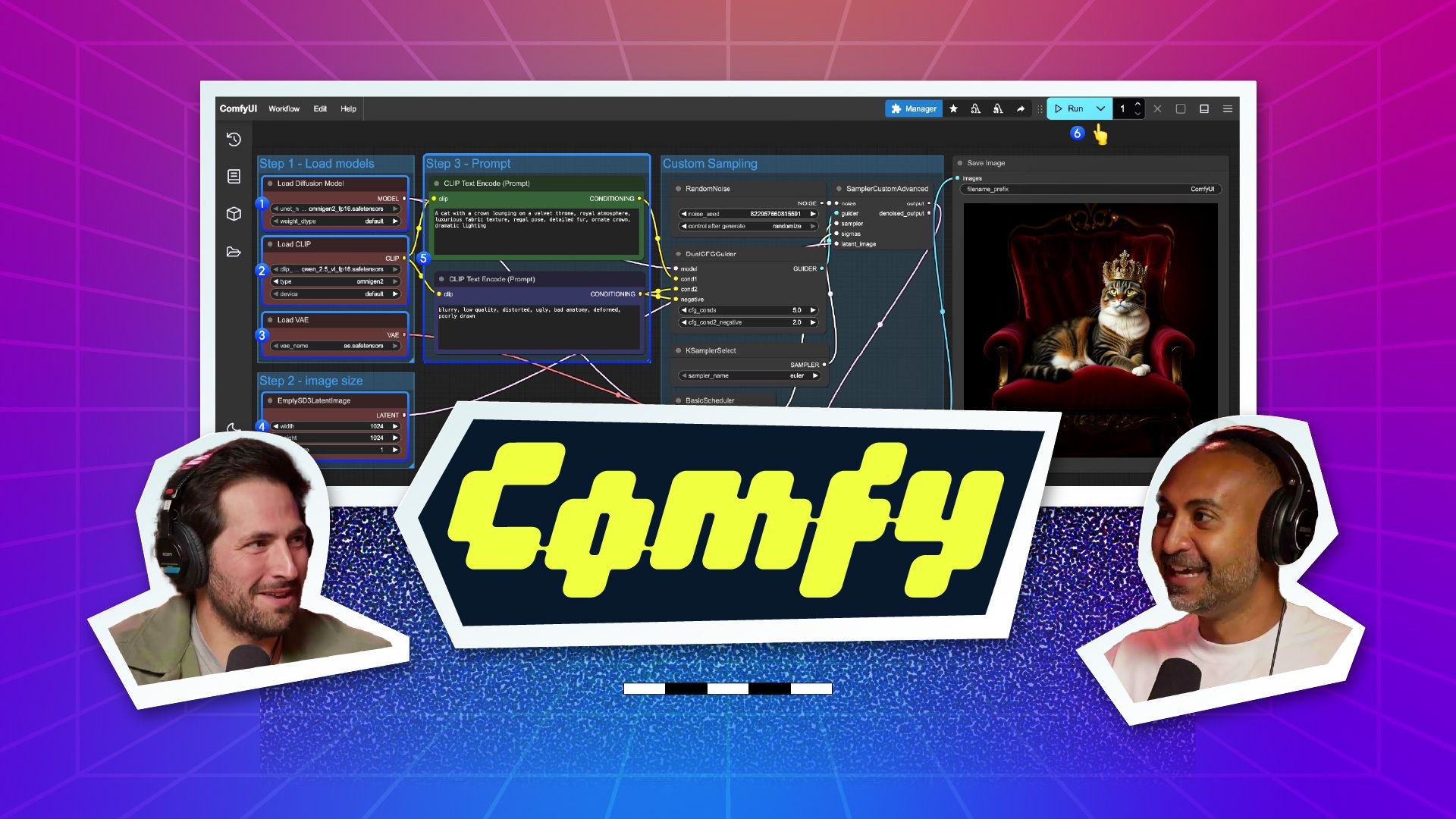

We explore the fundamentals of neural networks, latent space, and diffusion models, and then take a close look at ComfyUI — a powerful, open-source tool that offers creators unprecedented control over AI workflows.

Whether you're a beginner or an advanced user, learn how to harness ComfyUI for text-to-image generation, LoRA integration, image-to-image transformations, and even cloud API connections. Let’s unpack the magic behind the scenes and see how you can leverage these tools for your creative projects.

Understanding AI Image Generation and Neural Networks

At its core, AI image generation is powered by neural networks: mathematical models loosely inspired by the human brain. Unlike earlier AI methods, modern neural networks operate at an immense scale, with billions of learned parameters spread across nodes that each compute a simple function (a weighted sum such as w * x + b, followed by a nonlinearity), working together to recognize and generate complex concepts.
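
To make the "simple mathematical function" at each node concrete, here is a minimal sketch of a single node and a small layer built from such nodes. The names `neuron` and `layer`, the ReLU nonlinearity, and the hand-picked numbers are assumptions for illustration; a trained network learns its weights and biases from data.

```python
def neuron(inputs, weights, bias):
    """One node: a weighted sum of the inputs plus a bias, passed through ReLU."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, weighted_sum)  # ReLU: negative sums are clipped to zero


def layer(inputs, weight_rows, biases):
    """A layer is many nodes that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Toy values chosen by hand; a real model has billions of learned numbers.
hidden = layer([1.0, 2.0], [[0.5, -1.0], [1.0, 1.0]], [0.0, -0.5])
print(hidden)  # [0.0, 2.5]
```

Stacking many such layers, each feeding the next, is what lets a network move from raw numbers to concepts like "beach."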

Imagine the concept of a "beach." Regardless of language — Mandarin, Tagalog, or English — a neural network associates this word with countless images of beaches worldwide, from Malibu to the South of France. This association stems from training on vast datasets of labeled images, enabling the model to understand not just what a beach looks like but also what it represents conceptually.

Training: From Noise to Recognizable Images

The training process is a marvel of machine learning. Models learn to reconstruct images from noise by repeatedly adding and then removing noise in incremental steps. This “denoising” teaches the neural network to distinguish between noise and meaningful image data.

For example, a clean image is gradually corrupted with noise, and the model learns to reverse this effect step-by-step, eventually generating clear images from random noise. This training is computationally expensive, often involving millions of images passing through the network thousands of times, guided by a loss function that helps the model self-correct and improve.
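
The corruption step and the loss can be sketched in plain Python. This is a toy under stated assumptions: the "image" is a short list of pixel values, the linear noise schedule is invented for illustration (real diffusion models use carefully tuned schedules), and `add_noise` and `mse` are hypothetical helper names.

```python
import math
import random


def add_noise(pixels, t, total_steps, rng):
    """Blend a clean image with Gaussian noise; a larger t means more noise."""
    keep = 1.0 - t / total_steps  # share of the signal kept (toy linear schedule)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(keep) * p + math.sqrt(1.0 - keep) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise


def mse(predicted, target):
    """Mean squared error: the loss that tells the model how wrong it was."""
    return sum((p - q) ** 2 for p, q in zip(predicted, target)) / len(target)


rng = random.Random(0)
clean = [0.2, 0.8, 0.5, 0.1]
noisy, true_noise = add_noise(clean, t=5, total_steps=10, rng=rng)

# An untrained "model" that always predicts zero noise gets a nonzero loss;
# training adjusts the network until its noise predictions shrink this value.
untrained_guess = [0.0] * len(clean)
print(mse(untrained_guess, true_noise))
```

At `t=0` the image is untouched, and at `t=total_steps` it is pure noise; training samples many values of `t` in between.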

The Role of Latent Space

AI image generation happens in a mathematical realm called latent space, a multi-dimensional compressed representation of images and concepts. Unlike traditional image processing methods that work in pixel or frequency domains, latent space operates with vectors containing numerous coordinates that represent different features.

Think of latent space as a vast city with neighborhoods clustered by related concepts: one area might represent beaches and umbrellas (Santa Monica), another lamps and lighting fixtures (Echo Park). When you input a prompt like “a light on the sand at the beach,” the model navigates these neighborhoods to blend relevant features into a coherent image.
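
The neighborhood analogy can be illustrated with a toy example. The three-dimensional coordinates below are made up for illustration (real latent and embedding spaces have hundreds or thousands of learned dimensions), and `cosine_similarity` and `blend` are hypothetical helpers, not part of any model's API.

```python
import math


def cosine_similarity(a, b):
    """Close to 1.0 means same direction (same neighborhood); near 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def blend(a, b):
    """Average two concept vectors, a crude stand-in for combining prompt ideas."""
    return [(x + y) / 2 for x, y in zip(a, b)]


# Hypothetical coordinates: (sand and water, lighting, indoor objects)
concepts = {
    "beach": [0.9, 0.1, 0.0],
    "umbrella": [0.7, 0.2, 0.1],
    "lamp": [0.0, 0.9, 0.6],
}

print(cosine_similarity(concepts["beach"], concepts["umbrella"]))  # close neighbors
print(cosine_similarity(concepts["beach"], concepts["lamp"]))      # far apart

# "A light on the sand at the beach" lands between the two neighborhoods.
prompt_vector = blend(concepts["beach"], concepts["lamp"])
print(prompt_vector)
```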

Summarizing the Training Cycle

Start with a high-quality, well-labeled image.

Incrementally add noise to the image, creating many noisy versions.

The model learns to predict and remove the noise step-by-step, gradually picking up general patterns for reconstructing an image rather than storing copies of its training data.

Once trained, image generation begins with random noise (determined by the seed), and the model progressively denoises it guided by your text prompt.

This process is the magic behind AI image generators like Stable Diffusion and Midjourney.
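
The generation half of the cycle can be sketched as a loop that starts from seeded noise and removes a little of it at each step. This is a toy, not how Stable Diffusion is implemented: the stand-in "model" cheats by knowing the target it should reach, whereas a real network estimates the noise from its learned weights and the prompt. The function name `denoise_from_seed` is hypothetical.

```python
import random


def denoise_from_seed(seed, target, steps=20):
    """Return every intermediate sample, from pure noise to the final result."""
    rng = random.Random(seed)
    sample = [rng.gauss(0.0, 1.0) for _ in target]  # the seed fixes this noise
    trajectory = [sample]
    for step in range(steps):
        remaining = steps - step
        # Stand-in for the trained network (assumption): it "predicts" the
        # noise as the gap between the current sample and a known target.
        predicted_noise = [s - t for s, t in zip(sample, target)]
        # Remove an equal share of the predicted noise at every step.
        sample = [s - n / remaining for s, n in zip(sample, predicted_noise)]
        trajectory.append(sample)
    return trajectory


target = [0.2, 0.8, 0.5, 0.1]  # what the "prompt" is asking for in this toy
run_a = denoise_from_seed(42, target)
run_b = denoise_from_seed(42, target)
run_c = denoise_from_seed(7, target)

print(run_a == run_b)        # same seed and settings: identical path
print(run_a[0] != run_c[0])  # different seed: different starting noise
print([round(x, 3) for x in run_a[-1]])
```

In this toy every seed ends at the same target. In a real model the starting noise shapes the final image, which is why changing the seed gives a different picture for the same prompt.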

Getting Hands-On with ComfyUI

ComfyUI is a free, open-source, node-based AI image generation platform that runs on Windows, Mac, and Linux. Its visual workflow provides greater transparency and control compared to typical web-based tools. Initially, ComfyUI had to be set up from GitHub through the terminal, but it now also offers a user-friendly desktop installer.

If you've used visual node-based software like Nuke, Fusion, Houdini, or Unreal Engine Blueprints, ComfyUI’s interface will feel intuitive. Each node represents a modular operation, and you can build complex AI workflows by connecting them.

Core Components of a ComfyUI Text-to-Image Workflow

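Load Checkpoint: Loads the AI model (e.g., Stable Diffusion XL). Checkpoints usually ship as single .safetensors files, a format that stores only the model's weights and cannot execute code when loaded, or as Diffusers-format folders that split the model's components into separate files. Stable Diffusion XL is popular, and its weights are openly available.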

CLIP Text Encoders: Encode your positive and negative prompts into vectors that represent concepts in latent space. This encoding guides image generation.

Latent Image: An empty latent "canvas" at a specific resolution where the image is generated. Using the model’s native resolution is important for quality: commonly 512x512 for Stable Diffusion 1.5 and 1024x1024 for Stable Diffusion XL.

KSampler: The heart of image generation, this node progressively denoises the latent image based on your prompts and model.

Seed: A random number that initializes the noise pattern. Using the same seed plus identical settings theoretically produces the same image, useful for consistency.

Steps: The number of denoising iterations. Typically 20-30 steps balance quality and computational cost.

Classifier-Free Guidance (CFG): Controls how strongly the prompt steers the image. Higher CFG sticks more closely to your prompt (and can look harsh or oversaturated if pushed too far), while lower CFG lets the model generate more freely.

VAE Decode: Converts the latent image back to pixel space so it can be viewed and saved (for example, as a PNG).

Save Node: Automatically saves generated images locally, including batch processing capabilities.
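
The components above can also be written down as data. The sketch below builds the same graph as a Python dictionary in the style of ComfyUI's exported API-format JSON, where each node has a `class_type` and `inputs`, and a link is written as `[source_node_id, output_index]`. The node class names and input names reflect a stock ComfyUI install as best understood here and should be checked against your own version; the checkpoint filename, the sampler settings, and the helper name `build_text_to_image_workflow` are assumptions.

```python
import json


def build_text_to_image_workflow(positive, negative, seed,
                                 checkpoint="sd_xl_base_1.0.safetensors"):
    """Return a text-to-image graph; the checkpoint name is a placeholder
    that must match a file in your own models folder."""
    return {
        # Load Checkpoint outputs: 0 = model, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": checkpoint}},
        "2": {"class_type": "CLIPTextEncode",  # positive prompt
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",  # negative prompt
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "4": {"class_type": "EmptyLatentImage",  # 1024x1024 suits SDXL
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["2", 0],
                         "negative": ["3", 0], "latent_image": ["4", 0],
                         "seed": seed, "steps": 25, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
        "6": {"class_type": "VAEDecode",
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",
              "inputs": {"images": ["6", 0], "filename_prefix": "beach"}},
    }


workflow = build_text_to_image_workflow(
    "a light on the sand at the beach", "blurry, low quality", seed=42)
print(json.dumps(workflow, indent=2))
```

Reading the links from the KSampler outward mirrors the wires you would drag between nodes in the visual editor.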

Why Latent Space Matters

Latent space compresses image information into compact vectors. This is part of what makes these models practical: the sampler works on a small latent instead of millions of pixels, and a model trained on billions of images ships as a few gigabytes of weights rather than petabytes of pictures. In ComfyUI, the sampling itself happens in latent space; only the final VAE decode returns to pixels.
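
A quick back-of-the-envelope calculation shows how much smaller the latent is than the image. It assumes the VAE used by Stable Diffusion 1.5 and SDXL, which shrinks each side by a factor of 8 and stores 4 channels per latent position; other model families use different values, and the helper names are hypothetical.

```python
def latent_shape(width, height, downscale=8, channels=4):
    """Shape of the latent (channels, height, width) for a given pixel size."""
    return (channels, height // downscale, width // downscale)


def compression_ratio(width, height, downscale=8, channels=4):
    """How many RGB pixel values each latent value stands in for."""
    pixel_values = width * height * 3  # red, green, and blue per pixel
    c, h, w = latent_shape(width, height, downscale, channels)
    return pixel_values / (c * h * w)


print(latent_shape(1024, 1024))       # (4, 128, 128)
print(compression_ratio(1024, 1024))  # 48.0
```

Under these assumptions the sampler handles 48 times fewer numbers than it would in pixel space.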

Advanced Workflows: LoRAs and Image-to-Image

LoRA (Low-Rank Adaptation) Models

LoRAs are lightweight add-on models (often tens to a few hundred megabytes) trained on specific styles, faces, or characters that attach to much larger base models (from several gigabytes up to 20-30GB, depending on the model). Think of the base model as a massive cruise ship and the LoRA as a tugboat guiding it precisely where you want.

LoRAs don’t change the base model file; they add a small set of extra weights on top of it when loaded, yet they influence its output significantly. For example, you could train a LoRA on photos of a person captioned with the name "Joey," then include the trigger word “Joey” in a prompt such as “a man wearing a suit” to produce consistent character likenesses.
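
The "low-rank" in the name refers to how the adjustment is stored: as two thin matrices whose product is added to a base weight matrix, W' = W + scale * (up x down). Below is a minimal sketch with tiny hand-written matrices. The helper names and the numbers are illustrative assumptions, and real implementations typically derive `scale` from the LoRA's alpha and rank settings.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def apply_lora(base, down, up, scale=1.0):
    """Return base + scale * (up @ down) without modifying base."""
    delta = matmul(up, down)
    return [[w + scale * d for w, d in zip(base_row, delta_row)]
            for base_row, delta_row in zip(base, delta)]


def parameter_counts(rows, cols, rank):
    """Numbers stored by the full matrix versus by its low-rank adapter."""
    return rows * cols, rank * (rows + cols)


base = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for one base-model weight matrix
up = [[1.0], [2.0]]              # 2 x 1 (rank 1)
down = [[0.5, -0.5]]             # 1 x 2 (rank 1)

adapted = apply_lora(base, down, up)
print(adapted)                           # [[1.5, -0.5], [1.0, 0.0]]
print(base)                              # unchanged: [[1.0, 0.0], [0.0, 1.0]]
print(parameter_counts(4096, 4096, 16))  # (16777216, 131072)
```

For a 4096 x 4096 layer at rank 16, the adapter stores 128 times fewer numbers than the matrix it adjusts, which is why the tugboat can be so much smaller than the ship.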

Training LoRAs is accessible through hosted services like Replicate or Civitai, often costing just a few dollars, or through local tools such as FluxGym or training extensions for Automatic1111. While you can train LoRAs in ComfyUI, external tools tend to be easier.

Image-to-Image Workflow

Text-to-image generation is great for ideation, but professionals often want to modify existing images. Image-to-image workflows allow you to input a base image and guide its transformation with text prompts.

In ComfyUI, this involves loading the input image with a Load Image node, encoding it into latent space, and then using the KSampler with a denoise setting that balances preserving the original structure against applying creative changes. The setting runs from 0 to 1; for example, a denoise value of 0.87 would heavily modify the input while keeping some foundational elements intact.
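
As a sketch, the image-to-image graph differs from text-to-image in two places: the empty latent is replaced by a loaded image passed through a VAE encoder, and the KSampler's denoise input, a fraction between 0.0 and 1.0, is set below 1.0. The dictionary follows the style of ComfyUI's API-format JSON, with links written as `[source_node_id, output_index]`. Node class and input names should be verified against your install, and the filenames, sampler settings, and helper name are assumptions.

```python
def build_image_to_image_workflow(image_name, positive, negative, seed,
                                  denoise=0.87,
                                  checkpoint="sd_xl_base_1.0.safetensors"):
    """Return an image-to-image graph; filenames here are placeholders."""
    if not 0.0 <= denoise <= 1.0:
        raise ValueError("denoise is a fraction between 0.0 and 1.0")
    return {
        # Load Checkpoint outputs: 0 = model, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": checkpoint}},
        "2": {"class_type": "LoadImage",
              "inputs": {"image": image_name}},
        # Encode the pixels into latent space instead of starting empty.
        "3": {"class_type": "VAEEncode",
              "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "5": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["4", 0],
                         "negative": ["5", 0], "latent_image": ["3", 0],
                         "seed": seed, "steps": 25, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},  # lower keeps more of the input
        "7": {"class_type": "VAEDecode",
              "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage",
              "inputs": {"images": ["7", 0], "filename_prefix": "img2img"}},
    }


workflow = build_image_to_image_workflow(
    "blockout_render.png", "a man wearing a suit on a beach", "blurry", seed=7)
print(workflow["6"]["inputs"]["denoise"])  # 0.87
```

Lowering `denoise` toward 0.3 or 0.4 keeps the framing and composition of the input much closer to the original, which suits the refinement work described here.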

This workflow is ideal for refining framing, composition, or character placement, especially when combined with other tools like Unreal Engine or Blender for initial scene setup.

Cloud APIs and Hybrid Approaches

ComfyUI also supports API nodes that connect to popular cloud services like Runway, OpenAI, Google, and Stability AI. This hybrid approach enables users with limited hardware—such as MacBooks without Nvidia GPUs—to run complex workflows by leveraging cloud compute power.

Billing is straightforward: you connect your accounts, buy credits at face value, and the costs automatically deduct as you generate images. This integration allows batch generation and rapid iteration, while still saving outputs locally for easy access and management.

ComfyUI vs. Commercial Web Tools

With AI tools like ChatGPT, Runway References, and Flux Kontext becoming increasingly powerful and user-friendly, you might wonder: is ComfyUI still relevant?

The answer depends on your use case. For casual creators or social media content, web-based tools offer ease and speed. However, commercial creators who require high control, consistency, and customization often prefer ComfyUI for its flexibility and local processing capabilities.

LoRAs you build yourself allow direct influence over training data and captions, which is vital for brand work and character consistency. Image-to-image workflows with depth maps and edge detection provide granular control not yet matched by simpler platforms.

Moreover, ComfyUI users are typically 6 to 12 months ahead of commercial offerings in terms of cutting-edge features and control. Hybrid workflows combining ComfyUI with cloud services or web tools are becoming common, letting creators pick the best tool for each task.

Conclusion: Embracing the Future of AI Image Generation

AI image generation is evolving rapidly, and tools like ComfyUI empower creators to explore the technology at a deeper level. Whether you want to build custom LoRAs, experiment with image-to-image transformations, or integrate cloud APIs seamlessly, ComfyUI offers a robust platform for innovation.

As commercial tools catch up, the landscape will continue to shift. But for those seeking maximum creative control and flexibility, ComfyUI remains a versatile and indispensable tool in the AI creator’s toolkit.