Michael Keaton talking to an AI hologram of his late mother: that image is the hook. In this episode of Denoised, hosts Addy and Joey walk through three stories creatives should be tracking right now — Google’s AI-backed short film Sweetwater, a startup planning to produce thousands of AI-generated podcasts every week, and Meta’s new Ray-Ban display glasses.

Each story touches a different pressure point for filmmakers and content creators: creative adoption of AI, the economics of synthetic content at scale, and how ready wearable AR hardware is for real-world production work.

Why Sweetwater matters: AI as character, not just VFX

Google’s short film Sweetwater, directed by Michael Keaton and written by his son Sean Douglas, is more than another studio demo. What caught Addy and Joey’s attention is how the film integrates AI into the story world: it openly features an AI-powered holographic avatar of a deceased character rather than hiding AI behind subtle VFX tricks.

For filmmakers this is a useful signal: adoption often starts with a creative, risk-managed use case. Instead of replacing actors or hiding synthetic models in the background, Sweetwater treats AI as an on-screen element, a narrative device. That approach sidesteps some ethical questions and uncanny-valley problems, and it positions AI tools as one more storytelling technique.

Details about how Sweetwater was produced are thin. Google published a blog post covering the premiere and festival plans but no production breakdown. That lack of transparency raises practical questions for producers: which tools were used, how much of the footage is AI-generated, and how were production costs handled with advanced tooling like Google’s internal models or LED volume stages?

From a production perspective, new tech usually enters via shorts and festival runs for a reason. Shorts provide a lower-cost, high-visibility sandbox: proven actors and established festival circuits lend legitimacy while limiting financial risk. The hosts debated what a realistic short-film budget looks like when AI tools are involved, and their estimates ranged widely. The takeaway: expect added line items for software, compute, and iterative passes when experimenting with AI on a narrative shoot.
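To make those extra line items concrete, here is a minimal Python sketch of how iteration drives the AI-specific part of a short-film budget. Every figure and field name is a hypothetical placeholder, not a number from the episode; the only point is that software tends to be a roughly fixed cost while compute grows with each generate, evaluate, and redo pass.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AIShortBudget:
    """Hypothetical budget model for an AI-assisted short; all numbers are placeholders."""
    conventional: float       # cast, crew, locations, standard post
    software: float           # flat tool/model subscriptions for the production
    compute_per_pass: float   # cloud GPU or render cost for one full generation pass
    passes: int               # generate/evaluate/redo cycles you expect to run

    def ai_line_items(self) -> float:
        # Software is roughly fixed; compute grows with every extra pass.
        return self.software + self.compute_per_pass * self.passes

    def total(self) -> float:
        return self.conventional + self.ai_line_items()


plan = AIShortBudget(conventional=150_000, software=2_000, compute_per_pass=5_000, passes=4)
print(plan.ai_line_items(), plan.total())  # 22000 172000

# A model update mid-production forces a redo: double the passes.
rework = replace(plan, passes=8)
print(rework.ai_line_items(), rework.total())  # 42000 192000
```

Modeling passes as an explicit parameter makes the rework risk visible in the budget instead of hiding it inside a single "AI" line.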

Practical implications for filmmakers

Start small. Use AI where it enhances story beats (AI characters, interactive holograms, localized dialogue) rather than trying to render entire scenes from scratch.

Document your pipeline. If you use model-generated assets, note versions, prompts, and datasets so you can reproduce and audit creative decisions later.

Budget for iteration. AI tools evolve quickly — expect multiple passes and potential rework as models improve during production.
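A provenance log does not need special tooling. The sketch below, assuming nothing beyond Python’s standard library, records one model-generated asset as a JSON line; the field names, the `append_to_log` helper, the model name, and the prompt are all hypothetical placeholders for whatever your pipeline actually uses.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AssetProvenance:
    """Hypothetical provenance record for one model-generated asset."""
    asset_id: str
    model_name: str
    model_version: str
    prompt: str
    datasets: list[str] = field(default_factory=list)  # reference/training data, if known
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self))


def append_to_log(record: AssetProvenance, path: str) -> None:
    # One JSON object per line (JSON Lines) keeps the log append-only and diffable.
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(record.to_json() + "\n")


record = AssetProvenance(
    asset_id="sc12_hologram_v03",        # placeholder naming scheme
    model_name="example-video-model",     # not a real product
    model_version="2025-09-01",
    prompt="wide shot, holographic figure, warm practical light",
    datasets=["in-house reference plates"],
)
print(record.to_json())
```

Appending one record per generated asset to a file kept with the project gives editorial and legal teams a reproducible trail without changing how artists work.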

OpenAI, Critterz, and the shifting cost of AI production

Between the Sweetwater conversation and other projects, Addy and Joey flagged OpenAI’s involvement in Critterz, an AI-assisted animated feature with a reported budget of around $30 million. That number prompted a comparison: $30M is far below a major studio VFX tentpole, but it’s still substantial. The hosts note that early AI-assisted projects may actually cost more, not less, because teams generate, evaluate, and often redo sequences as tools and model capabilities change.

For production planners, this means the idea that AI immediately makes content cheaper is incomplete. Savings can materialize in specific domains (previs, concept art, or automated editing), but you’ll also face costs for compute, specialized personnel, and QA. OpenAI and other nontraditional media companies are still building production workflows and infrastructure; expect overheads while those systems mature.

The intelligence plateau, GPUs, and the future of model architecture

A longer technical segment in the episode centered on model scaling and infrastructure. Addy and Joey referenced recent commentary suggesting large transformer models may be approaching diminishing returns when simply scaled bigger. The argument: training ever-larger models is costly and may yield smaller gains over time, prompting research into alternative architectures such as distributed “hierarchies” of specialized smaller models.

Why this matters to the industry: NVIDIA and other hardware suppliers have wagered heavily on continued demand for massive GPU farms and data centers. If the research community pivots away from enormous, monolithic models to more efficient architectures or distillation methods, the compute curve could change. For studios, VFX houses, and AI startups, that affects the economics of on-demand rendering, cloud-based generation, and long-term operational costs.

Practical takeaway: build flexibility into AI workflows. Use modular tooling and abstraction layers that let you switch model vendors or deploy distilled models on cheaper hardware when available.
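One common way to get that flexibility is to write pipeline code against a small interface rather than a vendor SDK. The Python sketch below uses `typing.Protocol`; the `ImageGenerator` interface and both backend classes are hypothetical stubs that return placeholder bytes instead of calling any real API.

```python
from typing import Protocol


class ImageGenerator(Protocol):
    """The only surface the pipeline depends on (hypothetical interface)."""

    def generate(self, prompt: str) -> bytes: ...


class HostedVendorBackend:
    """Stub for a cloud API; a real version would call the vendor's SDK here."""

    def __init__(self, api_key: str) -> None:
        self.api_key = api_key

    def generate(self, prompt: str) -> bytes:
        return f"hosted:{prompt}".encode()  # placeholder output


class LocalDistilledBackend:
    """Stub for a smaller, distilled model on local or cheaper hardware."""

    def generate(self, prompt: str) -> bytes:
        return f"local:{prompt}".encode()  # placeholder output


def make_backend(name: str) -> ImageGenerator:
    # Backend choice lives in config, so switching vendors is a one-line change.
    if name == "hosted":
        return HostedVendorBackend(api_key="YOUR_KEY")
    if name == "local":
        return LocalDistilledBackend()
    raise ValueError(f"unknown backend: {name}")


def render_concept_frame(gen: ImageGenerator, prompt: str) -> bytes:
    # Pipeline code sees only the Protocol, never a vendor-specific class.
    return gen.generate(prompt)


frame = render_concept_frame(make_backend("local"), "storyboard panel 4")
print(frame)  # b'local:storyboard panel 4'
```

Moving to a new vendor or a distilled model then means adding one class and changing one config value; the rest of the pipeline stays untouched.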

AI beyond media: where compute is actually going

Image and video generation get a lot of headlines, but the hosts highlighted that many enterprise AI workloads — finance, legal, real estate, insurance — are likely to dominate data center utilization. Each industry has repetitive, high-volume API call patterns that scale quickly and can be more cost-stable than one-off creative generation bursts. For production-minded teams, that means cloud capacity and compute pricing will be influenced by enterprise demand as much as creative tools.

Inception Point AI: thousands of podcasts a week, a volume play

Next up: Inception Point AI, a startup run by former Wondery execs, says it already produces thousands of AI-generated podcast episodes across thousands of shows, at a cost of about $1 per episode. A Hollywood Reporter profile of the company sparked an animated conversation on several fronts.

On the surface, this is an advertising and SEO play: create huge quantity, insert dynamic ads, monetize at scale. Economically, if an episode costs about a dollar to produce, it only needs a few dozen listeners for its ad revenue to break even. But for creatives who have built audiences the old-fashioned way, filling feeds with low-effort AI-generated content raises concerns: a higher noise floor, reduced discoverability, and erosion of the long-term value of brand-led storytelling.
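The break-even claim is simple arithmetic: listeners needed = production cost ÷ ad revenue per listener. The sketch below assumes a hypothetical CPM (dollars per 1,000 ad impressions) and that every listener hears every ad slot once; neither the CPM values nor the slot counts come from the Inception Point AI profile.

```python
import math


def break_even_listeners(production_cost: float, cpm: float, ads_per_episode: int) -> int:
    """Listeners an episode needs for ad revenue to cover its production cost.

    cpm: ad rate in dollars per 1,000 impressions (a hypothetical input).
    Assumes each listener hears every ad slot exactly once.
    """
    revenue_per_listener = cpm / 1000 * ads_per_episode
    # Round off floating-point noise first so a value like 20.0000000001 isn't pushed up to 21.
    return math.ceil(round(production_cost / revenue_per_listener, 6))


print(break_even_listeners(1.00, cpm=25.0, ads_per_episode=2))  # 20
print(break_even_listeners(1.00, cpm=15.0, ads_per_episode=3))  # 23
```

At a $25 CPM with two ad slots, each listener is worth 5 cents, so a $1 episode breaks even at 20 listeners; at $15 with three slots it takes 23. Either way, "a few dozen" holds under these assumptions.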

There are also qualitative concerns about synthetic training data. The hosts discussed the so-called “dead internet” idea: if vast swathes of web content become synthetic, future models will train on synthetic outputs and risk a downward spiral in quality. Practically, teams working with datasets should label synthetic versus human-origin content and exercise caution when ingesting large, unvetted sources.
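Labeling can be as simple as an origin field on every sample, with unvetted material treated as unknown and excluded by default. Here is a minimal Python sketch; the `Origin` categories and the selection policy in `select_for_training` are assumptions for illustration, not an industry standard.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    HUMAN = "human"
    SYNTHETIC = "synthetic"
    UNKNOWN = "unknown"  # default for unvetted sources


@dataclass(frozen=True)
class Sample:
    text: str
    source: str
    origin: Origin = Origin.UNKNOWN


def select_for_training(samples: list[Sample], allow_synthetic: bool = False) -> list[Sample]:
    """Keep human-origin samples; include labeled synthetic ones only if asked.

    Unknown-origin samples are always excluded until someone vets and relabels them.
    """
    allowed = {Origin.HUMAN}
    if allow_synthetic:
        allowed.add(Origin.SYNTHETIC)
    return [s for s in samples if s.origin in allowed]


corpus = [
    Sample("Host interview transcript", "in-house recording", Origin.HUMAN),
    Sample("Auto-generated episode summary", "summary model", Origin.SYNTHETIC),
    Sample("Scraped show notes", "web crawl"),  # origin unknown
]
print(len(select_for_training(corpus)))                        # 1
print(len(select_for_training(corpus, allow_synthetic=True)))  # 2
```

Defaulting to `UNKNOWN` makes exclusion the safe path: nothing reaches a training set until its origin has been checked.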

What this means for podcast producers and narrative teams

Protect your IP and brand. Listeners still value authenticity and hosts who build trust over time.

Differentiate on craft. Music, sound design, host chemistry, and editorial rigor remain differentiation points that algorithms struggle to replicate at scale.

Consider hybrid models. AI can speed research, show prep, and rough edits — but human oversight keeps quality high.

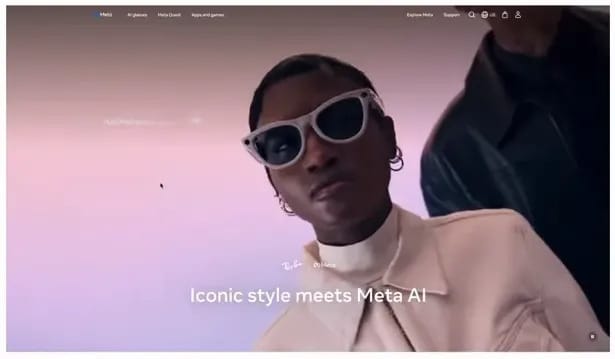

Meta Ray-Ban Display Glasses: a useful first step for AR on set

The episode closed on hardware: Meta’s new Ray-Ban Display glasses. These are Ray-Bans with a tiny built-in projector whose image only the wearer sees, reportedly displayed to the right eye at roughly 600×600 pixels. The frames are light but chunkier than regular sunglasses, and the device targets a daily-assistant use case: notifications, maps, quick translations, and audio through speakers built into the frame.

For filmmakers and on-set crews, the hosts identified a few interesting use cases:

Virtual location scouting and director’s viewfinder: imagine a lightweight POV tool for previsualization when you don’t want to carry a full monitor package.

Hands-free information for above-the-line personnel: call sheets, schedules, and notifications without pulling out phones in the middle of a take.

Earpiece replacement: bone-conduction or open-ear audio could reduce the need for separate earpieces in some scenarios.

Limitations are clear: the display covers only one eye and is too low-resolution for fine visual tasks like pulling focus, and current versions lack spatial mapping and robust SLAM (simultaneous localization and mapping). That means AR overlays “stuck” to the world aren’t reliable yet. Still, this iteration feels closer to delivering the Google Glass vision in a practical package.

Where Apple fits in

Both hosts noted Apple’s advantage: it controls the hardware, the wearables, and a powerful handset that can supply compute and ecosystem integration. If Apple moves aggressively into wearable AR, its vertical stack and accessory integration (watch, phone, glasses-style headset) would make it a serious competitor. Still, Meta’s product shows the market is moving toward functional, daily-wear AR devices now, not years from now.

Three practical takeaways for filmmakers and media pros

Treat AI like a new tool in the kit — integrate it where it enhances story or efficiency, not as an automatic cost cutter. Test on shorts and proof-of-concepts before scaling to features.

Audit your data and pipelines — know what models, datasets, and synthetic inputs you’re using. Label synthetic content and keep provenance metadata for creative and legal reasons.

Design for change — build modular pipelines that can swap models or move compute between cloud and on-prem as economics and architectures evolve.

These stories are snapshots of a transition period. Sweetwater suggests legitimate creative uses for on-screen AI characters. Inception Point AI highlights the economic pressure of synthetic content at scale. Meta’s Ray-Bans show hardware catching up to practical use cases. For producers, VFX supervisors, and storytellers, the common thread is pragmatic experimentation: try the tools, measure costs and quality, and design systems that keep human craft at the center.