Welcome to VP Land! The AI creative stack just got a major upgrade, with significant releases from Google, Meta, and WorldLabs.

In today's edition:

Nano Banana Pro

Meta's SAM 3D converts photos to 3D

WorldLabs Marble builds interactive 3D worlds

AI at AFM

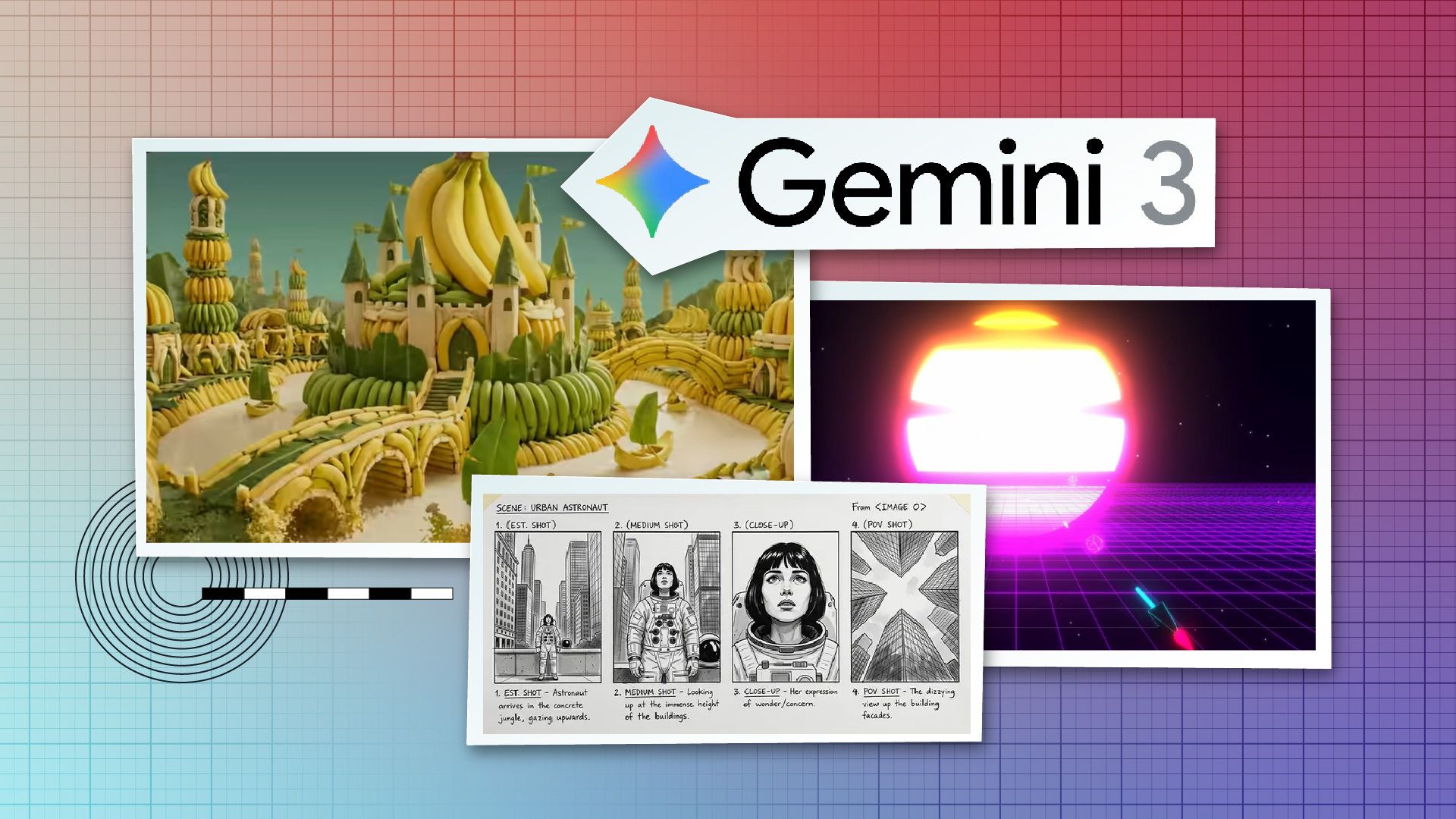

Nano Banana Pro Brings 4K & Real-World Knowledge

Google launched Nano Banana Pro, an upgrade to its popular image model with major improvements in text rendering, composition control, and studio-quality editing.

What's improved from the original Nano Banana:

Better text rendering - Nano Banana Pro can generate images with "correctly rendered and legible text directly in the image, whether you're looking for a short tagline, or a long paragraph." The model handles multilingual text generation and translation, making it useful for storyboards, mockups, and international campaigns.

Advanced composition control - Supports blending up to 14 images while maintaining consistency and resemblance of up to 5 people. The model can turn sketches into photorealistic products, apply brand styles across mockups, and create complex lifestyle scenes from multiple input elements.

Studio-quality editing controls - Includes localized editing with the ability to adjust camera angles, change focus, apply color grading, and transform lighting (day to night, bokeh effects). Outputs at 2K and 4K resolution with multiple aspect ratios.

Real-world knowledge integration - Connects to Google Search to generate contextually accurate infographics, educational explainers, recipe visualizations, and real-time information like weather or sports data.

Availability: Free-tier users get limited quotas before reverting to the original Nano Banana. Google AI Plus, Pro, and Ultra subscribers get higher limits. The model is also available in Google Ads, Workspace (Slides, Vids), NotebookLM, and, for Ultra subscribers, Flow. Developers can access it through the Gemini API, Google AI Studio, and Vertex AI.

Freepik is running unlimited Nano Banana Pro generations for a week. The model is also available as a node in ComfyUI.

All generated images include SynthID, Google's imperceptible digital watermark for content verification. Free and Pro tier users see a visible Gemini sparkle watermark, but it's removed for Ultra subscribers and Google AI Studio users.

Pricing: The original Nano Banana cost $0.039 per image. Nano Banana Pro is priced at $0.30 per image at 4K resolution, roughly 7.7x the per-image cost (0.30 / 0.039) in exchange for higher-resolution output.
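If you're budgeting a batch of generations, the price gap is easy to sanity-check. Here's a minimal Python sketch that uses only the two per-image prices quoted above; the function names and the 200-image batch size are hypothetical, chosen for illustration, and nothing here calls a Google API.

```python
# Per-image prices in USD as quoted in this newsletter (not fetched from any API).
NANO_BANANA_PRICE = 0.039
NANO_BANANA_PRO_4K_PRICE = 0.30


def cost_ratio(pro_price: float, base_price: float) -> float:
    """Return how many times more one Pro image costs than one original image."""
    return pro_price / base_price


def batch_cost(price_per_image: float, image_count: int) -> float:
    """Return the total cost of image_count images at a flat per-image price."""
    return price_per_image * image_count


if __name__ == "__main__":
    ratio = cost_ratio(NANO_BANANA_PRO_4K_PRICE, NANO_BANANA_PRICE)
    print(f"Pro at 4K costs about {ratio:.1f}x the original per image")

    frames = 200  # hypothetical storyboard batch size
    print(f"Original: ${batch_cost(NANO_BANANA_PRICE, frames):.2f}")
    print(f"Pro 4K:   ${batch_cost(NANO_BANANA_PRO_4K_PRICE, frames):.2f}")
```

Swap in your own batch size to see where the 4K tier fits your budget. A flat per-image price is an assumption of this sketch, so check the current pricing page before committing to a number.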

Google also released Gemini 3, the underlying foundation model powering Nano Banana Pro's enhanced reasoning, world knowledge, and multilingual capabilities. The new model brings improvements in advanced reasoning and real-time information grounding across Google's AI products.

SPONSOR MESSAGE

One-Click Video Blog Generation Tool

VideoToBlog.ai uses advanced AI to instantly transform your video content into SEO-optimized blog posts, complete with automated visuals and multi-language support. The platform handles everything from transcription to publishing, letting creators scale their written content without the usual time investment.

The platform automatically converts any video or audio file into structured, readable blog posts using speech recognition and natural language processing.

You can customize the AI output with specific prompts to match your brand voice, tone, and style preferences for consistent messaging.

Visual content gets handled automatically with screenshot extraction from key video moments, plus options for stock images and AI-generated graphics.

YouTube integration detects new uploads and converts them into draft or published blog posts completely hands-off.

Multi-language support lets you repurpose content for global audiences without translation overhead, while built-in SEO optimization boosts search visibility.

For video creators drowning in content repurposing work, VideoToBlog.ai eliminates the hours typically spent on manual transcription, editing, and formatting. Starting at $9/month, it transforms your existing video library into a search-friendly content engine that works while you focus on creating. Try it now!

Meta's SAM 3D Converts Photos to Editable 3D Models

Meta launched SAM 3D, a suite of AI models that converts 2D images into detailed 3D reconstructions—objects, scenes, and human bodies from single photos.

Key Details:

From photos to explorable 3D - Upload a single image, select objects or people, and generate posed 3D models you can manipulate or view from different angles. Meta's calling this "grounded 3D reconstruction" for physical-world scenarios, not just synthetic or staged studio shots.

5:1 win rate vs. competitors - In head-to-head human preference tests, SAM 3D Objects beat existing methods by at least a 5:1 margin. The system reconstructs full textured meshes in seconds, fast enough for real-time robotics or VFX workflows.

Segment Anything Playground live - Public demo environment where anyone can upload images and try the models. Upload your own photos, reconstruct humans or objects, export the results.

Training data advantage - SAM 3D Objects was trained on almost 1 million physical-world images with ~3.14 million model-in-the-loop meshes. SAM 3D Body used approximately 8 million images, including rare poses and occlusions. This is vastly more diverse than the isolated synthetic 3D assets most models train on.

Already in production - Facebook Marketplace's new "View in Room" feature uses SAM 3D to let buyers visualize furniture in their homes before purchase.

The trade-offs: Resolution is moderate—complex objects like full human reconstructions can lose detail. The model predicts objects individually, so it doesn't reason about physical interactions between multiple objects in a scene. Hand pose estimation works but doesn't beat specialized hand-only models.

For VFX, virtual production, or previsualization teams, the value is in speed and accessibility. This isn't photogrammetry-level fidelity, but it's a practical path from reference photos to manipulable 3D assets without scanning rigs or modeling labor. Meta is sharing the code, benchmarks, and its MHR (Momentum Human Rig) format, which should accelerate research and tooling around physical-world 3D capture.

Silicon Valley's AI Fantasy vs. Hollywood's Reality at AFM

The AI discussions at AFM made one thing clear: Silicon Valley and Hollywood aren't even asking the same questions, with tech companies pitching automation fantasies while producers tallied costs, copyright risks, and disappearing career ladders.

Here's a recap of what we heard and saw at the various AI panels:

"Hollywood x AI: Why Business Models Matter More Than Technology" - The discussion centered on whether AI tools can fit into existing production economics, not on technical capabilities. Participants emphasized that impressive features mean nothing without workable revenue models and cost structures that make sense for productions.

"How Platform Builders See AI Adoption in Film" - Technology companies presented automation visions that, panelists noted, often miss production realities. The "AI James Bond" concept highlighted the disconnect between Silicon Valley's expectations and how filmmaking actually works on set.

"What Producers and Execs See with AI" - Executives focused on three areas: potential 10x cost reductions in specific production tasks, unresolved copyright questions around training data and content ownership, and the elimination of entry-level positions that have traditionally served as pathways into the industry.

We published full recaps of all three panels, breaking down perspectives from platform developers, studio executives, and working producers on where AI actually fits in production workflows. Read the complete coverage: Platform Builders, Hollywood x AI, and Producers & Execs.

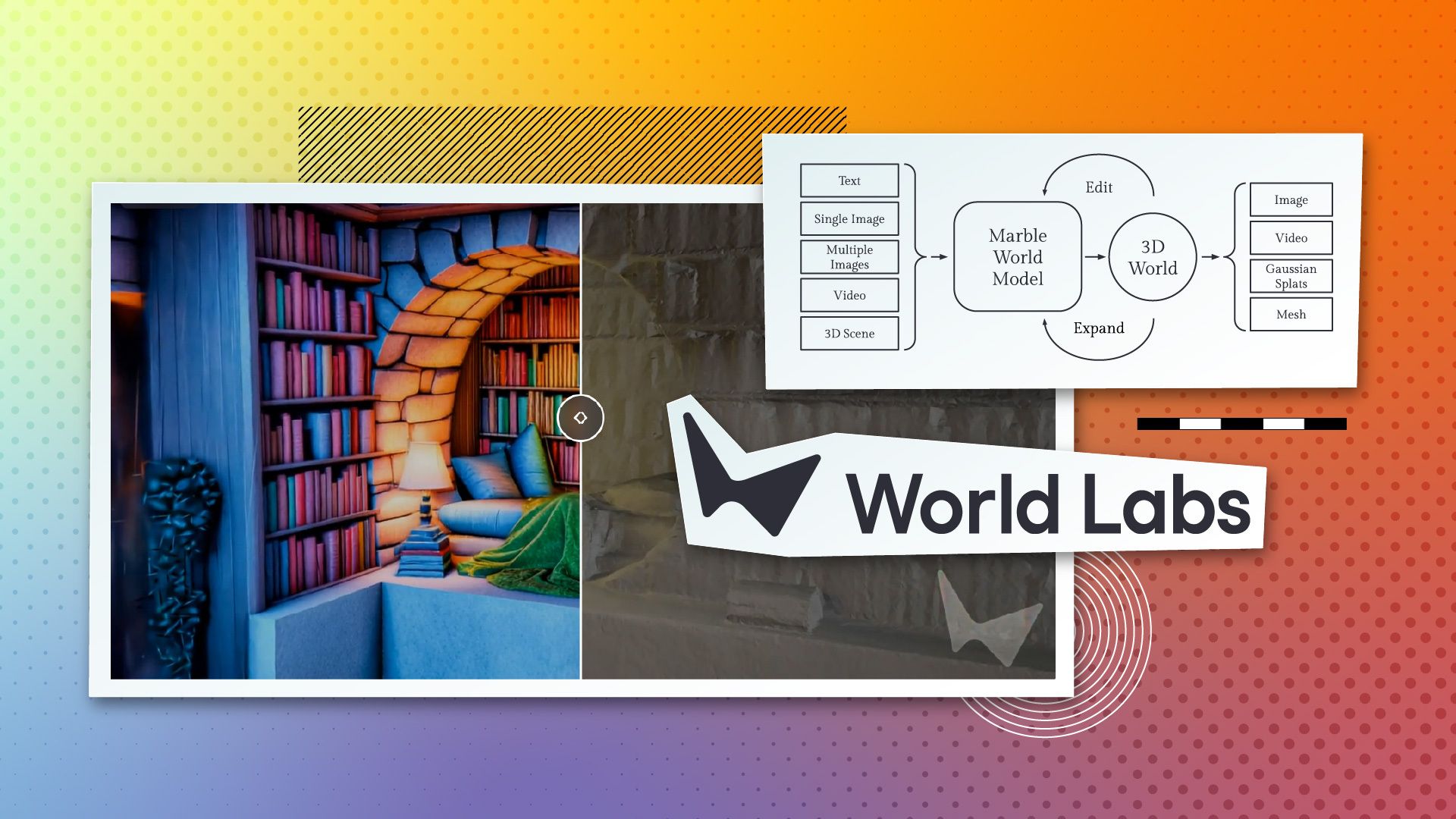

WorldLabs Launches Marble 3D World Generator

WorldLabs launched Marble, a multimodal generative world model that creates interactive 3D environments from text, images, video, or custom 3D layouts. After two months of private beta, the platform is now publicly available, offering creators a toolset for building, editing, and exporting 3D worlds for VFX, previsualization, game design, and simulation workflows.

Marble's core capability is generating full 3D worlds from multiple input types—text prompts, single or multiple images, video, or coarse 3D layouts—then letting you iteratively edit, expand, and export them in production-ready formats.

What you can do:

Generate from multiple inputs - Create 3D worlds from text, single images, multi-image sets, video clips, or basic 3D layouts. Multi-image prompting lets you control how generated worlds look from different angles, or combine photos of real locations into explorable 3D spaces.

Edit interactively - Remove objects, swap elements, change visual style, or restructure entire sections of generated worlds using AI-native editing tools. Edits can be small (touch up a corner) or drastic (turn a dining hall into a theater with seating).

Chisel mode for 3D sculpting - An experimental tool that separates structure from style. Lay out coarse 3D geometry using boxes, planes, or imported assets, then apply text prompts to generate detailed worlds that match your spatial layout while following the described aesthetic.

Export for production - Output as Gaussian splats (highest fidelity), triangle meshes (collider or high-quality), or pixel-accurate videos. Enhanced video export can clean artifacts and add dynamic motion like smoke, flames, or flowing water while maintaining camera control.

Expand and compose - Select regions of generated worlds to expand for more detail or scale, or compose multiple worlds into larger environments using Marble's composer mode.

WorldLabs also launched Marble Labs, a community hub with case studies, tutorials, and workflows covering applications in filmmaking, interactive content, robotics simulation, and design. The platform is live now at marble.worldlabs.ai.

For previsualization and virtual production teams, Marble's export options address the typical "last mile" problem with generative 3D: getting AI-generated content into actual pipelines. Gaussian splats work with WorldLabs' open-source Spark renderer, which integrates with THREE.js, while mesh exports fit standard VFX and game engine workflows. The ability to shoot precise camera moves through generated environments and export them as video could compress concept development timelines significantly.

The open question is how well these outputs hold up under production scrutiny—detail consistency, lighting control, and whether the editing tools provide enough precision for professional-grade work. WorldLabs positions this as a step toward "spatial intelligence" with future plans for interactivity that would let humans and agents interact with generated worlds in real time.

We put Blackmagic's camera-to-cloud workflow and Eddie AI to the test in the middle of the desert.

Stories, projects, and links that caught our attention from around the web:

🎬 Cosm and Warner Bros. are bringing Harry Potter to their 87-foot LED domes with Butterbeer and hidden easter eggs, tickets early 2026

🚀 AI startup Project Prometheus lands $6.2B with Jeff Bezos as co-CEO, targeting manufacturing in computing and aerospace

🛍️ The Disney Drop Shop is coming to Disney Springs this month, designed specifically for live‑streaming and unboxing collectible merchandise.

⚡ Jack Dorsey-funded Vine reboot diVine brings back six-second video format with 100K+ archive clips and an AI ban

✨ Disney confirms gen-AI UGC coming to Disney+ with IP protection promises

📽️ Arri launches Film Lab plugin with stock-specific grain, halation simulation, and on-set preview for Alexa 35

📐 Freepik launches Camera Angles to rotate views and shift perspectives while preserving detail

Addy and Joey dive into Comfy Cloud's $20/month browser-based workflow, Nano Banana 2 leak rumors, and Veo 3.1's new camera controls.

Read the show notes or watch the full episode.

👔 Open Job Posts

Virtual Production (VP) Supervisor/Specialist - FT

Public Strategies

Oklahoma City, OK

📆 Upcoming Events

April 18-22, 2026

NAB Show

Las Vegas, NV

July 15-18, 2026

AWE USA 2026

Long Beach, CA

View the full event calendar and submit your own events here.

Thanks for reading VP Land!

Have a link to share or a story idea? Send it here.

Interested in reaching media industry professionals? Advertise with us.