AIsphere, the Alibaba-backed AI startup, released PixVerse R1, a real-time world model that generates 1080P video instantly as users interact with it. Unlike traditional AI video tools that produce fixed-length clips after processing delays, R1 creates continuous, interactive video streams that respond to text commands in the moment.

Key Takeaways:

Real-time 1080P generation responds instantly to user commands, enabling open-ended video with no predefined duration limit

World model architecture maintains physical consistency across extended sequences, preserving character identity, object properties, and environmental coherence

Three-part technical system combines a multimodal foundation model, an autoregressive framework, and an optimized inference engine to keep latency low enough for interactive use

Limited access available now via invite codes at realtime.pixverse.ai, with broader rollout expected as infrastructure scales

Competitive positioning focuses on interactivity rather than photorealism, complementing tools like Runway and Kling rather than replacing them

What R1 Actually Does

PixVerse R1 shifts AI video from a render-and-wait workflow to something closer to a video game engine. Users describe what they want to see through text prompts, and the system generates continuous video that adapts in real time. Tell it "run fast" and your character starts sprinting. Type "the room catches fire" and flames appear instantly.

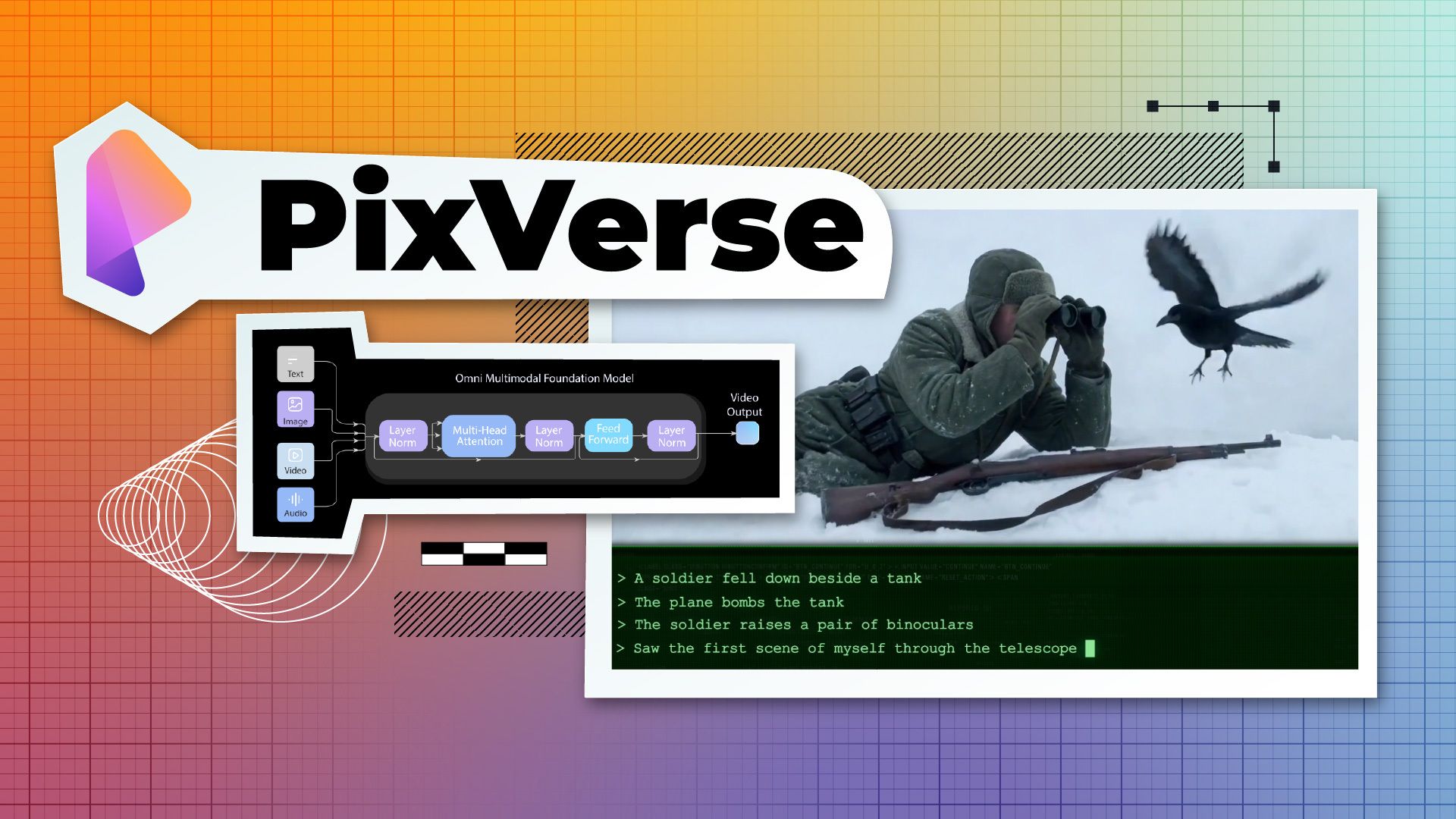

The system handles this through what PixVerse calls an "Omni Native Multimodal Foundation Model" that processes text, images, audio, and video through a unified architecture. Rather than passing data through separate processing stages, the model treats all inputs as a single token stream. This reduces the error accumulation that typically degrades output quality when chaining multiple AI systems together.
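PixVerse has not published how its model tokenizes inputs, so the following is only a minimal sketch of the general "single token stream" idea. It assumes each modality already has its own discrete codebook (the sizes below are hypothetical) and gives each codebook a disjoint slice of one shared vocabulary, so text, image, audio, and video tokens can sit side by side in one sequence that a single model reads.

```python
from dataclasses import dataclass

# Hypothetical codebook sizes per modality (illustrative, not PixVerse's values).
CODEBOOK_SIZES = {"text": 1000, "image": 512, "audio": 256, "video": 512}

# Give each modality a disjoint slice of one shared vocabulary.
OFFSETS = {}
_next = 0
for _name, _size in CODEBOOK_SIZES.items():
    OFFSETS[_name] = _next
    _next += _size
VOCAB_SIZE = _next


@dataclass
class Segment:
    modality: str
    codes: list[int]  # modality-local ids from a (hypothetical) per-modality tokenizer


def to_unified_stream(segments: list[Segment]) -> list[int]:
    """Flatten mixed-modality segments into one sequence of shared-vocabulary ids."""
    stream = []
    for seg in segments:
        size = CODEBOOK_SIZES[seg.modality]
        offset = OFFSETS[seg.modality]
        for code in seg.codes:
            if not 0 <= code < size:
                raise ValueError(f"code {code} out of range for {seg.modality}")
            stream.append(offset + code)
    return stream


def modality_of(token: int) -> str:
    """Recover which modality a shared-vocabulary id came from."""
    for name, offset in OFFSETS.items():
        if offset <= token < offset + CODEBOOK_SIZES[name]:
            return name
    raise ValueError(f"token {token} outside vocabulary")


stream = to_unified_stream([Segment("text", [5, 17]), Segment("video", [3, 3, 40])])
print(stream)  # [5, 17, 1771, 1771, 1808]
```

Because every input ends up in the same sequence, there is no hand-off between separate models where errors could be introduced, which is the benefit the paragraph above describes.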

The autoregressive generation approach enables theoretically unlimited video length. The model predicts each frame from the frames that came before, maintaining a memory of scene state that reduces the consistency drift common in longer AI-generated sequences. Characters keep their faces. Objects stay where they should be. Environments remain coherent as scenes evolve.
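The loop below is a toy sketch of open-ended autoregressive generation, not PixVerse's code. Frames are plain dicts, `predict_next` is a hypothetical stand-in for the model, and the "memory" is assumed to be a fixed-size window of recent frames. The generator never ends on its own; the caller decides how many frames to take, and a text command can change the next prediction at any step.

```python
from collections import deque
from itertools import islice
from typing import Callable, Iterator, Optional

Frame = dict  # toy stand-in for a frame's latent representation


def generate_stream(
    first_frame: Frame,
    predict_next: Callable[[list, Optional[str]], Frame],
    commands: Callable[[int], Optional[str]],
    memory_size: int = 16,
) -> Iterator[Frame]:
    """Yield frames indefinitely, each predicted from a rolling memory of earlier frames."""
    memory = deque([first_frame], maxlen=memory_size)  # oldest frames drop out automatically
    yield first_frame
    step = 1
    while True:  # no fixed duration: generation continues until the caller stops
        frame = predict_next(list(memory), commands(step))
        memory.append(frame)
        yield frame
        step += 1


def toy_predict(memory: list, command: Optional[str]) -> Frame:
    """Hypothetical predictor: keep the character, switch action when a command arrives."""
    last = memory[-1]
    return {"t": last["t"] + 1, "character": last["character"], "action": command or last["action"]}


user_commands = {3: "run fast"}  # the user types "run fast" at step 3
start = {"t": 0, "character": "hero", "action": "walk"}
frames = list(islice(generate_stream(start, toy_predict, user_commands.get), 5))
print([f["action"] for f in frames])  # ['walk', 'walk', 'walk', 'run fast', 'run fast']
```

A real world model conditions on learned latents rather than dict fields, but the control flow (predict, append to memory, repeat) is the same shape.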

The Technical Trade-offs

Achieving real-time performance required deliberate compromises. PixVerse reduced the typical diffusion sampling process from dozens of steps down to one to four steps through a technique called "temporal trajectory folding." This direct prediction approach trades some generation flexibility for the speed necessary for interactive use.
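A quick budget calculation shows why the step count matters. The sketch below uses hypothetical numbers (a 24 fps target and 10 ms per denoiser call) and assumes each sampling step is one sequential model call per frame with no batching or pipelining; it does not model "temporal trajectory folding" itself.

```python
import math


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame if output must keep pace with playback."""
    return 1000.0 / fps


def max_steps_per_frame(fps: float, step_cost_ms: float) -> int:
    """How many sequential denoiser calls fit inside one frame's time budget."""
    return math.floor(frame_budget_ms(fps) / step_cost_ms)


def achievable_fps(steps: int, step_cost_ms: float) -> float:
    """Frame rate you would get if every frame needed `steps` denoiser calls."""
    return 1000.0 / (steps * step_cost_ms)


# Hypothetical numbers for illustration only.
print(max_steps_per_frame(24, 10))  # 4
print(achievable_fps(50, 10))       # 2.0
print(achievable_fps(4, 10))        # 25.0
```

Under these assumptions, a 50-step sampler manages about 2 frames per second, while four steps fit inside the 24 fps budget, which is the trade-off the paragraph above describes.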

PixVerse describes the memory mechanism this way: "A memory-augmented attention mechanism conditions the generation of the current frame on the latent representations of the preceding context, ensuring the world remains physically consistent over long horizons."
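One common way to condition on preceding context is to let the current frame's queries attend over cached keys and values from earlier frames as well as its own. The sketch below is generic scaled dot-product attention in plain Python under that assumption; `memory_kv` and the toy vectors are hypothetical, and PixVerse's actual mechanism may differ.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    weights = softmax([dot(query, k) / math.sqrt(d) for k in keys])
    out = [0.0] * len(values[0])
    for w, v in zip(weights, values):
        for i, x in enumerate(v):
            out[i] += w * x
    return out, weights


def memory_augmented_attend(query, current_kv, memory_kv):
    """Attend over cached (key, value) latents from earlier frames plus the current frame's own."""
    keys = [k for k, _ in memory_kv] + [k for k, _ in current_kv]
    values = [v for _, v in memory_kv] + [v for _, v in current_kv]
    return attend(query, keys, values)


# Toy case: the query matches a latent stored from an earlier frame, not the current one.
memory_kv = [([10.0, 0.0], [1.0, 0.0])]
current_kv = [([0.0, 10.0], [0.0, 1.0])]
out, weights = memory_augmented_attend([10.0, 0.0], current_kv, memory_kv)
```

Here almost all the attention weight lands on the remembered latent, so the output is pulled toward what an earlier frame established. That is the property that helps a character's face or an object's position persist.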

The physics simulation learns approximate behaviors from training data rather than implementing precise physical calculations. For perceptual realism in interactive applications, this works well. For scientific or engineering simulations requiring exact physics, specialized engines remain necessary.

Extended sequences still accumulate prediction errors over time. Minor inaccuracies compound across thousands of frames, potentially causing subtle consistency degradation in very long sessions. The memory mechanism mitigates this significantly, but users generating hour-long sequences may notice spatial drift in complex scenes.
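A toy model makes the accumulation argument concrete. It assumes each frame adds a fixed prediction error `per_frame_error` and that a memory mechanism pulls back a fraction `correction` of the existing drift every frame. Both parameters are hypothetical, and real drift is neither linear nor one-dimensional.

```python
import math


def simulate_drift(per_frame_error: float, correction: float, frames: int) -> float:
    """Accumulated drift after `frames` steps of the toy error model."""
    drift = 0.0
    for _ in range(frames):
        drift = (1.0 - correction) * drift + per_frame_error
    return drift


def steady_state_drift(per_frame_error: float, correction: float) -> float:
    """Long-run drift level; unbounded when nothing corrects it."""
    if correction <= 0:
        return math.inf
    return per_frame_error / correction


HOUR_AT_24_FPS = 24 * 60 * 60  # 86,400 frames

uncorrected = simulate_drift(1e-5, 0.0, HOUR_AT_24_FPS)
corrected = simulate_drift(1e-5, 0.01, HOUR_AT_24_FPS)
print(uncorrected, corrected)
```

Without correction, drift grows in proportion to the frame count. With even a small correction, it levels off near `per_frame_error / correction`. In this model, memory mitigates drift but cannot drive it to zero while new errors keep arriving.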

How It Compares to Existing Tools

PixVerse R1 occupies different territory than established competitors. OpenAI's Sora generates photorealistic clips of up to 60 seconds with exceptional detail, but requires processing time and produces fixed-duration output. Runway Gen-4 offers professional editing integration and motion control. Kling excels at cinematic camera work with native audio generation. Pika prioritizes speed and creative effects for social media content.

R1's value proposition centers on interactivity. None of the established platforms offer real-time, continuous generation that responds instantly to user input. This makes R1 less a replacement for existing tools and more a new category suited to different applications: interactive entertainment, responsive simulations, adaptive educational content, and live visualization scenarios.

For filmmakers and content creators, the immediate relevance depends on workflow. Projects requiring polished, fixed-duration clips may find Runway or Kling more practical. Applications demanding real-time responsiveness or extended continuous generation represent R1's sweet spot.

Pricing and Access

PixVerse maintains tiered subscription plans across its platform. The Essential plan runs $100/month with approximately 15,000 credits. The Scale plan costs $1,500/month with 239,230 credits. Credit consumption varies by resolution: 1080P five-second clips consume roughly 120 credits under standard settings.
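The plan figures above translate into a rough per-clip cost. The sketch uses only the numbers quoted in this section, which are themselves approximate ("approximately 15,000", "roughly 120 credits"). It assumes standard-settings 1080P clips and does not cover R1, whose billing during the invite period is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    usd_per_month: float
    credits_per_month: int


# Figures quoted in the article; treat them as approximate.
PLANS = [Plan("Essential", 100, 15_000), Plan("Scale", 1_500, 239_230)]
CREDITS_PER_1080P_5S_CLIP = 120  # standard settings, approximate
CLIP_SECONDS = 5


def clips_per_month(plan: Plan, credits_per_clip: int = CREDITS_PER_1080P_5S_CLIP) -> int:
    """Whole five-second 1080P clips a month's credits can cover."""
    return plan.credits_per_month // credits_per_clip


def usd_per_clip(plan: Plan, credits_per_clip: int = CREDITS_PER_1080P_5S_CLIP) -> float:
    return plan.usd_per_month / clips_per_month(plan, credits_per_clip)


for plan in PLANS:
    n = clips_per_month(plan)
    minutes = n * CLIP_SECONDS / 60
    print(f"{plan.name}: {n} clips (~{minutes:.0f} min), ${usd_per_clip(plan):.2f}/clip")
# Essential: 125 clips (~10 min), $0.80/clip
# Scale: 1993 clips (~166 min), $0.75/clip
```

On these numbers the Scale plan's per-clip discount is modest (about 6 percent). Its main difference is volume: roughly 16 times the monthly output.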

R1 specifically requires invite codes during its current limited release. The company distributes access gradually while scaling infrastructure. Users can request access through realtime.pixverse.ai.

The broader PixVerse platform reached 100 million users by mid-2025 and generates over $40 million in annual recurring revenue. Alibaba led a $60 million Series B in late 2025, reflecting institutional confidence in the platform's direction.

What This Means for Media Production

Real-time world models represent an emerging capability rather than a mature production tool. The technology points toward futures where pre-visualization happens interactively, where game environments generate dynamically, and where educational content adapts to learner input without pre-rendering every possible branch.

For immediate production workflows, R1 functions best as an exploration and prototyping tool. The ability to iterate on visual concepts in real time, testing different directions instantly, offers value in early creative phases. Final delivery still favors tools optimized for polished output rather than interactive generation.

The competitive response will likely accelerate development across the industry. OpenAI, Runway, and other major players possess the research capabilities to pursue similar real-time approaches. Whether R1 establishes a lasting lead or catalyzes industry-wide adoption of interactive generation remains to be seen.

What's Next

PixVerse plans broader R1 access as its infrastructure scales to meet demand. R1's library of preset worlds will likely expand, and integration with professional workflows may develop as the platform matures. Creators interested in exploring the technology can request early access at realtime.pixverse.ai.

For media professionals evaluating AI video tools, R1 merits attention as a different approach to generation rather than an incremental improvement on existing methods. The shift from fixed clips to interactive worlds opens applications that previous tools couldn't address. Whether those applications align with your production needs determines whether R1 belongs in your toolkit now or remains one to watch as the technology develops.