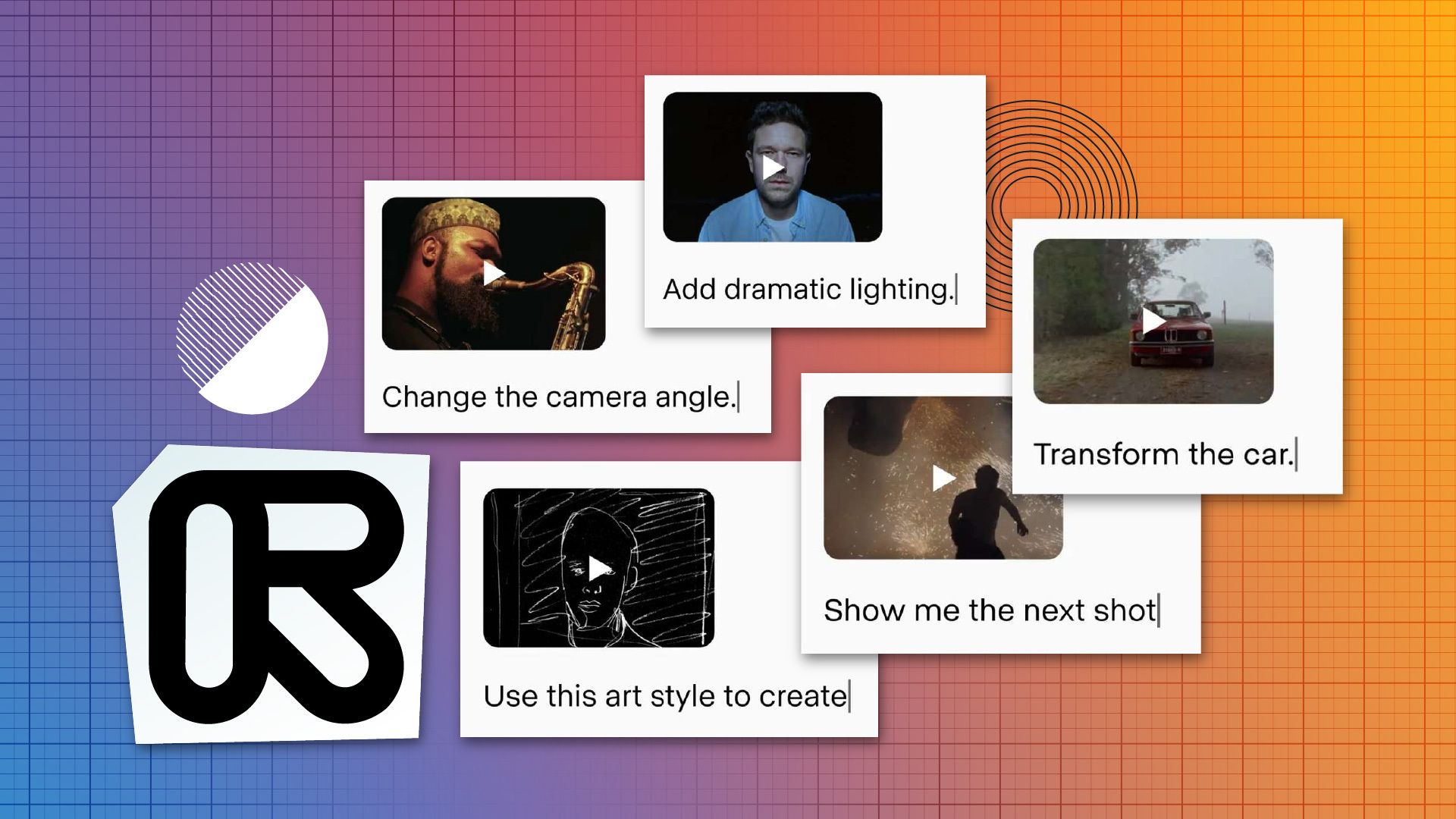

Runway has launched Aleph, an AI-powered video model that consolidates object manipulation, scene generation, and style modification into one unified system. The platform lets users add, remove, or transform objects within existing video clips and generate new camera angles from the same footage.

Seemingly inspired by Jorge Luis Borges' short story "The Aleph", Aleph represents a shift from specialized AI tools to comprehensive video editing, allowing creators to perform multiple complex operations without switching between applications or needing extensive technical expertise.

Multi-Angle Magic: Aleph generates different perspectives of any scene, creating virtual camera moves and reconstructed viewpoints from source footage.

The technology addresses a longstanding challenge in post-production by offering what Runway calls "multi-task visual generation." Instead of using separate tools for object removal, style changes, and scene extensions, editors can perform these operations within a single contextual workflow.

Key capabilities include:

Object-level precision - Add or remove elements with temporal consistency across frames

Scene transformation - Generate new views or angles without additional filming

Style and lighting control - Modify visual aesthetics and lighting conditions

Web-based accessibility - No software downloads required; runs entirely in the browser

The official announcement positions Aleph as building on Runway's previous Gen series, including Gen-4 Turbo, which brought improved physics and faster generation speeds.

Behind the Lens: The model's contextual understanding allows for consistent multi-step edits that consider temporal and spatial relationships across video frames.

Aleph's technical foundation centers on what Runway describes as unified, contextual processing. Unlike previous "point solution" AI tools that handle single tasks, the system analyzes entire video contexts to maintain consistency across complex edits.

The platform demonstrates several technical advances:

3D video understanding - Synthesizes different camera angles and virtual cinematography

Advanced object tracking - Maintains temporal coherence when inserting or removing elements

Cinematic style interpretation - Replicates and blends lighting scenarios naturally into existing footage

Performance optimization - Faster processing than Runway's previous models

This approach streamlines workflows that previously required multiple specialized applications and expert knowledge of different software systems.

Production Pipeline: Studios and VFX houses can now address continuity errors, extend sets, and create multiple product variants without reshoots.

The platform's applications span several areas of media production, from large-scale studio work to independent content creation. Netflix has reportedly begun testing Runway's AI video suite in content workflows, which suggests growing industry interest in production automation.

Primary use cases include:

VFX and compositing - Fix continuity issues or extend environments in post-production

Virtual cinematography - Create new shot angles after principal photography wraps

Advertising efficiency - Generate multiple campaign variants from single shoots

Global content adaptation - Modify backgrounds and elements for different markets

The browser-based approach removes traditional barriers like expensive hardware requirements or complex software installations, potentially expanding access to advanced video effects capabilities.

Final Cut: As AI video tools mature, Aleph signals a shift toward consolidating post-production workflows while potentially reshaping traditional VFX labor markets.

The introduction of comprehensive AI video editing platforms like Aleph suggests the industry is moving beyond experimental tools toward production-ready systems. This evolution could significantly impact how studios approach post-production budgeting and staffing decisions.

The implications extend beyond technical capabilities to fundamental questions about creative workflows, job roles in post-production, and the balance between AI automation and human creative input. As these tools become more accessible, the traditional boundaries between different production roles may continue to blur.

For media professionals, Aleph represents both opportunity and challenge - offering powerful new creative possibilities while requiring adaptation to a rapidly evolving technological landscape. The platform's broader release timeline and integration capabilities will likely determine its long-term impact on industry workflows and creative processes.