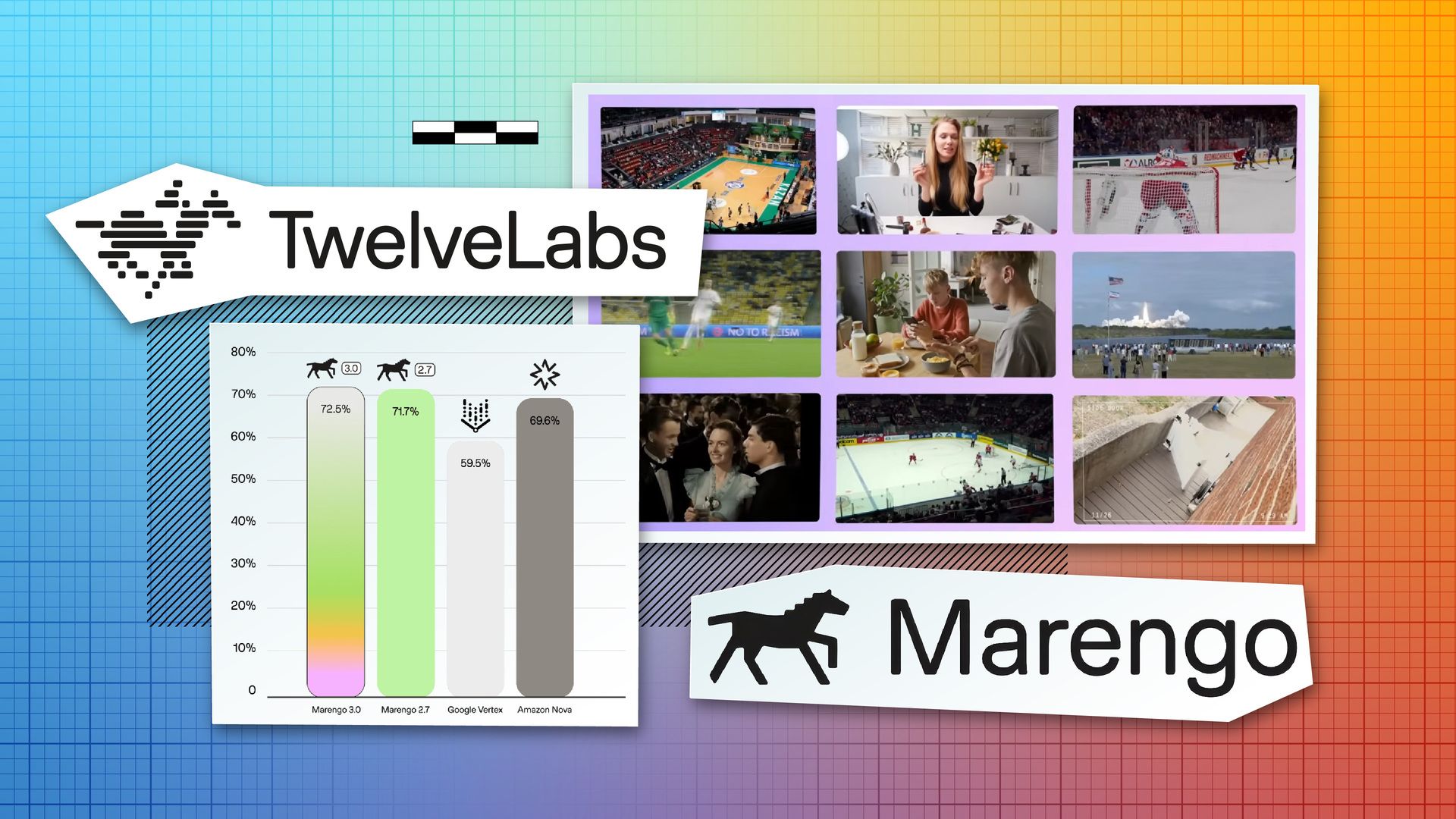

TwelveLabs launched Marengo 3.0, a video understanding model designed for production deployment rather than benchmark optimization. The company claims it's 6x more storage-efficient than Amazon Nova while processing video 30x faster.

Key Details

Speed advantage - Marengo 3.0 processes video at 0.05 seconds per second of content, compared with 0.5 seconds per second for Google Vertex and 1.5 seconds per second for Amazon Nova. For a 1,000-hour video library, that's the difference between 50 hours of compute time for Marengo 3.0, 500 hours for Vertex, and 1,500 hours for Nova.
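The compute-time comparison is plain multiplication. The quoted rates are unitless (compute seconds per second of video), so they convert hours of video directly into hours of compute. The Python sketch below reproduces the 1,000-hour figures. The `RATES` table and `compute_hours` helper are illustrative names, and the calculation ignores batching, parallelism, and queueing, all of which would change real wall-clock time.

```python
# Processing rates quoted in the article, in seconds of compute per second of video.
RATES = {
    "Marengo 3.0": 0.05,
    "Google Vertex": 0.5,
    "Amazon Nova": 1.5,
}


def compute_hours(library_hours: float, rate: float) -> float:
    """Compute hours needed to process `library_hours` of video at `rate`.

    The rate is compute-seconds per video-second, a unitless ratio, so it
    converts hours of video to hours of compute directly.
    """
    return library_hours * rate


if __name__ == "__main__":
    for model, rate in RATES.items():
        print(f"{model}: {compute_hours(1_000, rate):,.0f} compute hours")
```

Run as a script, this prints 50, 500, and 1,500 compute hours respectively. The 1.5 / 0.05 ratio is also where the 30x speed claim against Nova comes from.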

Composed retrieval - The model combines image and text inputs into a single query embedding, a capability the company says competing models don't offer. On TwelveLabs' basketball action search benchmark, adding a single image to a text-only query raised accuracy from 34.4% to 38.5%.
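The article doesn't say how Marengo 3.0 fuses image and text into one query embedding. The sketch below uses a deliberately naive stand-in, a weighted average of the L2-normalized text and image vectors, only to show how a composed query can re-rank results. The toy 3-dimensional vectors, clip names, and the `compose_query` and `search` helpers are all hypothetical.

```python
import math
from typing import Dict, List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def compose_query(text_emb: Sequence[float], image_emb: Sequence[float],
                  text_weight: float = 0.5) -> List[float]:
    """HYPOTHETICAL fusion: weighted average of the normalized text and image
    embeddings, renormalized. A stand-in, not Marengo 3.0's actual method."""
    t, i = normalize(text_emb), normalize(image_emb)
    mixed = [text_weight * a + (1 - text_weight) * b for a, b in zip(t, i)]
    return normalize(mixed)


def search(query: Sequence[float], index: Dict[str, Sequence[float]],
           k: int = 3) -> List[str]:
    """Linear-scan search: return ids of the k most cosine-similar index vectors."""
    q = normalize(query)
    scores = {vid: sum(a * b for a, b in zip(q, normalize(vec)))
              for vid, vec in index.items()}
    return sorted(scores, key=lambda vid: scores[vid], reverse=True)[:k]


# Toy 3-dimensional "embeddings" (hypothetical); real model vectors are far larger.
INDEX = {
    "dunk_clip": [0.9, 0.1, 0.4],
    "layup_clip": [0.8, 0.5, 0.0],
    "free_throw_clip": [0.1, 0.9, 0.1],
}
TEXT_QUERY = [1.0, 0.3, 0.0]   # hypothetical embedding of a text query
IMAGE_QUERY = [0.0, 0.0, 1.0]  # hypothetical embedding of a reference image

if __name__ == "__main__":
    print("text only:", search(TEXT_QUERY, INDEX, k=1))
    print("text + image:", search(compose_query(TEXT_QUERY, IMAGE_QUERY), INDEX, k=1))
```

With these toy vectors, the text-only query ranks `layup_clip` first. The composed query promotes `dunk_clip` because the image vector pulls the query toward the third dimension. A production system would use the model's real embeddings and an approximate-nearest-neighbour index instead of a linear scan.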

Sports-specific training - On SoccerNet-Action, Marengo 3.0 reached 79.4% accuracy, compared with 23.0% for Nova and 21.5% for Vertex. That is roughly a 3.5x lead over the nearest competitor on fine-grained action recognition.

Efficiency design - Marengo 3.0 uses 512-dimension embeddings, versus 3,072 dimensions for Amazon Nova and 1,408 for Google Vertex. Smaller vectors directly reduce vector-database storage costs and search latency.
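Embedding dimension translates directly into storage. The sketch below assumes every model's vectors are stored uncompressed as float32, at 4 bytes per dimension. That format is not stated in the article. Under that assumption, it computes the raw vector payload of an index. The helper name and the 10-million-vector example are hypothetical.

```python
# Assumption: vectors stored uncompressed as float32 (4 bytes per dimension).
BYTES_PER_DIM = 4

# Embedding sizes quoted in the article.
DIMENSIONS = {
    "Marengo 3.0": 512,
    "Google Vertex": 1408,
    "Amazon Nova": 3072,
}


def raw_index_gib(num_vectors: int, dims: int, bytes_per_dim: int = BYTES_PER_DIM) -> float:
    """Raw vector payload in GiB, excluding index structures and metadata."""
    return num_vectors * dims * bytes_per_dim / 2**30


if __name__ == "__main__":
    n = 10_000_000  # hypothetical number of stored segment embeddings
    for model, dims in DIMENSIONS.items():
        ratio = dims / DIMENSIONS["Marengo 3.0"]
        print(f"{model}: {raw_index_gib(n, dims):.1f} GiB ({ratio:.2f}x Marengo)")
```

At equal precision, the ratios don't depend on the data type. 3,072 / 512 = 6, which matches the 6x storage claim against Nova, and 1,408 / 512 = 2.75 against Vertex. Real vector databases add index overhead on top of this raw payload.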

Native multilingual support - On the Crossmodal-3600 benchmark, Marengo 3.0 achieved state-of-the-art results in Korean (87.2% R@5) and matched best-in-class performance in Japanese (91.1%).
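In cross-modal retrieval, R@5 (recall at 5) is usually reported as the fraction of queries for which a correct match appears among the top five results. The sketch below implements that common definition. The article doesn't describe Crossmodal-3600's exact evaluation protocol, so treat `recall_at_k` and the toy inputs as illustrative.

```python
from typing import AbstractSet, Sequence


def recall_at_k(ranked: Sequence[Sequence[str]],
                relevant: Sequence[AbstractSet[str]], k: int = 5) -> float:
    """Fraction of queries with at least one relevant id among their top-k results.

    ranked[q] is the retrieval order for query q; relevant[q] is its set of
    correct ids.
    """
    if len(ranked) != len(relevant) or not ranked:
        raise ValueError("need a non-empty, equal number of rankings and relevance sets")
    hits = sum(1 for order, rel in zip(ranked, relevant)
               if any(item in rel for item in order[:k]))
    return hits / len(ranked)


if __name__ == "__main__":
    # Two toy queries: the first hits at rank 3, the second only at rank 6.
    ranked = [["a", "b", "c", "d", "e", "f"], ["x", "y", "z", "w", "v", "u"]]
    relevant = [{"c"}, {"u"}]
    print(recall_at_k(ranked, relevant))       # 0.5
    print(recall_at_k(ranked, relevant, k=6))  # 1.0
```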

The model is available now through Amazon Bedrock for enterprise deployments, and through TwelveLabs' own Search API and Embed API with Python and Node.js SDKs. TwelveLabs plans to add hybrid semantic-lexical search and extended composed retrieval capabilities.