In this week’s fast-moving tech roundup, Addy and Joey unpack the tools and research reshaping filmmaking, from a $5K LiDAR camera and a realtime AI video generator to on-device vision models and mini AI workstations. Together they signal a future where capture, creation, and AI inference converge, both on set and on the desktop.

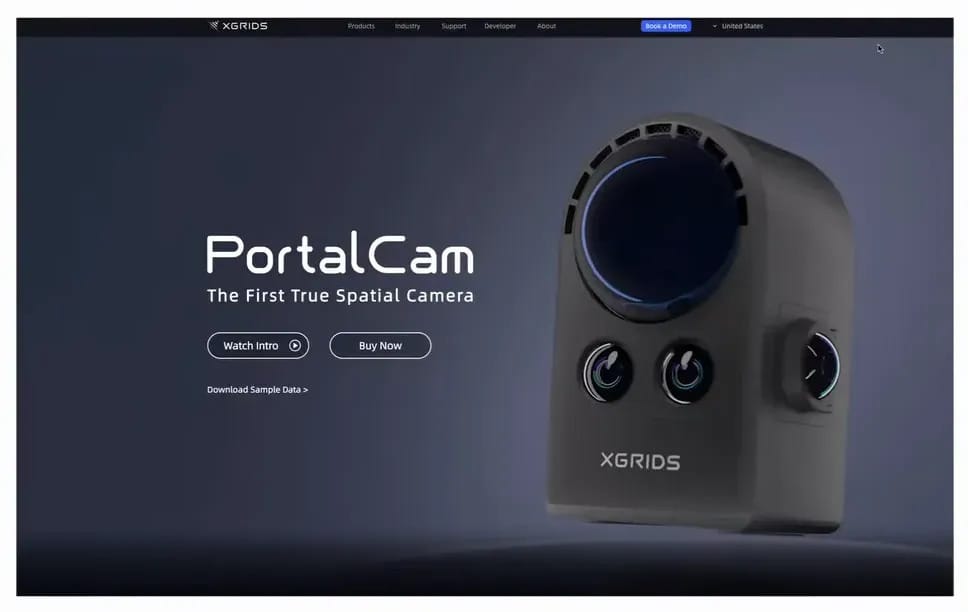

XGRIDS PortalCam: Spatial capture for $5,000

The standout product of the week is the PortalCam from XGRIDS. Professional LiDAR and photogrammetry scanners have traditionally cost anywhere from roughly $15K to well over $100K, and that equipment is built for survey-grade range and accuracy rather than cinematic fidelity. PortalCam changes that calculus. At $5,000, it targets filmmakers directly: a lighter scanner with a four-camera array (two fisheye and two forward-facing sensors) and SLAM tracking for rich spatial captures.

Key practical points for production:

Speed vs. detail tradeoff: the slower and closer an operator scans a surface, the more geometry and texture the device captures, which is useful for dialing in high detail on props or set dressing.

Location scouting & measurements: teams can scan a location and later take accurate measurements, plan shots in virtual space, or share scans with remote crew for virtual scouting.

Integration & export: PortalCam’s software aims to bridge the gap for filmmakers not fluent in 3D tools, while also allowing export to Unreal, Blender, or RealityCapture workflows.

For VFX pipelines this is a bread-and-butter tool: accurate geometry and high-resolution textures speed up look development, matchmoving, and set augmentation. If PortalCam integrates tightly with RealityCapture or exports optimized Gaussian splats, it becomes even more production-ready for LED walls and virtual production stages.
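Once a location is scanned, taking measurements is plain geometry on the exported points. The sketch below is a minimal illustration under loud assumptions: it assumes the scan has been exported as a plain-text point list (one `x y z` point per line, in meters), and that layout, the helper names (`parse_xyz`, `distance`, `extents`) and the sample coordinates are all hypothetical rather than PortalCam’s actual export format or software. Picking two points gives a wall-to-wall distance, and the bounding box gives a room’s rough dimensions.

```python
import math
from typing import Iterable, List, Tuple

# Hypothetical export layout: one "x y z" point per line, units assumed to be meters.
Point = Tuple[float, float, float]


def parse_xyz(text: str) -> List[Point]:
    """Parse whitespace-separated 'x y z' lines, skipping blanks, comments and short lines."""
    points: List[Point] = []
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue
        parts = stripped.split()
        if len(parts) < 3:
            continue
        x, y, z = (float(v) for v in parts[:3])
        points.append((x, y, z))
    return points


def distance(a: Point, b: Point) -> float:
    """Straight-line (Euclidean) distance between two picked points."""
    return math.dist(a, b)


def extents(points: Iterable[Point]) -> Point:
    """Axis-aligned bounding-box size of the scan along x, y and z."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


# Hypothetical excerpt of an exported room scan (not real PortalCam data).
SCAN = """
# x y z
0.0 0.0 0.0
4.2 0.0 0.0
4.2 3.1 0.0
0.0 3.1 2.7
"""

points = parse_xyz(SCAN)
print(f"wall length: {distance(points[0], points[1]):.2f} m")
print("room extents (x, y, z):", extents(points))
```

A real scan holds millions of points, so in practice the points you measure between would be picked in a viewer or DCC tool; the arithmetic stays the same.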

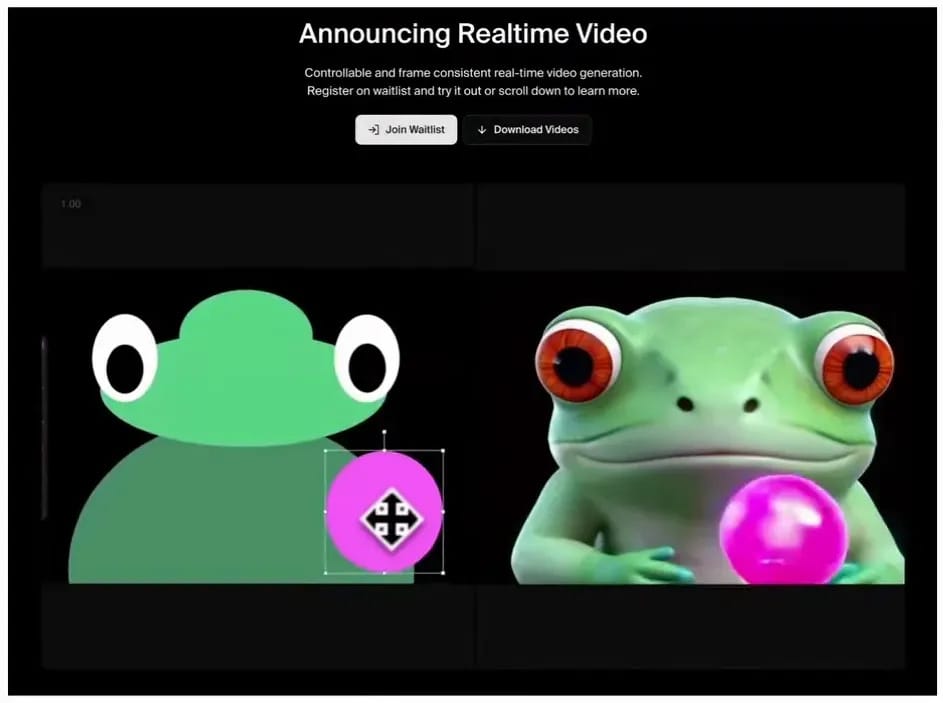

Krea Realtime Video: The coming fork between quality and immediacy

Krea teased a realtime video generator that preserves temporal consistency while letting users drive scenes with simple shapes and other inputs. Krea’s realtime interface previously made waves for on-the-fly image generation; the leap is that video now holds together from frame to frame.

Why this is important:

Rapid ideation & previs: filmmakers can iterate on camera blocking, framing, and lighting ideas in seconds, which is valuable during pre-production and early design passes.

Performance & puppeteering: artists could “play” a composition in real time, driving character movement or changing background elements while recording the session.

Game design & interactive art: even if photorealism lags, a realtime renderer that accepts vector inputs can power 2D and experimental games as well as immersive performances.

Krea’s demo hints at both cloud and device constraints. If similar tools run locally at scale, games and interactive installations can respond to input immediately. If they remain cloud-centric, latency and cost will limit on-the-spot creative iteration. Either way, this marks a fork between high-quality batch generation and lower-fidelity instantaneous creation, and the two will coexist.

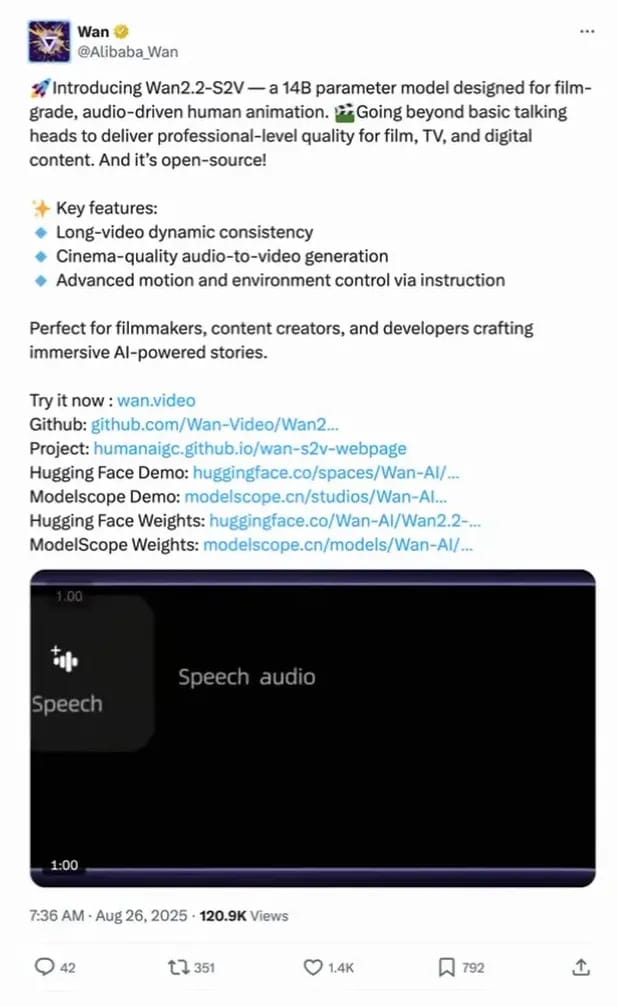

Wan2.2-S2V: Speech-driven avatar animation

Alibaba’s Wan2.2-S2V is a new iteration of the Wan model family focused on speech-to-video. Give it a static character image and an audio file, and it generates believable lip-sync and facial animation that matches the performance. Notably, the model can run locally and integrates with ComfyUI, so developers and creators can test it and build it into their workflows without cloud costs.

Production implications:

Rapid content generation: small studios and indie creators can produce talking characters and animated hosts quickly for promos, explainers, or low‑budget VFX tests.

Local workflows: running locally avoids network round-trips and keeps footage off third-party servers, and it fits into offline editorial or on-set review loops.

Audio remains key: these systems expect an audio input (a human recording or TTS), so voice direction and performance still matter. The model amplifies the actor’s intent rather than replacing it.

HunyuanVideo-Foley and the return of sound

One often-overlooked area in AI film tooling is Foley and environmental sound. HunyuanVideo-Foley analyzes footage and produces plausible Foley tracks (footsteps, cloth rustle, ambient interactions), the audio textures that sell a synthetic world.

Why this is worth attention:

Sound sells the image: even rough Foley can enhance believability and speed temp edits.

Lightweight inference: audio models are often smaller and faster than video models, which makes on-device deployment more feasible.

Not production‑ready yet: the current output is promising but not feature‑film quality; expect rapid iteration in future versions.

Apple’s FastVLM & MobileCLIP2: Vision models on device

Apple quietly published its FastVLM and MobileCLIP2 models on Hugging Face. These models enable real-time video understanding and live captioning, and, crucially, they can run locally, even inside a web browser. For a company long criticized for slow public-facing AI efforts, this is a strategic pivot: smaller, optimized models that leverage Apple’s hardware to act as perceptual assistants.

Potential uses:

On-device assistants: an iPhone camera paired with local VLMs could recognize locations, people, and objects and offer contextual recommendations, which is useful for location managers and on-set coordinators.

Accessibility & live captions: filmmakers can get immediate interactive captions for dailies, playback, or director reviews without cloud processing.

Offline reliability: local models work without a network connection, which is handy on remote shoots or aboard aircraft where cloud services are unavailable.

Acer Veriton GN100 AI mini workstation: $4,000 AI desktop

Acer unveiled the Veriton GN100, a mini AI workstation built on NVIDIA’s GB10 Grace Blackwell Superchip and priced at roughly $4,000. Compact AI desktops like this are emerging as the baseline for teams that want local inference without provisioning full server racks.

Who benefits:

Developers & researchers: local prototyping, fine‑tuning, and inference for mid‑scale models.

VFX and post teams: as AI tools take on more of editing and asset creation, local workstations reduce cloud costs and help teams maintain security and control over their media.

Scale-up options: units can be linked together to handle larger models, making the mini workstation a modular building block.
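Whether a model fits on one box or needs a linked pair is mostly memory arithmetic. The sketch below is a rough estimate under stated assumptions: 128 GB of unified memory per GB10-based machine, an 80% headroom factor (our own assumption, leaving room for the KV cache, activations and the OS), and a model that can be sharded evenly across linked units. The function names are hypothetical, and real footprints vary with the runtime, context length and quantization format.

```python
def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    (params_billions * 1e9) * (bits / 8) bytes / 1e9 simplifies to params * bits / 8.
    Counts weights only; KV cache, activations and runtime overhead are ignored.
    """
    return params_billions * bits_per_param / 8


def fits(params_billions: float, bits_per_param: float,
         memory_gb_per_node: float = 128.0, nodes: int = 1,
         headroom: float = 0.8) -> bool:
    """True if the weights fit within `headroom` of the memory pooled across `nodes`.

    Assumes the model can be sharded evenly across linked machines.
    """
    budget = memory_gb_per_node * nodes * headroom
    return weight_memory_gb(params_billions, bits_per_param) <= budget


for params, bits, nodes in [(70, 16, 1), (70, 4, 1), (200, 4, 1), (405, 4, 1), (405, 4, 2)]:
    need = weight_memory_gb(params, bits)
    ok = fits(params, bits, nodes=nodes)
    print(f"{params}B @ {bits}-bit on {nodes} node(s): ~{need:.1f} GB of weights, fits={ok}")
```

Under these assumptions, a 4-bit 405B-parameter model (about 202.5 GB of weights) overflows a single 128 GB machine but fits across two, which is the practical case for linking units.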

Adobe Premiere on iPhone: editing where creators live

Adobe brought Premiere to the iPhone with Firefly integration and generative sound effects. This is a clear nod to mobile-native creators: vertical-first editors, social storytellers, and anyone who needs to cut quick, publish-ready pieces on the go. For studios, it also serves as an on-ramp, with young editors starting on a phone and graduating to desktop Premiere.

Practical notes:

Mobile-first editing: rich multitrack timelines and dynamic waveforms on a phone shrink turnaround time for short-form content.

Cloud sync potential: the real utility will be smooth Creative Cloud integration and two‑way project sync to desktop workflows.

Generative assets: Firefly and generative sound effects can speed placeholder creation and early concept reels.

HunyuanWorld-Voyager: Explorable worlds with 3D export

HunyuanWorld-Voyager expands the idea of world models by offering longer-range traversal and direct 3D output (point clouds and scene exports). Instead of producing a short linear video, it builds explorable environments whose geometry can be exported to game engines or used for virtual production tests.

How productions might use it:

Rapid prototyping: blocked‑out worlds for previs and concept exploration without manual worldbuilding.

Game and sim integration: exportable point clouds let teams convert generated worlds into usable assets for gameplay or navigation tests.

Autonomy & robotics: long‑range, persistent scene generation has overlap with autonomous vehicle simulation and robotics research.

Google Flow: Unlimited Veo 3 Fast for AI Ultra subscribers

Google Flow now offers unlimited Veo 3 Fast generations on Google’s $250/month AI Ultra tier. The strategy mirrors an effective workflow: iterate quickly and cheaply in a fast mode to find good prompts, then run the final, higher-quality render. Making the fast mode unlimited removes friction and keeps exploratory drafts from eating into credits, which encourages experimentation.
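The draft-then-final workflow can be sketched as a small loop. This is a minimal illustration, not Google Flow’s API: `render_fast`, `render_final` and `score` are hypothetical caller-supplied functions, and the scorer is a placeholder for what is really a director’s or editor’s judgment. What the sketch shows is the cost structure: however many drafts you explore, only one prompt reaches the expensive render.

```python
from typing import Callable, Dict, Sequence, Tuple


def draft_then_final(
    prompts: Sequence[str],
    render_fast: Callable[[str], str],
    render_final: Callable[[str], str],
    score: Callable[[str], float],
) -> Tuple[str, str]:
    """Draft every prompt in fast mode, then spend one quality render on the winner.

    All three callables are hypothetical stand-ins; none is a real Flow or Veo API.
    """
    if not prompts:
        raise ValueError("need at least one prompt")
    drafts: Dict[str, str] = {p: render_fast(p) for p in prompts}
    best = max(prompts, key=lambda p: score(drafts[p]))
    return best, render_final(best)


# Hypothetical stubs that count calls instead of generating video.
calls = {"fast": 0, "final": 0}


def fake_fast(prompt: str) -> str:
    calls["fast"] += 1
    return f"draft:{prompt}"


def fake_final(prompt: str) -> str:
    calls["final"] += 1
    return f"final:{prompt}"


prompts = ["wide dusk shot", "close-up, rain on neon glass", "overhead crane move"]
# Placeholder scorer (string length); in practice a person picks the best draft.
best, clip = draft_then_final(prompts, fake_fast, fake_final, score=len)
print(best, clip, calls)
```

With unlimited fast generations, the number of drafts in a loop like this stops being a budget decision; only the final render would draw on credits.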

Takeaways for filmmakers and small studios

The week’s developments accelerate three practical trends:

Democratized capture: lower-cost LiDAR and photogrammetry tools (PortalCam) mean more teams can bring accurate reference geometry into their pipeline.

Realtime ideation: realtime video generators and local VLMs let creatives iterate in minutes rather than days, unlocking new workflows for previs, performance, and interactive art.

Local inference & hybrid workflows: locally runnable models (Apple’s FastVLM, Alibaba’s Wan models) plus affordable mini workstations shift heavy lifting toward local hardware, improving latency, privacy, and offline capability.

Final thoughts

This week shows the industry moving beyond proof‑of‑concept demos toward practical tooling. PortalCam suggests spatial capture will stop being a boutique service and become a daily production asset. Krea’s realtime video and Wan’s speech‑to‑video point to a future where iteration and character animation are immediate. Apple’s device‑friendly models and Acer’s mini workstation remind teams that running AI locally is increasingly realistic.

For filmmakers, the question shifts from “Will AI help?” to “How will we re-architect our process to take advantage of instant capture, fast ideation, and low-latency on-device inference?” The answer will likely come through new hybrid workflows: quick AI passes for ideation and temp elements, and human craft for final performance, color, and nuance. Sound will remain vital: Foley and ambient cues still do more to sell an image than pixels alone. Teams that pair strong audio design with these AI tools will get the biggest creative lift.