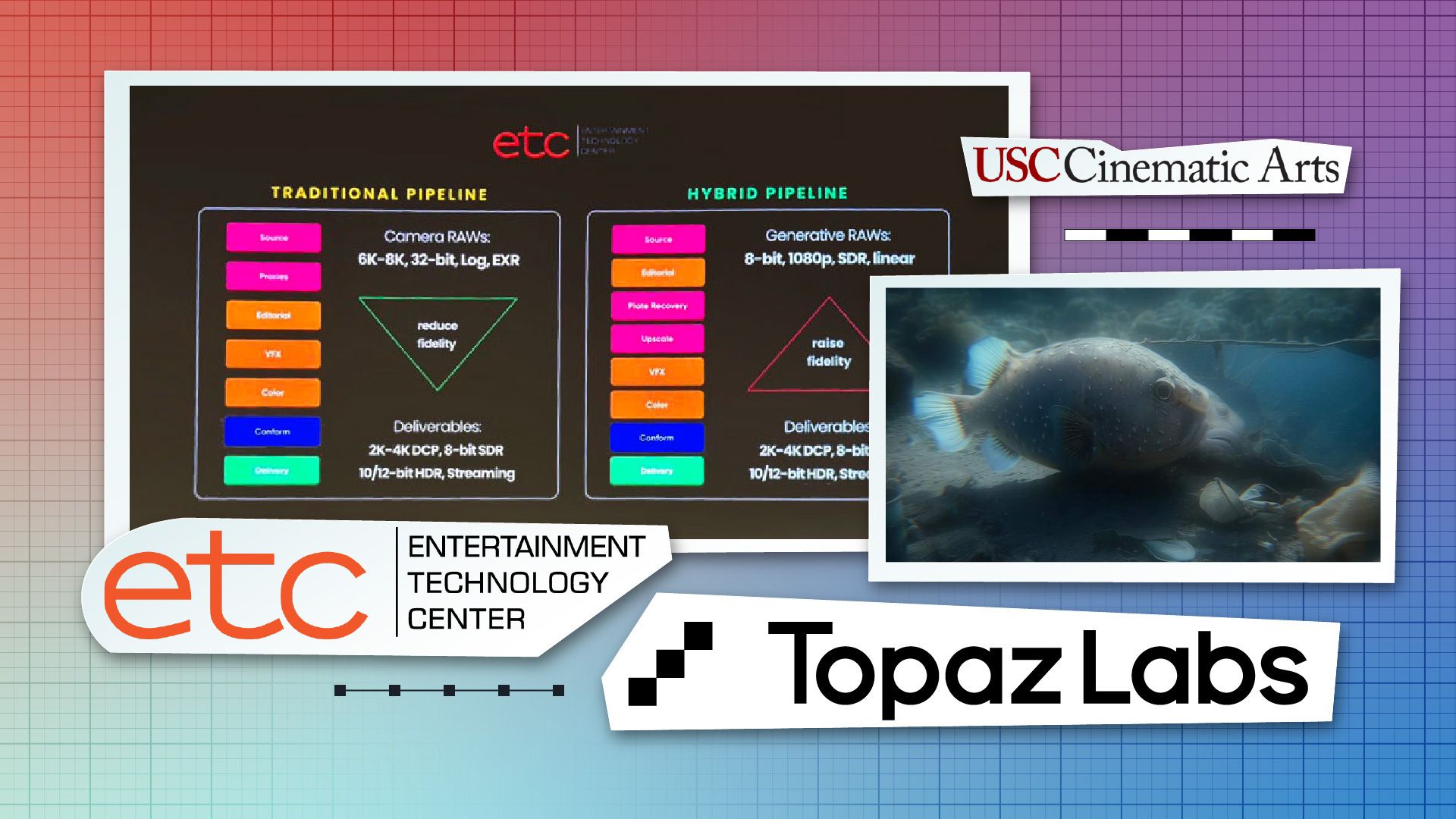

USC's Entertainment Technology Center developed a groundbreaking three-step pipeline using Topaz Video AI to transform compressed 8-bit AI-generated footage into 16-bit HDR EXR files suitable for theatrical screening. Their work on The Bends addresses a fundamental challenge: how to build fidelity UP rather than manage it DOWN in AI-generated content workflows.

The project demonstrates that while AI video models generate impressive content, their native outputs—720p H.264 files with 8-bit color depth—fall far short of cinema standards. USC's solution inverts traditional post-production, starting with low-fidelity source material and systematically adding professional-grade characteristics through carefully orchestrated upscaling steps.

Breaking the Quality Ceiling: Current AI Video Limitations Exposed

AI video generation models face a fundamental delivery problem. Native outputs typically arrive as 720p-1080p H.264 files in 8-bit SDR, using the sRGB color space, which covers only about 35.9% of the colors the human eye can see (measured on the CIE 1931 chromaticity diagram). These files contain compression artifacts, banding, and staircase patterns that become magnified when the image is enlarged for theatrical presentation.

"We started with an 8-bit SDR, which is almost like your proxy," explains Sahil Lulla, Head of Innovation at Bitrate Bash and R&D lead on The Bends at USC's Entertainment Technology Center. "You have to raise fidelity through each downstream step."

This creates what USC calls the "inverted pipeline problem." Traditional filmmaking workflows start with high-fidelity camera originals, often 6-8K log or raw footage recorded at far higher bit depth than 8-bit, and reduce fidelity at each downstream step toward final delivery. AI-generated content demands the opposite: building fidelity up from limited source material until it meets cinema standards.

The team discovered that simply applying standard upscaling or color space conversions to raw AI footage produces broken, unusable results. Compression artifacts magnify during upscaling, and the limited 8-bit color information cannot support aggressive color grading without falling apart.
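
To make the grading problem concrete, the short Python sketch below stores a smooth shadow gradient at 8 and 16 bits, applies the same 4x gain a colorist might use to lift shadows, and counts how many distinct tonal levels survive. It is an illustration under simple assumptions, not USC's test; the function name `graded_levels` and all parameter values are hypothetical.

```python
import numpy as np


def graded_levels(bits: int, gain: float = 4.0, samples: int = 4096) -> tuple[int, float]:
    """Store a dark ramp at `bits` of precision, push it with `gain`, and
    return (distinct output levels, largest jump between adjacent levels)."""
    ramp = np.linspace(0.0, 0.25, samples)          # smooth gradient in the darkest quarter of the range
    max_code = 2**bits - 1
    stored = np.round(ramp * max_code) / max_code   # quantize to integer code values, as a file would
    graded = np.clip(stored * gain, 0.0, 1.0)       # aggressive lift applied in the grade
    levels = np.unique(graded)
    return int(levels.size), float(np.max(np.diff(levels)))


for bits in (8, 16):
    count, step = graded_levels(bits)
    print(f"{bits}-bit source: {count} levels after grading, largest step {step:.5f}")
```

After the lift, the 8-bit version is left with 65 levels spread across the full range, so each step is about 4/255 of full scale, coarse enough to show up as banding on a smooth gradient. The 16-bit version keeps all 4,096 samples distinct.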

The Three-Step Solution: Topaz Models in Sequential Formation

USC's workflow employs Topaz Video AI's specialized models in careful sequence, with each step addressing specific technical limitations before proceeding to the next enhancement stage.

Step 1: Repair with Nyx Model

The process begins with Topaz's Nyx model, originally designed for denoising but repurposed for compression artifact removal. This step occurs at source resolution (720p/1080p) to address banding and macroblocking before any upscaling magnifies these problems.
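
The ordering matters because upscaling enlarges every artifact along with the image. The arithmetic below assumes UHD 4K (3840x2160) as the target; DCI 4K (4096x2160) would shift the numbers slightly. The block size is H.264's 16x16 macroblock, and `scale_factor` is a hypothetical helper written for this illustration.

```python
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080), "UHD 4K": (3840, 2160)}
H264_MACROBLOCK = 16  # H.264 codes each picture in 16x16 luma macroblocks


def scale_factor(src: str, dst: str) -> float:
    """Linear upscale factor between two named resolutions with the same aspect ratio."""
    src_w, src_h = RESOLUTIONS[src]
    dst_w, dst_h = RESOLUTIONS[dst]
    if dst_w * src_h != dst_h * src_w:
        raise ValueError("this sketch does not handle aspect-ratio changes")
    return dst_w / src_w


for src in ("720p", "1080p"):
    factor = scale_factor(src, "UHD 4K")
    block = H264_MACROBLOCK * factor
    print(f"{src} -> UHD 4K: {factor:g}x per axis; a 16px macroblock spans {block:g}px")
```

From 720p, a blocking artifact that covered 16x16 pixels covers 48x48 (nine times the area) after the upscale, which is why the team cleans the footage before upscaling rather than after.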

"We found that Nyx, even though it's supposed to reduce noise, actually helped with debanding a lot better than debanding-focused models," Lulla notes. The team's explanation is that Nyx's smoothing treats compression artifacts much like noise patterns, removing banding while preserving edge detail.
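
Nyx's internals are not public, so the sketch below does not reproduce it. It only illustrates the general idea behind the team's explanation: banding is a staircase of small steps, and a smoothing filter that treats those steps like noise turns the staircase back into a ramp. A plain moving average is an assumption chosen for simplicity; unlike an edge-preserving model, it also blurs real edges.

```python
import numpy as np


def box_smooth(signal: np.ndarray, width: int) -> np.ndarray:
    """Moving-average filter; 'valid' mode drops edge samples that would need padding."""
    kernel = np.ones(width) / width
    return np.convolve(signal, kernel, mode="valid")


ramp = np.linspace(0.0, 1.0, 1000)
banded = np.floor(ramp * 16) / 16          # 16 coarse bands, like a heavily compressed sky gradient
smoothed = box_smooth(banded, width=63)    # window roughly one band wide

print("largest step before:", np.max(np.abs(np.diff(banded))))
print("largest step after: ", np.max(np.abs(np.diff(smoothed))))
```

The largest jump between neighboring samples falls from 1/16 of full scale to more than an order of magnitude less, which is the visual effect of debanding.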

Step 2: Upscale with Gaia Model

After artifact repair, the Gaia model handles the spatial upscale from 720p/1080p to 4K. USC selected Gaia specifically for The Bends after testing all available Topaz models, including Proteus, Artemis, Theia, and their variants.

Gaia excels with organic textures and motion consistency, making it ideal for the film's underwater environments and blobfish character. The model outputs 4K 32-bit EXR files that, while still "referenced to 8-bit" in their underlying color information, provide the spatial resolution and file format structure needed for professional workflows.
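
The "referenced to 8-bit" caveat is easy to check: writing 8-bit values into a floating-point container, as an EXR does, changes how each number is stored but not how many distinct values exist. This NumPy sketch uses float32 and float16 arrays as stand-ins for 32-bit and 16-bit (half) EXR channels; it does not read or write actual EXR files.

```python
import numpy as np

codes = np.arange(256, dtype=np.uint8)                      # every value one 8-bit channel can hold
as_float32 = codes.astype(np.float32) / np.float32(255.0)   # same values in a 32-bit float container
as_half = as_float32.astype(np.float16)                     # and in a 16-bit half-float container

print("distinct float32 values:", np.unique(as_float32).size)
print("distinct half values:   ", np.unique(as_half).size)
print("smallest step:", float(np.min(np.diff(as_float32))))  # still about 1/255
```

Both containers hold exactly the original 256 levels per channel, so the upscaled files gain resolution and numeric headroom but no new tonal information until a later step creates it.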

Step 3: HDR Conversion with Hyperion Model

The final step employs Topaz's Hyperion model for SDR-to-HDR conversion, performing inverse tone mapping to expand the color gamut from Rec.709 to Rec.2020 and raise the effective bit depth to true 16-bit HDR, written as 16-bit half-float EXR.
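
Hyperion's internals are not public, but the standard, non-AI part of moving between these color spaces is simple: re-expressing linear Rec.709 RGB in Rec.2020 is a fixed 3x3 matrix (coefficients as published in ITU-R BT.2087). The sketch shows why a matrix alone is not gamut expansion: pure Rec.709 red lands well inside Rec.2020, so the wider gamut stays unused until a content-aware step pushes colors outward.

```python
import numpy as np

# Linear-light Rec.709 -> Rec.2020 conversion matrix (ITU-R BT.2087, four decimal places).
BT709_TO_BT2020 = np.array([
    [0.6274, 0.3293, 0.0433],
    [0.0691, 0.9195, 0.0114],
    [0.0164, 0.0880, 0.8956],
])


def rec709_to_rec2020(rgb_linear: np.ndarray) -> np.ndarray:
    """Convert linear Rec.709 RGB with shape (..., 3) to linear Rec.2020 RGB."""
    return rgb_linear @ BT709_TO_BT2020.T


print(rec709_to_rec2020(np.array([1.0, 0.0, 0.0])))  # pure 709 red: about (0.6274, 0.0691, 0.0164), far from 2020 red
print(rec709_to_rec2020(np.array([1.0, 1.0, 1.0])))  # white stays (approximately) white
```

The matrix applies to linear-light values; gamma-encoded 8-bit pixels have to be linearized first.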

Hyperion uses AI-driven analysis to brighten highlights while recovering shadow detail, expanding the limited SDR range into professional HDR EXR files in the Rec.2020 gamut, which covers about 75.8% of visible colors. The result gives colorists grading latitude approaching, though not equaling, that of traditional camera originals.
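
For intuition about "brightening highlights," here is a deliberately simple global expansion curve. It is not Hyperion's method, which analyzes image content; it applies one fixed curve to every pixel. The assumptions are explicit: SDR values are scaled so diffuse white would sit at 203 nits (the HDR reference white in ITU-R BT.2408), the top of the SDR range is stretched to a 1,000-nit peak, and the knee at 0.8 and the function name are hypothetical.

```python
import numpy as np


def toy_inverse_tone_map(sdr_linear, white_nits=203.0, peak_nits=1000.0, knee=0.8):
    """Map linear SDR values in [0, 1] to luminance in nits with one global curve.

    Below `knee` the mapping is linear (SDR 1.0 would land at `white_nits`).
    Above it, a quadratic term adds up to (peak_nits - white_nits), so SDR 1.0
    reaches `peak_nits` and the curve stays smooth at the knee.
    """
    v = np.clip(np.asarray(sdr_linear, dtype=np.float64), 0.0, 1.0)
    t = np.clip((v - knee) / (1.0 - knee), 0.0, 1.0)  # 0 at or below the knee, 1 at SDR peak
    return v * white_nits + t**2 * (peak_nits - white_nits)


for value in (0.1, 0.5, 0.8, 0.9, 1.0):
    print(f"SDR {value:.1f} -> {float(toy_inverse_tone_map(value)):7.1f} nits")
```

Because the extra term and its slope are both zero at the knee, midtones and shadows pass through unchanged and only the top of the range is stretched; recovering shadow detail would need a separate, content-aware step.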

Lab Results: The Difference Between Broken and Cinema-Ready

The USC team demonstrated their pipeline's effectiveness through direct comparisons during their presentation at Infinity Festival. Side-by-side tests showed clear gains in grading latitude and artifact reduction.

When identical color transforms were applied, the source 8-bit footage fell apart immediately, with crushed highlights and little room to push or pull the image. The processed 16-bit version held together under aggressive grading, leaving far more latitude for creative color work.

Compression artifact analysis using negative space visualization showed the original footage contained extensive staircase patterns and macroblocking. After the three-step process, these artifacts were smoothed and homogenized, though not entirely eliminated.

File format progression: 720p H.264 → cleaned 8-bit → 4K 32-bit EXR → 16-bit HDR EXR

Color space journey: sRGB → Rec.709 (the two share primaries, covering about 35.9% of visible colors) → Rec.2020 (about 75.8%)

Professional compatibility: Final files suitable for DaVinci Resolve, Avid, and other cinema workflows
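
The progression above can be captured as a small, checkable description of the pipeline. This is a hypothetical sketch: the `Stage` fields, stage names, and `check_order` rules are illustrative encodings of the article's ordering (repair at source resolution, then upscale, then HDR), not a Topaz Video AI project format or API. Heights assume a 720p source and UHD 4K output.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Stage:
    name: str
    model: Optional[str]  # Topaz model named in the article; None for the raw source
    output: str           # file format as described in the article
    height: int           # vertical resolution in pixels
    bits: int             # container bit depth
    gamut: str            # sRGB shares Rec.709 primaries, so SDR stages are listed as Rec.709


PIPELINE = [
    Stage("source", None, "720p H.264", 720, 8, "Rec.709"),
    Stage("repair", "Nyx", "cleaned 8-bit", 720, 8, "Rec.709"),
    Stage("upscale", "Gaia", "4K 32-bit EXR", 2160, 32, "Rec.709"),
    Stage("hdr", "Hyperion", "16-bit HDR EXR", 2160, 16, "Rec.2020"),
]


def check_order(stages: List[Stage]) -> List[str]:
    """Return human-readable problems; an empty list means the order follows the article."""
    names = [s.name for s in stages]
    problems = []
    if names.index("repair") > names.index("upscale"):
        problems.append("artifact repair must run before upscaling")
    if stages[names.index("repair")].height != stages[0].height:
        problems.append("artifact repair should run at source resolution")
    if names.index("hdr") < names.index("upscale"):
        problems.append("HDR conversion comes after upscaling")
    return problems


print(check_order(PIPELINE))  # []
```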

FotoKem colorist Alistair confirmed that the processed files behaved like traditional camera footage, accepting film grain integration and supporting conventional grading techniques that were impossible with the original AI output.

Context Check: Where This Fits in the Current AI Video Landscape

USC's elaborate workflow arrives as the AI video generation landscape rapidly evolves. Luma AI's Ray3, released last month, is billed by Luma as the first model to generate native HDR video, supporting 10-bit, 12-bit, and 16-bit workflows with export to the professional ACES2065-1 EXR format.

Ray3's native HDR generation validates USC's approach while potentially eliminating the need for complex multi-step workflows in future projects. However, USC's testing revealed that current native HDR models still exhibit flickering in stable shots and tone mapping issues in professional color grading environments.

"Native HDR generations aren't fully there just yet," Lulla observes. "But what Luma has done has pushed the needle in that direction." The team estimates 1-2 years before native HDR generation reaches theatrical-quality standards without additional processing.

Meanwhile, other quality challenges persist across AI video models: temporal flickering, short clip durations (typically 8-12 seconds per generation), and inconsistency across cuts within a single scene. These limitations require traditional VFX techniques and compositing to achieve the multi-angle coverage and extended sequences needed for narrative filmmaking.

Production Reality Check: The Labor-Intensive Truth Behind AI Filmmaking

The Bends production revealed that current AI video workflows are significantly more labor-intensive than traditional post-production. Each shot required individual model selection and parameter tuning, with some complex emotional expressions demanding 2,000-3,000 generation credits and extensive iteration.

The team developed novel solutions for character consistency challenges. Since blobfish are poorly represented in AI training datasets, they licensed images from an oceanic institute and converted 2D reference materials into 3D models using tools like Meshy. These synthetic datasets enabled custom LoRA (Low-Rank Adaptation) training for the specific character requirements.
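
LoRA keeps character-specific training small: instead of retraining a large weight matrix, it freezes the pretrained weights and learns a low-rank correction. The NumPy sketch below shows the standard formulation (an update scaled by alpha/rank and added to the frozen layer); the layer sizes, rank, and alpha are illustrative and are not values from The Bends.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank, alpha = 1024, 1024, 8, 16.0   # illustrative sizes

W = rng.standard_normal((d_out, d_in))           # pretrained weight, frozen during LoRA training
A = rng.standard_normal((rank, d_in)) * 0.01     # trainable down-projection
B = np.zeros((d_out, rank))                      # trainable up-projection; zero init means no change at step 0


def adapted_forward(x: np.ndarray) -> np.ndarray:
    """Frozen layer plus scaled low-rank update: W x + (alpha / rank) * B (A x)."""
    return W @ x + (alpha / rank) * (B @ (A @ x))


print("full layer parameters:", W.size)
print("LoRA parameters:      ", A.size + B.size)
```

With rank 8, the trainable update has 16,384 parameters versus 1,048,576 in the full layer (about 1.6%), so training touches a small adapter rather than the whole model.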

Multi-action scenes proved particularly challenging. Shots requiring one character to remain static while another swooped through the frame could not be achieved through prompting alone, requiring "heavy use of compositing and Photoshop" with separate layer generation for each element.
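
Layer-by-layer work like this rests on the standard Porter-Duff "over" operation. The sketch below assumes premultiplied-alpha layers (color already multiplied by coverage), the usual convention in compositing software; the layer names and pixel values are hypothetical.

```python
import numpy as np


def over(fg_rgb, fg_alpha, bg_rgb, bg_alpha):
    """Porter-Duff 'over' for premultiplied layers: out = fg + bg * (1 - fg_alpha)."""
    out_rgb = fg_rgb + bg_rgb * (1.0 - fg_alpha[..., None])
    out_alpha = fg_alpha + bg_alpha * (1.0 - fg_alpha)
    return out_rgb, out_alpha


def composite(layers):
    """Composite (premultiplied_rgb, alpha) layers listed bottom to top."""
    rgb, alpha = layers[0]
    for fg_rgb, fg_alpha in layers[1:]:
        rgb, alpha = over(fg_rgb, fg_alpha, rgb, alpha)
    return rgb, alpha


h, w = 2, 2
plate = (np.tile([0.0, 0.0, 1.0], (h, w, 1)), np.ones((h, w)))              # opaque blue background
static_char = (np.tile([0.5, 0.0, 0.0], (h, w, 1)), np.full((h, w), 0.5))  # red at 50% coverage, premultiplied
swooper = (np.zeros((h, w, 3)), np.zeros((h, w)))                          # moving element, off-screen this frame

rgb, alpha = composite([plate, static_char, swooper])
print(rgb[0, 0], alpha[0, 0])
```

The stacking order is explicit: the background plate goes first and each separately generated element is laid over the result, so one character can stay fixed while another's layer changes frame by frame.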

Roberto Schaefer, the project's cinematographer, noted that AI-generated content often looks "too clean" and "plastic looking." The solution involved adding traditional film grain and planning digital diffusion filtering to soften the overly crisp AI aesthetic, the digital counterpart of shooting through diffusion filters such as Hollywood Black Magic or Low Con rather than clean glass.
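
Traditional grain is usually applied from scanned film, but a purely digital approximation shows the principle. In this sketch, which is an assumption and not the production's grain pipeline, zero-mean Gaussian noise is added with its strength faded toward pure black and pure white so those values are not pushed out of range. Real film grain has size and structure that white noise does not capture; the function name and `strength` value are hypothetical.

```python
import numpy as np


def add_synthetic_grain(img: np.ndarray, strength: float = 0.03, seed=None) -> np.ndarray:
    """Add monochrome Gaussian 'grain' to a float RGB image in [0, 1], weighted toward midtones."""
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(img.shape[:-1])[..., None]  # one value per pixel, shared by R, G, B
    luma = img.mean(axis=-1, keepdims=True)                 # simple average as a luminance stand-in
    weight = 4.0 * luma * (1.0 - luma)                      # 1 at mid-grey, 0 at pure black or white
    return np.clip(img + strength * weight * noise, 0.0, 1.0)


frame = np.full((256, 256, 3), 0.5)      # flat mid-grey, "too clean" frame
grainy = add_synthetic_grain(frame, seed=1)
print(float(grainy.std()))               # roughly the chosen strength
```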

Final Frame: Building the Bridge to AI Cinema's Future

USC's Entertainment Technology Center has proven that cinema-ready AI content is achievable today with proper pipeline planning, tool selection, and collaborative workflows between technologists and traditional post-production artists. Their three-step Topaz approach provides a practical blueprint for filmmakers ready to integrate AI-generated content into professional productions.

The work validates two critical insights: current AI video models require sophisticated finishing workflows for theatrical presentation, and the industry is rapidly approaching native professional-quality output. While elaborate multi-step processes may become unnecessary within 1-2 years as models like Ray3 mature, USC's approach demonstrates that the cinema barrier for AI content has been crossed.

As Sahil Lulla and his team prepare to share their full methodology, they've established a reproducible framework that transforms AI video from compelling demos into cinema-ready masters. The question is no longer whether AI-generated content can reach theatrical standards, but how quickly the tools will evolve to make these complex workflows obsolete.