Joey and Addy take readers on a fast-paced tour of this week’s most consequential AI advances for film production, virtual production, game development and creative workflows.

The roundup covers a suspected new Google image model called Nano Banana, a fresh generation of editable world models from Tencent and Skywork, character animation advances from Alibaba, major research and demos from SIGGRAPH (including NVIDIA’s GAIA), prototype VR headsets from Meta, practical workflow tools from fal and Autodesk, and a handful of fun and useful side projects like a color grading game.

This article summarizes those developments, explains their technical and creative implications, and offers a practical lens for filmmakers, VFX artists, and game folks who want to understand where AI is headed.

Nano Banana: the mysterious image model

One of the week’s buzziest items is Nano Banana, an image model that surfaced on LM Arena and quickly trended on social platforms. While not officially confirmed, many suspect it’s a new Google model, either a sibling to or a replacement for Imagen. What makes Nano Banana notable is its world understanding and its ability to make accurate edits from plain-text prompts, without complicated masks or splines.

Examples show impressive consistency: the model can take a portrait and generate a passport-style photo or change head orientation while keeping facial identity intact. Observers noted that it behaves more like a system that “understands” the scene than one that merely blends patterns from its training data. Some speculated that this points to new architectural choices under the hood, possibly alternatives to classical diffusion.

There was also an interesting aside about how quickly the internet reacts: a domain (nanobanana.ai) was purchased, and a quick “Nano Banana” web UI popped up—likely a third-party app using existing APIs. It’s a reminder of how fast entrepreneurial devs can wrap a UX around a trending model and ship a product within a day.

Technical note: diffusion vs. line-scan generation

Some demos hinted at generation methods outside the typical diffusion paradigm: approaches that build an image progressively, more like a line-scan printer filling in rows of pixels, rather than denoising the whole frame at once. If Nano Banana or similar models adopt such methods, it would mark a notable shift in image-generation architecture and could help explain cleaner localized edits and improved consistency.
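To make the contrast between the two generation orders concrete, here is a toy Python sketch. It is illustrative only: `toy_predict` and `toy_denoise` are hypothetical stand-ins for a trained network, and nothing here reflects Nano Banana’s actual architecture, which has not been disclosed.

```python
import random
from typing import Callable

Image = list[list[float]]

def raster_order_generate(height: int, width: int,
                          predict: Callable[[Image, int, int], float]) -> tuple[Image, int]:
    """Line-scan style: fill one pixel per step in row-major order.

    Each pixel is predicted only from pixels that already exist, so the
    number of steps equals the number of pixels.
    """
    image: Image = [[0.0] * width for _ in range(height)]
    steps = 0
    for y in range(height):
        for x in range(width):
            image[y][x] = predict(image, y, x)
            steps += 1
    return image, steps

def diffusion_style_generate(height: int, width: int,
                             denoise: Callable[[float, int], float],
                             num_steps: int, seed: int = 0) -> tuple[Image, int]:
    """Diffusion style: start from noise and refine every pixel at every step."""
    rng = random.Random(seed)
    image: Image = [[rng.random() for _ in range(width)] for _ in range(height)]
    for t in range(num_steps, 0, -1):  # count down from the noisiest step
        image = [[denoise(v, t) for v in row] for row in image]
    return image, num_steps

# Hypothetical stand-ins for a trained model.
def toy_predict(image: Image, y: int, x: int) -> float:
    # Average of the already-generated left and upper neighbours (0.5 at the corner).
    neighbours = []
    if x > 0:
        neighbours.append(image[y][x - 1])
    if y > 0:
        neighbours.append(image[y - 1][x])
    return sum(neighbours) / len(neighbours) if neighbours else 0.5

def toy_denoise(value: float, t: int) -> float:
    # Move a fraction 1/t of the way toward a clean value of 0.5; at t == 1 it lands on it.
    return value + (0.5 - value) / t

ar_image, ar_steps = raster_order_generate(4, 6, toy_predict)  # 24 steps, one pixel each
df_image, df_steps = diffusion_style_generate(4, 6, toy_denoise, num_steps=8)  # 8 steps, all pixels each
```

The design point is the ordering: a raster-order model commits to every earlier pixel as it goes, while a diffusion model revisits the entire frame at each step.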

World models: from Genie 3 to Yan and Matrix-Game 2.0

After the attention around Genie 3, a slew of world model announcements shows the space is heating up. Two major entries to watch are Tencent’s Yan and Skywork’s Matrix-Game 2.0.

Yan (Tencent): edit the world in real-time

Yan markets itself as a foundational framework for interactive video generation and comprises three modules: YanSim (simulation), YanGen (generation from text and images), and YanEdit (real-time editing). Key features include:

Persistent worlds: what Yan generates can remember previously created objects and states when revisited.

Real-time multi-granularity editing: add walls, change styles, or adjust structure while inside the world.

Performance claims: 1080p at 60fps for game applications, which positions Yan toward AAA use-cases.
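For a sense of what the 1080p/60 fps claim demands, the arithmetic below computes the per-frame time budget and raw pixel throughput. It is plain arithmetic on the claimed figures, not a measurement of Yan.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps

def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw output pixels the generator must deliver each second."""
    return width * height * fps

budget = frame_budget_ms(60)                     # about 16.7 ms per frame
throughput = pixels_per_second(1920, 1080, 60)   # 124,416,000 pixels per second
print(f"{budget:.1f} ms per frame, {throughput:,} pixels/s")
```

To sustain 60 fps, the pipeline has to emit a finished frame roughly every 16.7 ms on average, which is why low-latency decoding matters so much for real-time world models.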

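Perhaps the most interesting technical detail is the use of a 3D Variational Autoencoder (3D VAE). It is tempting to read this as decoding to 3D assets that blend into traditional pipelines like Unreal, Unity, or Maya. In video-generation models, though, a 3D VAE usually compresses video across space and time (height, width, and frames) into a compact latent rather than producing geometry, and that compression is part of what makes real-time frame rates feasible. A bridge from latent-space generation to mesh-compatible outputs would still be a game-changer for persistent worlds and for integrating AI-generated content into existing production pipelines, but it would be a step beyond what the VAE itself provides.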
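In video-generation models, a 3D VAE commonly compresses across space and time. To illustrate what that does to data size, the sketch below computes a latent shape for a 1080p clip. The compression factors (4x in time, 8x in each spatial dimension, 16 latent channels, with the first frame encoded on its own) are assumptions modeled on several open video VAEs, not Yan’s published configuration.

```python
def video_latent_shape(frames: int, height: int, width: int,
                       t_down: int = 4, s_down: int = 8,
                       latent_channels: int = 16) -> tuple[int, int, int, int]:
    """Return (channels, latent_frames, latent_height, latent_width).

    Assumes a causal video VAE that encodes the first frame on its own and
    every following group of `t_down` frames into one latent frame.
    """
    if (frames - 1) % t_down:
        raise ValueError("frames - 1 must be divisible by t_down")
    if height % s_down or width % s_down:
        raise ValueError("height and width must be divisible by s_down")
    latent_frames = 1 + (frames - 1) // t_down
    return (latent_channels, latent_frames, height // s_down, width // s_down)

def compression_ratio(frames: int, height: int, width: int, **kwargs: int) -> float:
    """Number of RGB pixel values divided by number of latent values."""
    c, f, h, w = video_latent_shape(frames, height, width, **kwargs)
    return (frames * height * width * 3) / (c * f * h * w)

print(video_latent_shape(61, 1080, 1920))   # (16, 16, 135, 240)
print(compression_ratio(61, 1080, 1920))    # 45.75
```

Under these assumed factors, the generator works on about 46 times fewer values than the raw frames, which helps explain why aggressive latent compression sits at the center of real-time claims.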

Skywork’s Matrix-Game 2.0: open-source long-sequence interactive worlds

Skywork’s Matrix-Game 2.0 claims to be the first open-source real-time long-sequence interactive world model—designed to generate minutes of continuous interactive video at ~25 fps. One of the limitations with earlier world models (including early Genie 3 demos) was sequence length and memory persistence. Skywork aims to stretch those bounds and provide a tool that runs for longer continuous sequences while remaining interactive.
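One reason sequence length and memory persistence are hard is that many autoregressive video models condition each new frame on a bounded window of recent frames to keep compute flat. The sketch below is a generic illustration of that trade-off using a hypothetical 16-frame window; it is not Matrix-Game 2.0’s actual memory mechanism.

```python
from collections import deque

def frames_for(minutes: float, fps: int) -> int:
    """Number of frames in a continuous clip of the given length."""
    return int(minutes * 60 * fps)

class RollingContext:
    """Keeps only the most recent `window` frames as conditioning context."""

    def __init__(self, window: int) -> None:
        self.frames: deque[int] = deque(maxlen=window)

    def add(self, frame_id: int) -> None:
        # Appending past maxlen silently evicts the oldest frame.
        self.frames.append(frame_id)

    def remembers(self, frame_id: int) -> bool:
        return frame_id in self.frames

total = frames_for(5, 25)          # five minutes at ~25 fps -> 7,500 frames
ctx = RollingContext(window=16)    # hypothetical window size
for frame_id in range(total):
    ctx.add(frame_id)

print(total, ctx.remembers(0), ctx.remembers(total - 1))  # 7500 False True
```

Anything that scrolls out of the window, such as a building generated in the first minute, can only reappear if the model re-infers it; longer windows, memory banks, or explicit world state are among the approaches used to push that limit.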

Open-source releases like this matter because they democratize experimentation and accelerate adoption. They also give researchers and developers a shared baseline to innovate on—resulting in faster productization.

Hunyuan GameCraft and Chinese momentum

Tencent’s Hunyuan GameCraft was also open-sourced this week. China’s big players (Tencent, Alibaba, ByteDance) are rapidly producing solid models, often releasing them freely to encourage adoption. The strategy appears to be wide distribution followed by integration into platforms, for example content pipelines inside short-video apps. Several AI researchers have noted the technical strength of these Chinese models, and open-weight models like Alibaba’s Wan 2.2, which can be run locally via ComfyUI, continue to gain traction for cost-effective video generation workflows.

Character animation: FantasyPortrait and multi-character driving

Alibaba’s FantasyPortrait aims at a persistent pain point: multi-character portrait animation. While many character-driving tools handle a single face well, compositing two or more driven faces usually requires separate passes and traditional compositing. FantasyPortrait demonstrates multi-character portrait animation from both image and video driving sources, and the researchers published code on GitHub.

Practical implications:

Interviews, debates, and split-screen scenes where multiple talking heads must be animated could be driven in a single pass.

It broadens possibilities for controlled, stylistic portrait animations and even for animals (talking pets), but also heightens deepfake concerns.

SIGGRAPH highlights: NVIDIA’s GAIA, Cosmos, and generative avatars

SIGGRAPH remains a critical conference because research papers shown there often translate into product features months later. This year’s highlights included NVIDIA’s updates:

Cosmos: a world model focused on automation, infrastructure, and robotics (autonomous vehicles, physical AI).

GAIA: a generative animatable avatar pipeline built on Gaussian splats that lets users adjust sliders to control expressions, parallax, and perspective. The results are impressive and hint at more lifelike volumetric avatars.

GAIA in particular suggests near-term applications for higher-quality video conferencing, metaverse avatars, and lightweight digital humans for live streaming and crowd generation. While rigged, skinned, and simulation-driven digital humans will remain the standard for major character work, GAIA-level approaches are likely to be useful for background characters, streaming avatars, and immersive conferencing.
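The slider interaction can be pictured with a simple linear model in which each slider adds a scaled offset to the centers of the Gaussians. This is a hypothetical sketch: the `Gaussian` type, the slider names, and the blendshape-style linear offsets are assumptions for illustration, and the source does not say how GAIA actually conditions its splats.

```python
from dataclasses import dataclass, replace

Vec3 = tuple[float, float, float]

@dataclass(frozen=True)
class Gaussian:
    center: Vec3
    scale: Vec3
    opacity: float

def apply_sliders(gaussians: list[Gaussian],
                  offsets: dict[str, list[Vec3]],
                  sliders: dict[str, float]) -> list[Gaussian]:
    """Move each Gaussian's center by the weighted sum of per-slider offsets.

    offsets[name][i] is how far Gaussian i moves when slider `name` is at 1.0.
    Scale and opacity are left untouched in this simplified model.
    """
    posed = []
    for i, g in enumerate(gaussians):
        x, y, z = g.center
        for name, weight in sliders.items():
            dx, dy, dz = offsets[name][i]
            x, y, z = x + weight * dx, y + weight * dy, z + weight * dz
        posed.append(replace(g, center=(x, y, z)))
    return posed

neutral = [Gaussian(center=(0.0, 0.0, 0.0), scale=(0.01, 0.01, 0.01), opacity=0.9)]
offsets = {"smile": [(0.0, 1.0, 0.0)], "brow_raise": [(0.0, 0.0, 2.0)]}
posed = apply_sliders(neutral, offsets, {"smile": 0.5, "brow_raise": 0.25})
print(posed[0].center)  # (0.0, 0.5, 0.5)
```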

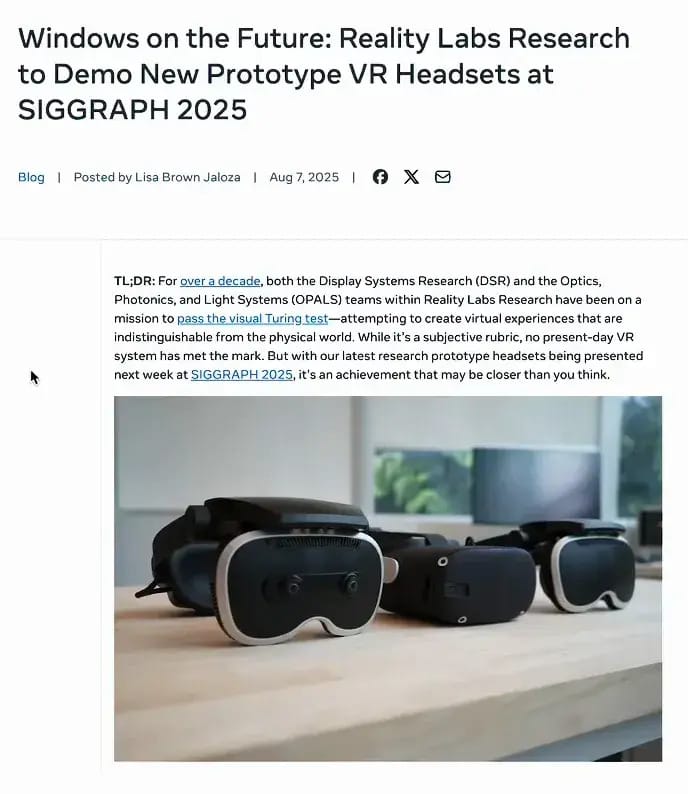

VR hardware: Meta’s Tiramisu & Boba prototypes

Meta unveiled prototype headsets, Tiramisu and Boba, claiming between them substantially higher contrast, a wider field of view, and higher pixel density per degree. The industry’s long-term goal remains “retina” resolution in a headset, meaning individual pixels are indistinguishable to the eye; this is commonly pegged at about 60 pixels per degree, roughly the limit of 20/20 visual acuity. Across a wide field of view and at short optical distances, that implies extreme pixel counts (hypothetically 8K to 16K per eye), so these demos remain prototypes for now.
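The pixel counts follow from simple angular arithmetic. Assuming pixels are spread evenly across the field of view (real headset optics are not uniform), the horizontal resolution needed is pixels per degree times degrees of view. The 60 PPD target and the field-of-view values below are illustrative inputs, not specs of Meta’s prototypes.

```python
def pixels_needed(pixels_per_degree: float, fov_degrees: float) -> int:
    """Horizontal pixels per eye for a target angular resolution (uniform-spread approximation)."""
    return round(pixels_per_degree * fov_degrees)

def pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    """Average angular resolution a panel delivers across the field of view."""
    return horizontal_pixels / fov_degrees

RETINA_PPD = 60  # 1 arcminute per pixel, roughly the limit of 20/20 acuity

for fov in (100, 130, 180):
    print(f"{fov} deg FOV at {RETINA_PPD} PPD -> {pixels_needed(RETINA_PPD, fov):,} px wide per eye")
```

A 180-degree view at that density needs about 10,800 pixels across each eye, well beyond the 7,680-pixel width of an 8K UHD panel, which is why estimates climb toward the 16K range.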

If paired with sophisticated volumetric avatar tech like GAIA, these headsets could reshape remote collaboration (think Project Starline-level immersion available to more users), but practical adoption remains constrained by comfort, ergonomics, and cost.

Tools and workflow updates: SkyReels, Pika, fal Workflows 2.0, and Autodesk Flow Studio

On the tooling side, several updates are worth noting:

SkyReels: another audio-to-character animation system with mixed demo quality; worth keeping on the radar for projects that need longer sequences.

Pika: rolling out character animation updates in its app, aimed at mobile and social creators rather than desktop pro users.

fal Workflows 2.0: a canvas-based editor for building drag-and-drop, node-based workflows that connect fal-hosted models. It fills a gap between technical node environments like ComfyUI and accessible cloud workflows.

Autodesk Flow Studio (formerly Wonder Dynamics): now a freemium offering in Autodesk’s lineup. A free tier with limited monthly credits makes body and character replacement and rigging-based workflows more accessible, and tiered pricing addresses adoption and recurring-revenue concerns.
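Node-based canvases like these generally execute a directed acyclic graph: each node runs once all of its upstream nodes have produced outputs. The sketch below shows that execution model with Python’s standard `graphlib`; the node names and lambda stand-ins are hypothetical and do not use fal’s or ComfyUI’s actual APIs.

```python
from graphlib import TopologicalSorter
from typing import Callable

def run_workflow(nodes: dict[str, Callable[..., str]],
                 edges: dict[str, list[str]]) -> dict[str, str]:
    """Run every node after its upstream nodes, passing their outputs as arguments.

    `edges` maps a node name to the list of node names it depends on, in
    argument order. TopologicalSorter raises CycleError if the graph has a loop.
    """
    results: dict[str, str] = {}
    for name in TopologicalSorter(edges).static_order():
        upstream = [results[dep] for dep in edges.get(name, [])]
        results[name] = nodes[name](*upstream)
    return results

# Hypothetical stand-ins for hosted model calls.
nodes = {
    "prompt": lambda: "a lighthouse at dusk",
    "style": lambda: "watercolor",
    "image_gen": lambda p, s: f"image({p}, {s})",
    "upscale": lambda img: f"upscaled({img})",
}
edges = {"image_gen": ["prompt", "style"], "upscale": ["image_gen"]}

print(run_workflow(nodes, edges)["upscale"])
```

Declaring dependencies instead of a fixed sequence is what lets a canvas add, remove, or rewire nodes without rewriting the whole pipeline.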

These tools show the industry moving toward lower barriers to entry: canvas UIs, cloud APIs, and productized AI workflows that let creators iterate rapidly without managing GPU infrastructure.

Small but practical updates: Gemini memory and Match the Grade

Google’s Gemini rolled out a memory feature that persists context across sessions; Claude added similar memory last week. Memory transforms chat-based workflows from ephemeral Q&A into true project assistants that remember ongoing constraints, preferences, and prior work—critical for long-term production workflows and creative continuity.
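Conceptually, cross-session memory means persisting a small store of facts outside the conversation and injecting it back as context when a new session starts. The sketch below shows that idea with a JSON file; the `ProjectMemory` class, its file format, and the example facts are hypothetical, and this is not how Gemini or Claude implement their memory features.

```python
import json
import tempfile
from pathlib import Path

class ProjectMemory:
    """Tiny key-value memory that survives across sessions via a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Load facts saved by earlier sessions, if any.
        self.facts: dict[str, str] = (
            json.loads(path.read_text()) if path.exists() else {}
        )

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value
        self.path.write_text(json.dumps(self.facts, indent=2))  # persist immediately

    def as_context(self) -> str:
        """Format stored facts as a preamble to prepend to a new session's prompt."""
        return "\n".join(f"- {k}: {v}" for k, v in sorted(self.facts.items()))

def demo() -> str:
    with tempfile.TemporaryDirectory() as tmp:
        store = Path(tmp) / "memory.json"
        # Session 1: the user states project constraints.
        session_one = ProjectMemory(store)
        session_one.remember("aspect_ratio", "2.39:1")
        session_one.remember("look", "warm tungsten interiors")
        # Session 2: a fresh object reloads them from disk.
        return ProjectMemory(store).as_context()

print(demo())
```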

On the lighter side, colorist Tobia Montanari Lughi released Match the Grade, a little game that challenges users to match a target color grade using sliders—great training for colorists and for anyone who wants to sharpen grading instincts. It’s a small example of a well-targeted tool that trains practical skills and is enjoyable enough to encourage repeated practice.
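A game like this needs a grade model and a distance score. As a hypothetical sketch (the source does not describe Match the Grade’s controls or scoring), the code below applies the ASC CDL slope/offset/power formula to grayscale sample values and scores a guess by its mean absolute difference from the target.

```python
Grade = tuple[float, float, float]  # (slope, offset, power)

def apply_cdl(value: float, grade: Grade) -> float:
    """ASC CDL-style transfer: (value * slope + offset) ** power."""
    slope, offset, power = grade
    v = value * slope + offset
    v = min(max(v, 0.0), 1.0)  # clamp so the power step never sees a negative value
    return v ** power

def grade_score(samples: list[float], target: Grade, guess: Grade) -> float:
    """100 means a perfect match; each 0.01 of mean absolute error costs one point."""
    error = sum(abs(apply_cdl(s, target) - apply_cdl(s, guess)) for s in samples) / len(samples)
    return round(100 * (1 - error), 1)

samples = [0.05, 0.18, 0.5, 0.82, 0.95]  # shadows through highlights
target = (1.1, -0.02, 0.9)               # hypothetical target grade
print(grade_score(samples, target, target))           # 100.0
print(grade_score(samples, target, (1.0, 0.0, 1.0)))  # a neutral guess scores lower
```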