In this week’s episode of Denoised, Addy and Joey break down Seedream 4.0, ByteDance’s latest image model, and compare it to the Nano Banana workflow that many filmmakers have leaned on. The roundup then moves through avatar video tools, ComfyUI’s new cloud offering, an enterprise-ready music model, new cameras from RED and Nikon, a major acquisition, and a virtual production pipeline partnership worth noting.

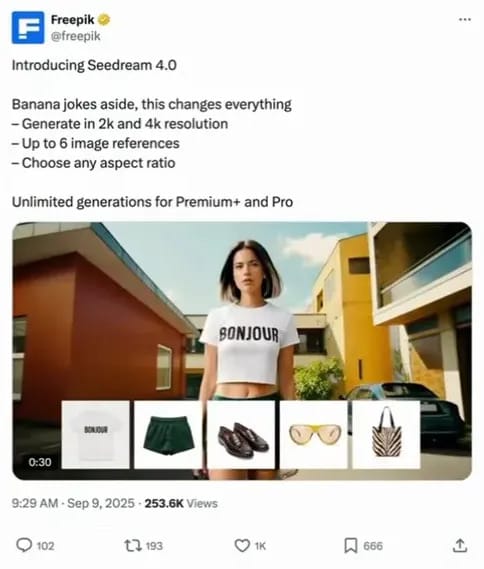

Seedream 4.0 from ByteDance

Seedream 4.0 landed with several practical features that matter to filmmakers and content creators. Joey highlights the model’s strong adherence to text-prompt edits, its support for up to six reference images, and the option to generate 2K and 4K outputs. Together, the higher resolutions and multi-image referencing make it easier to iterate toward consistent visuals.

Addy also calls out Dreamina, ByteDance’s web portal for its models. For users who want to work with ByteDance models through a browser rather than an API, Dreamina is the central access point.

Why it matters

Higher-resolution outputs (2K to 4K) reduce the gap between quick concept renders and usable production visuals.

Multiple reference-image inputs let filmmakers push for more consistent character and environment renders.

The web portal reduces friction for non-developers who want to test models without wiring up APIs.

Seedream vs. Nano Banana

The hosts compare Seedream to Nano Banana, and Joey describes mixed results: sometimes Seedream did the job, but sometimes Nano Banana still produced more consistent 3D spatial results. In their tests, Nano Banana kept geometry and on-screen details sharper, while Seedream excelled at batch generation, producing many variations from the same latent space so that the images within a single batch stay consistent with one another.

Joey emphasizes the practical difference: Nano Banana felt stronger when the task required consistent 3D composition across shots (for example, maintaining geography and perspective for over-the-shoulder shots). Seedream’s ability to generate multiple outputs in one batch made it useful for ideation, storyboarding, and synthetic-data generation where consistent variants are required.

Key takeaway

Use Nano Banana for spatial consistency and fine detail (like readable computer screens).

Use Seedream for fast ideation and generating many consistent thumbnails in a single pass.

VEED Fabric 1.0 — Talking Avatars

VEED has introduced Fabric 1.0, a talking-avatar model that turns a single image and an audio track into talking videos up to a minute long. Joey compares it with existing tools in the user-generated content and explainer-video space and finds the output quality on par with offerings like HeyGen.

The hosts point out that these avatar tools target short-form creators and marketing use cases: B-roll, explainer scenes, or quick spokesperson clips. VEED’s broader browser-based editing suite (captions, audio cleanup) makes Fabric a natural fit for creators who already use VEED for rapid social production.

Practical notes

Expect simple workflows: upload an image, supply audio, and get a talking clip back.

Performance-driven puppeteering (capturing a human actor and using that performance to drive an avatar) is still more advanced in other tools like Runway, which can map real human performance to an avatar’s motion.

Comfy Cloud — ComfyUI in the Cloud

ComfyUI announced Comfy Cloud, which runs ComfyUI in a cloud environment. Joey frames this as the logical next step for node-based, visual AI pipelines, especially for users who lack high-end local GPUs or need to scale compute on demand.

Comfy Cloud gives users access to large GPU backends (H100-class machines), shared nodes, and prebuilt community workflows, without the local-storage headaches that come with large model files. The hosts note that pricing and storage details are still unclear, but stress the value: an easier on-ramp to complex node setups and access to nodes others have built.

Why filmmakers should care

Anyone running multi-step AI workflows (pose tracking, camera tracking, masking, color transforms) benefits from cloud GPUs and shared nodes.

Comfy’s node library is useful for rapid prototyping of VFX and concept pipelines.

Comfy Cloud reduces the hardware barrier for teams or solo creators who need scale temporarily.

Stable Audio 2.5 from Stability AI

Stable Audio 2.5 arrives as an audio model trained on licensed music, designed for professional and enterprise use. Joey highlights that the model is tuned to produce production-ready background music quickly and supports audio in-painting (editing an intro, middle, or outro of a generated track).

Generation is fast (a 30-second track can be produced in seconds), which makes it practical for filling out video timelines with tailored music. The hosts note that Stable Audio is currently delivered via API and cloud services rather than as a downloadable local model, though they suggest it may be lightweight enough to run locally in the future.

How production teams can use it

Quickly generate tailored background music for rough cuts and temp tracks.

Use audio in-painting to refine segments without redoing entire soundbeds.

For documentary or longform work, Stable Audio’s musician-focused prompt vocabulary supports precise musical direction (tempo, instrumentation, phrasing).

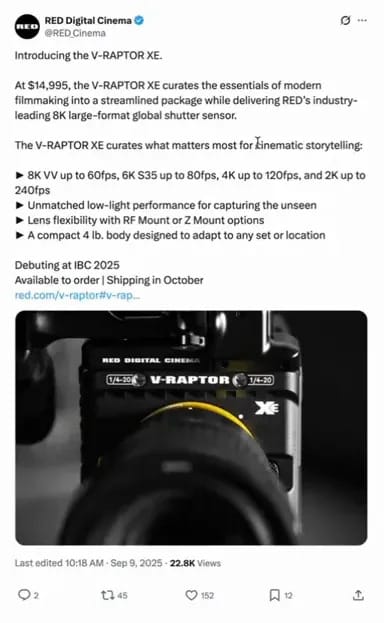

RED V-RAPTOR XE

RED added the V-RAPTOR XE, a lighter 4-pound variant priced just under $15,000. Joey explains that RED’s lineup now spans everything from high-end feature cameras to compact, field-ready bodies: three V-RAPTOR sizes plus Komodo variants.

The XE keeps RED’s sensor performance but in a smaller, more affordable package. Joey positions it as a bridge between A-cam quality and run-and-gun accessibility—suitable for drone or vehicle mounts where weight and footprint matter. RED also adopted Z-mounts on recent bodies, improving lens compatibility for cinematographers invested in Nikon glass.

Filmmaker implications

Smaller RED bodies mean crews can access RED color science and raw formats without the logistical footprint of larger rigs.

High-frame-rate options (for example 240fps at 2K) and improved low-light performance remain key differentiators against smaller cinema cameras.

Nikon ZR and compact cinema cameras

Nikon released the ZR, its first camera since acquiring RED, and Canon responded with smaller C-series bodies in roughly the same handheld form factor. Joey notes that these cameras target the compact cinema market alongside Sony’s FX3: small bodies with cinema features like genlock and internal RAW options.

These bodies are practical choices for mixed crews who need a compact primary or B-camera that still integrates into color-managed, RAW-based pipelines.

Bending Spoons Acquires Vimeo

Bending Spoons confirmed its acquisition of Vimeo for $1.38 billion. The hosts unpack the deal and suggest its value lies less in Vimeo’s consumer-facing business and more in its video-delivery technology, live-streaming stack, and CDN capabilities.

The hosts point out that Bending Spoons already owns a slate of app and utility businesses. For filmmakers and platform operators, the move signals that consolidators see value in video infrastructure and content tools.

What to watch for

How the new ownership repackages Vimeo’s products: platform, live streaming, and studio tools.

Potential changes to pricing or enterprise focus that could affect creators who use Vimeo as a portfolio and delivery tool.

Volinga and XGRIDS Partnership — Scanning to Unreal

The episode closes on a virtual production pipeline update. Volinga’s Unreal plugin now integrates with XGRIDS’ PortalCam scanner, turning LiDAR and RGB captures into Gaussian splats and bringing them into Unreal. Joey frames this as a practical bridge: capture, color-manage, then push to an LED volume for virtual production.

Color management and depth-aware capture matter here: consistent input leads to predictable output on LED walls, and depth plus point-cloud data make the splat-based assets more useful for interactive and live setups.

Practical benefit

Remote or location scans can be stitched into Unreal with color fidelity and depth info, shortening the time from capture to usable in-camera backgrounds.

Teams running LED volume shoots get a more reliable starting point for photoreal background plates.

Closing thoughts

The episode underscores a pattern: tools are getting easier to access (cloud UIs, web portals, small cinema bodies), while the workflows that matter most to filmmakers—consistent spatial outputs, performance-driven puppeteering, color-managed capture—remain the key differentiators.