In this week’s episode of Denoised, Addy and Joey break down Kling O1’s multimodal video-to-video capabilities and how it stacks up against Runway Aleph and other tools. The hosts then move through a fast roster of releases—Kling 2.6, Alibaba’s Z-image, Runway Gen-4.5, Seedream 4.5, LTX Retake, FLUX.2, and TwelveLabs’ Marengo 3.0.

Kling Omni O1: video modification that feels more usable

Kling O1 (Omni) arrives as a true multimodal video-to-video tool. It accepts existing footage and lets users direct edits—remove objects, swap people, change background scenery, or composite new elements from still images. Upload limits are reported at 200 MB and 2K inputs. Outputs appear to be native 1080p or close to it, which matters because many models still effectively deliver upscaled 720p results.

The hosts emphasize that Kling’s outputs look sharper and more consistent than some earlier tools. For filmmakers who need controlled modifications of existing shots, such as object removal or plate replacements, Kling is now among the most applicable options alongside Aleph and Luma Modify.

What this means for productions

Practical VFX in post: Useful for insert fixes and localized changes without re-shooting entire plates.

Resolution caveat: Expect compressed, delivery-ready clips—still not equivalent to raw camera files for high-end color workflows.

Workflow tip: Use Kling when you want precise modifications to an existing take rather than generating new scenes from scratch.
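Before sending footage to a modify tool, a quick preflight against the reported upload limits can save a failed upload. The following is a minimal sketch, not Kling's actual validation logic: the `Clip` type and `preflight` function are hypothetical, "200 MB" is assumed to mean decimal megabytes, and "2K" is assumed to mean a 2048-pixel long edge.

```python
from dataclasses import dataclass

# Assumptions, not published specs: "200 MB" read as decimal megabytes,
# "2K" read as a long edge of at most 2048 pixels.
MAX_BYTES = 200 * 1000 * 1000
MAX_LONG_EDGE = 2048


@dataclass
class Clip:
    """Hypothetical description of a source clip."""
    size_bytes: int
    width: int
    height: int


def preflight(clip: Clip) -> list[str]:
    """Return a list of reasons the clip would exceed the assumed limits."""
    problems = []
    if clip.size_bytes > MAX_BYTES:
        problems.append("file too large")
    if max(clip.width, clip.height) > MAX_LONG_EDGE:
        problems.append("resolution too high")
    return problems


if __name__ == "__main__":
    hd_clip = Clip(size_bytes=150_000_000, width=1920, height=1080)
    uhd_clip = Clip(size_bytes=250_000_000, width=3840, height=2160)
    print(preflight(hd_clip))
    print(preflight(uhd_clip))
```

An empty list means the clip passes under these assumptions; anything else signals that a transcode or downscale is needed first.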

Kling 2.6 and audio generation

Kling 2.6 adds audio generation to the toolkit. Early demos show it can synthesize character voice lines, but the hosts note an uncanny valley in some examples. Voices are workable for rough cuts and concept demos, but not yet a substitute for performance capture or professional ADR.

Filmmaker takeaway

Audio generation is arriving, but filmmakers should treat it as a draft tool. Use it for temp tracks and early rehearsals. Always plan human-in-the-loop steps for final voice work.

Video models are splitting into two camps

The conversation highlights a current fork across video models: one path focuses on text-to-video and image-to-video generation (Gen-4.5, Veo 3.1, etc.), the other focuses on modifying existing footage (Kling O1, Luma Modify, Aleph). Each approach has distinct uses in production. Generation is great for concept visuals, previs, and new shots. Modify workflows are immediately practical for VFX fixes and editorial timing adjustments but bring compression and fidelity tradeoffs.

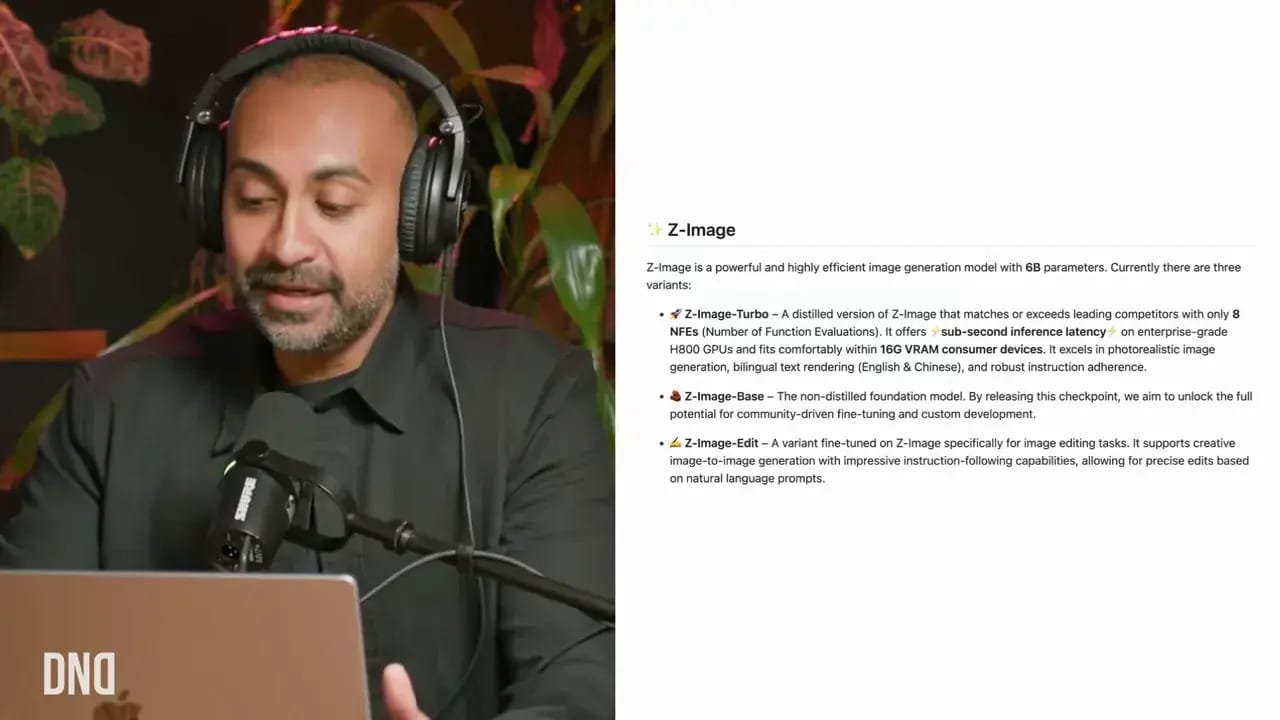

Z-image (Alibaba): an open-source SDXL successor

Alibaba’s Z-image is being embraced as an open-source alternative that gives creators the same kind of extensibility SDXL once offered. The model comes in three variants: Turbo (distilled, consumer friendly), Base (foundation), and Edit (image editing). It is already appearing across ComfyUI, Replicate, and cloud providers.

Notable workflows include fine-detail enhancement and targeted image modification. The hosts point out that Z-image can be used as a second-pass filter to remove the plasticky sheen found in some AI outputs, improving skin and material realism more effectively than naive upscalers.

Why filmmakers should care

Open tuning: Run local experiments, build and share LoRAs, and integrate the model into custom pipelines.

Lightweight options: Turbo enables experimentation on lower-end GPUs, lowering the barrier for offline testing.

Use case: Image cleanup, plate enhancement, and asset prep for look development.

Runway Gen-4.5: better physics, still a UX question

Runway’s Gen-4.5 shows improved real-world physics and reflection handling in demo reels: shots such as mirror flips and paint interactions are getting more believable. The hosts point out the ambition behind Runway’s product roadmap: the company frames this work as building a broader world model that could one day power many forms of media and interaction.

On the flip side, Runway still requires more generation attempts for usable outputs compared with some competitors. For filmmakers prioritizing first-pass efficiency, other models may deliver faster results.

Seedream 4.5: batch consistency and 4K output

ByteDance’s Seedream 4.5 doubles down on multi-image batch generation. The key differentiator is producing multiple images in the same latent space in a single pass, which simplifies creating consistent character sheets, shot variations, and multi-view assets without stitching disparate generations together.

The model also supports 4K-native outputs, which matters when pixel-level detail is required for marketing stills or close-up VFX elements.

How creators will use it

Character and look dev: Generate multiple consistent angles and expressions in a single generation.

E-commerce and product photography: Preserve small text and material details for catalogs.

LTX Retake: in-place clip edits

LTX introduced a Retake feature that allows marking an in and out point on an existing clip and regenerating only that segment, leaving the surrounding footage intact. This is a targeted inpainting workflow inside the timeline, enabling micro-adjustments—tightening pacing, rephrasing delivery, or swapping a brief action—without reworking whole takes.

The hosts flag quality limitations in early demos and raise the obvious ethical point about altering performances. Technically, however, Retake reduces editorial friction and could save production time when directors want to tweak timing or delivery in post.
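The in and out marking described above comes down to frame arithmetic: everything before the in point and after the out point is kept, and only the span between them is regenerated. Here is a minimal sketch of that split, assuming a constant frame rate and half-open frame ranges. The function `retake_ranges` is hypothetical and does not reflect LTX's actual interface.

```python
def retake_ranges(total_frames: int, fps: float, in_sec: float, out_sec: float) -> dict:
    """Split a clip into kept and regenerated frame ranges.

    Ranges are half-open (start, end) pairs of frame indices.
    Hypothetical helper for illustration; assumes a constant frame rate.
    """
    if not 0 <= in_sec < out_sec:
        raise ValueError("in point must be non-negative and before the out point")

    in_frame = round(in_sec * fps)
    if in_frame >= total_frames:
        raise ValueError("in point falls outside the clip")

    # Clamp the out point so it never runs past the end of the clip.
    out_frame = min(round(out_sec * fps), total_frames)

    return {
        "keep_head": (0, in_frame),
        "regenerate": (in_frame, out_frame),
        "keep_tail": (out_frame, total_frames),
    }


if __name__ == "__main__":
    # A 10-second clip at 24 fps, retaking the span from 2.0 s to 3.5 s.
    print(retake_ranges(total_frames=240, fps=24, in_sec=2.0, out_sec=3.5))
```

In this example only 36 of the 240 frames are regenerated, which is why a segment-level retake is cheaper than rerunning a whole take.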

FLUX.2 from Black Forest Labs: a heavy, high-fidelity option

FLUX.2 aims at the same high-fidelity space as some proprietary models. The main drawback for smaller teams is model size and inference requirements: FLUX.2 Pro can reportedly demand 90 gigabytes of VRAM, which is more than a single 80 GB A100 holds and effectively relegates it to multi-GPU or larger-memory cloud instances unless the model is quantized or offloaded. Despite that, early comparisons place its image-modification quality near top-tier paid models.

For facilities that can afford cloud GPU time and want deep customization through LoRAs and custom pipelines, FLUX.2 is worth testing as a near-proprietary-quality open architecture.
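To see why memory requirements of this size push teams toward the cloud, it helps to run the back-of-the-envelope arithmetic: weight memory is roughly the parameter count times the bytes per parameter, before activations and auxiliary components are counted. The sketch below is illustrative only. The 32-billion parameter count is a hypothetical input, not a confirmed FLUX.2 spec, and the helper names are made up for this example.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(params_billions: float, dtype: str) -> float:
    """Approximate weight memory in decimal GB (weights only, no activations)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits(required_gb: float, gpu_gb: float, gpu_count: int = 1) -> bool:
    """Check whether a requirement fits in the combined memory of the GPUs."""
    return required_gb <= gpu_gb * gpu_count


if __name__ == "__main__":
    # Hypothetical model size, chosen only to illustrate the arithmetic.
    params_billions = 32
    for dtype in ("fp32", "bf16", "fp8"):
        print(dtype, weight_memory_gb(params_billions, dtype), "GB")

    # The 90 GB figure quoted in the episode versus common card sizes.
    print("single 24 GB card:", fits(90, 24))
    print("single 80 GB card:", fits(90, 80))
    print("two 80 GB cards:", fits(90, 80, gpu_count=2))
```

The same arithmetic shows why quantized variants matter for smaller teams: halving the bytes per parameter halves the weight footprint.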

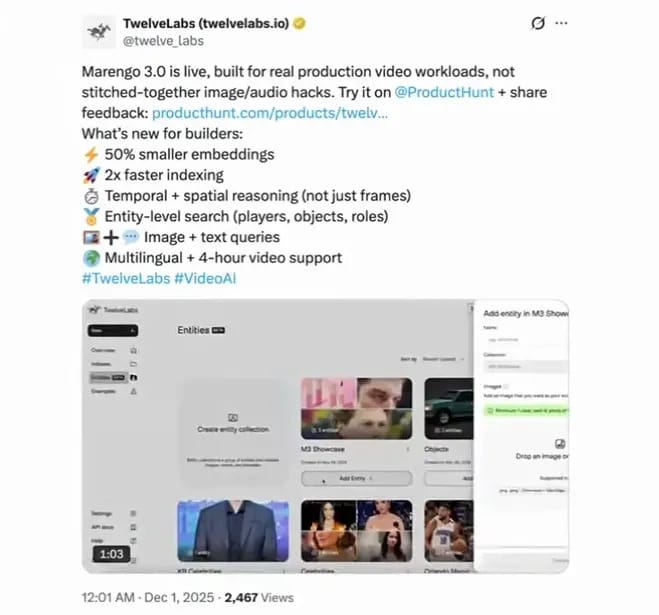

TwelveLabs Marengo 3.0: better eyes on your library

TwelveLabs released Marengo 3.0, a video-understanding model focused on smaller embeddings, faster indexing, and temporal and spatial reasoning. Where generative models create new content, Marengo helps teams extract value from existing video libraries—searching, contextual indexing, and enabling agent-like workflows that require an understanding of motion, objects, and on-screen text.

This class of model becomes the “eyes and brain” for production tooling: searchable archives, editorial assistants, and media asset management driven by more accurate scene-level awareness.
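Embedding-based search of this kind usually works by turning each clip segment and each query into a vector, then ranking segments by similarity. The following is a minimal sketch of that ranking step using cosine similarity. It does not call the TwelveLabs API: the three-dimensional vectors, the clip IDs, and the `search` function are made up for illustration, and real embeddings have far more dimensions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec: list[float], index: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Return the IDs of the top_k segments most similar to the query."""
    scored = [(cosine(query_vec, vec), clip_id) for clip_id, vec in index.items()]
    scored.sort(reverse=True)
    return [clip_id for _, clip_id in scored[:top_k]]


# Hypothetical index: segment ID -> embedding produced by some video model.
EXAMPLE_INDEX = {
    "reel_01@00:12": [0.9, 0.1, 0.0],
    "reel_02@03:40": [0.1, 0.8, 0.2],
    "reel_03@01:05": [0.7, 0.3, 0.1],
}

if __name__ == "__main__":
    # Hypothetical embedding of a text query.
    query = [1.0, 0.2, 0.0]
    print(search(query, EXAMPLE_INDEX))
```

Smaller embeddings shrink the index and speed up this comparison across a large library, which is the practical benefit the release emphasizes.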

Closing notes and practical guidance

This week’s releases show two clear trends: tools for modifying existing footage are gaining real practical traction, and open-source image models are back in the spotlight with stronger, tweakable options. Filmmakers should think in terms of tradeoffs.

Choose per task: Use text-to-video models for concept and previs. Use modify workflows for plate fixes, inserts, and editorial tightening.

Manage fidelity: Expect AI-generated or modified clips to need bridging steps—upres, color grading, and compositing—to fit into high-end pipelines.

Experiment with open models: Z-image can run locally, while FLUX.2 is practical mainly where cloud GPUs are available. Both allow teams to prototype custom LoRAs and integration points.

Plan ethics and consent: Tools that alter performances or dialogue require clear permission and production policies.

The bottom line for production teams is simple: more realistic choices are now available, but each model brings limits and tradeoffs. The right approach is to experiment with a few promising tools, integrate their outputs into established post pipelines, and reserve human oversight for final delivery quality and consent-sensitive decisions.