In this week’s episode of Denoised, Addy breaks down a practical, start-to-finish AI film pipeline used to create a short cinematic western sequence. The rundown moves through the toolset choices, the creative tests that shaped the approach, and the production steps a filmmaker would need to reproduce similar results: character generation, background creation, compositing, animation, grading, and audio. Key tools covered include Nano Banana Pro, Wan 2.2 Animate, ComfyUI and Comfy Cloud, DaVinci Resolve, and Suno for music and voice.

What Addy frames as the main goal

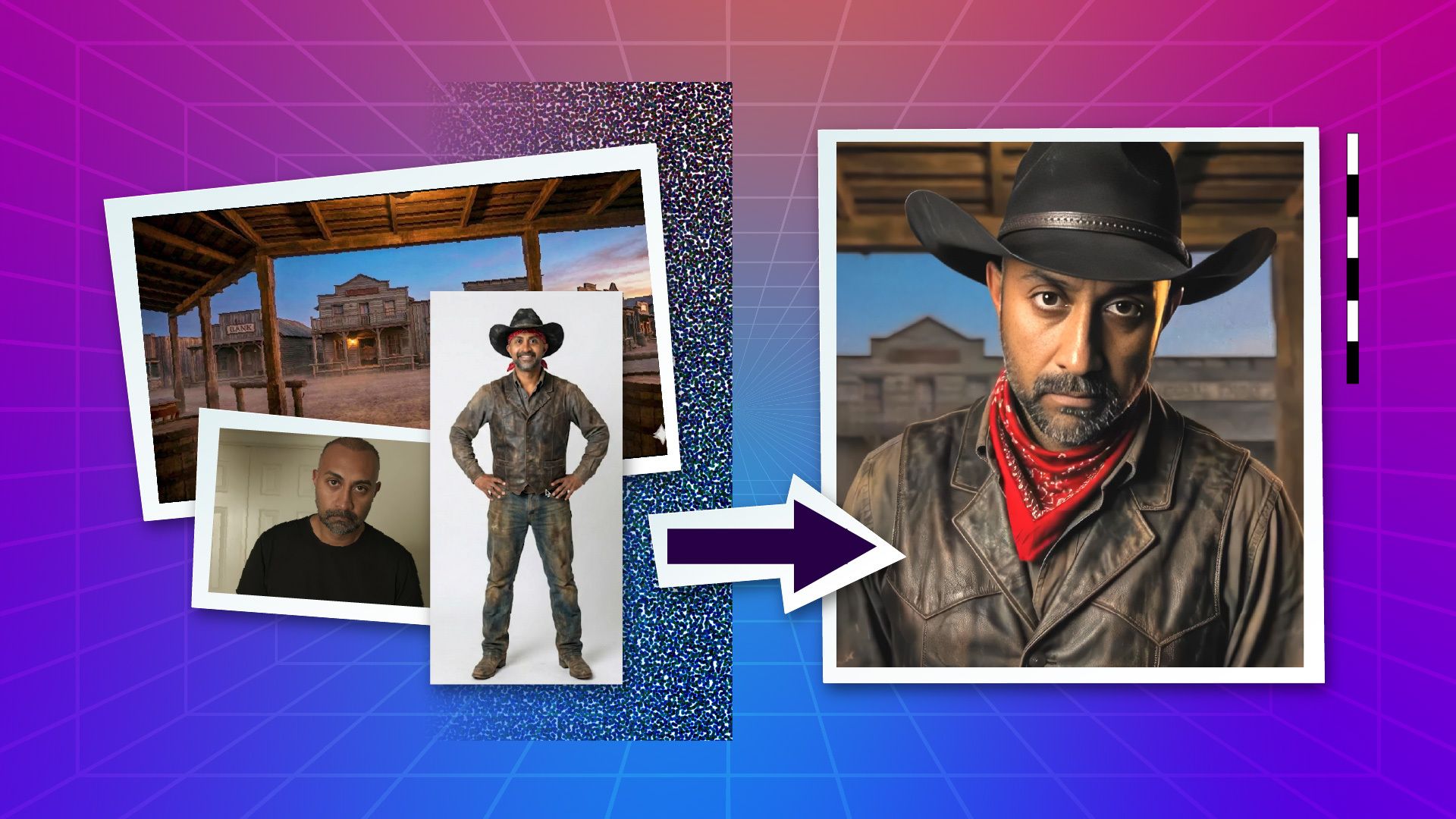

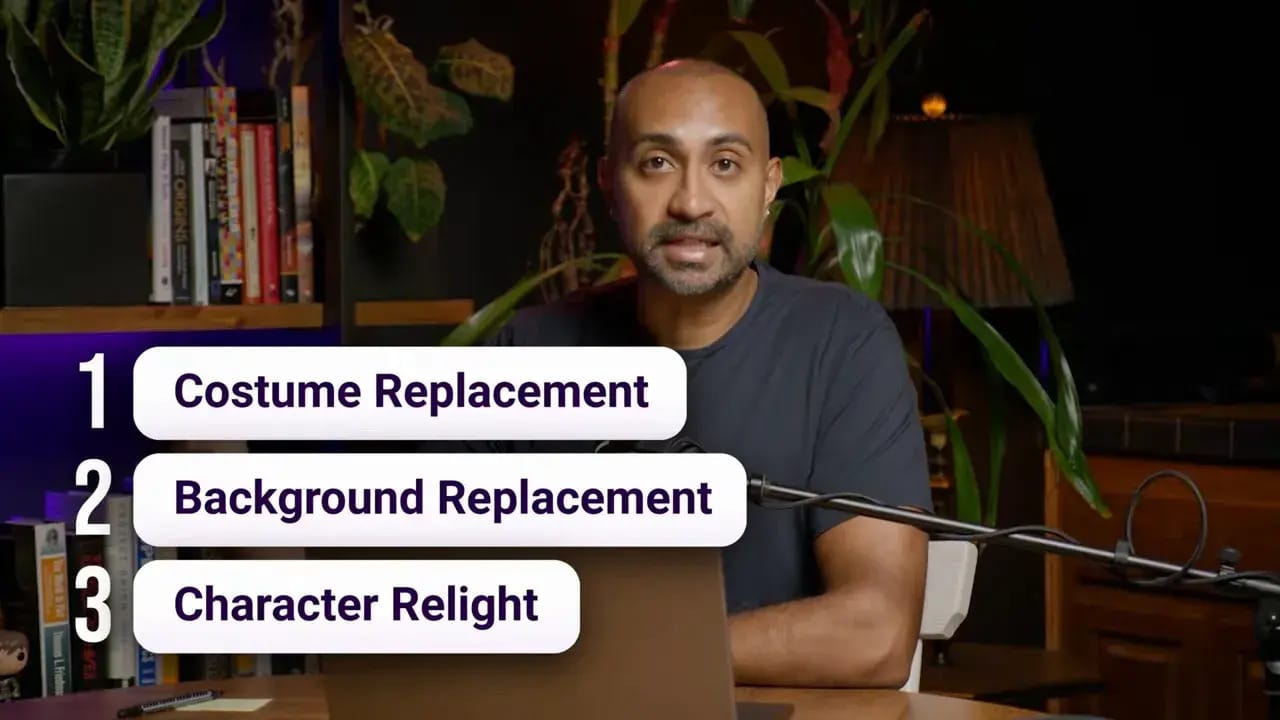

The primary objective was straightforward: replace costume and background, then relight a real human performance so it sits convincingly in a new environment. Addy emphasizes three classic VFX tasks—costume replacement, background replacement, and relighting—and tests how far modern AI tools can take those tasks while preserving authentic human nuance.

Tools and workflow overview

Addy lays out the production stack early on. The image generation and compositing were done with Nano Banana Pro, image-to-video with Veo 3.1, and the actual per-frame video generation came from an open source model, Wan 2.2 Animate. Compositing and color work used DaVinci Resolve (plus Lightroom for stills and Premiere for edits when needed). Music and a rugged voice were created in Suno. For processing, ComfyUI is used locally and fal.ai is used when higher-end GPUs (A100, H100) are required.

Start small: a single test shot

Rather than attempting an entire sequence right away, the workflow begins with a compact test. Addy shot a low-angle living-room performance and iterated the costume and lighting with Nano Banana until the look felt right: a black cowboy hat, red bandana, and the smoke-and-glow of a cigar (without actually smoking one on set). This single-image test revealed a limitation: pure image-to-video models can invent motion in ways that break continuity—case in point, a cigar that disappears and reappears mid-shot.
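A cheap way to catch this kind of break before it reaches the edit is to scan each frame for the prop. The sketch below assumes you already have one boolean per frame saying whether the cigar is visible, from manual review or a detector of your choice. `find_dropouts` is a hypothetical helper, not part of any tool named in the episode. It reports stretches where the prop vanishes and then comes back.

```python
def find_dropouts(present: list[bool]) -> list[tuple[int, int]]:
    """Return inclusive (start, end) frame ranges where a prop disappears
    after being seen and later reappears, i.e. a continuity break."""
    dropouts = []
    seen = False        # has the prop appeared at least once?
    gap_start = None    # first missing frame of the current gap, if any
    for i, flag in enumerate(present):
        if flag:
            if gap_start is not None:
                dropouts.append((gap_start, i - 1))
                gap_start = None
            seen = True
        elif seen and gap_start is None:
            gap_start = i
    # A gap that runs to the last frame is an exit, not a dropout, so it is ignored.
    return dropouts

flags = [True, True, False, False, True, True, False]
print(find_dropouts(flags))  # [(2, 3)]
```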

Keep the human performance as the backbone

Addy argues that retaining a real human performance as the input significantly improves the final result. Rather than remapping the performance onto a different actor (which introduces retargeting errors), the workflow uses the same person as both input and target. This minimizes dimensional mismatch and preserves the subtle performance details that sell realism: hand gestures, timing, and facial micro-expressions.

Addy explains the concept of retargeting from motion capture: it’s math-heavy and lossy. Keeping the geometry consistent leaves the AI less to “guess,” so the output better retains the original physicality.
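A toy planar example makes the lossiness concrete. It simplifies the problem by assuming naive retargeting, where joint angles are copied unchanged from one skeleton to another. Real retargeting solvers are more sophisticated, but they exist to correct exactly this kind of error. With identical bone lengths (the same person as input and target), the hand lands where it did in the source. With different proportions, it drifts. The bone lengths here are arbitrary values in meters.

```python
import math

def end_effector(angles, lengths):
    """Forward kinematics for a planar joint chain: returns the tip (x, y)."""
    x = y = 0.0
    total = 0.0
    for theta, length in zip(angles, lengths):
        total += theta  # each joint angle is relative to the previous bone
        x += length * math.cos(total)
        y += length * math.sin(total)
    return x, y

def naive_retarget_error(angles, src_lengths, dst_lengths):
    """Copy joint angles unchanged onto a differently proportioned skeleton
    and measure how far the tip lands from the source tip."""
    sx, sy = end_effector(angles, src_lengths)
    tx, ty = end_effector(angles, dst_lengths)
    return math.hypot(tx - sx, ty - sy)

pose = [math.radians(40), math.radians(70)]  # shoulder, elbow
print(naive_retarget_error(pose, [0.30, 0.25], [0.30, 0.25]))  # 0.0: same body
print(naive_retarget_error(pose, [0.30, 0.25], [0.34, 0.28]))  # about 0.058: different body
```

Contact details, such as a hand touching a hat brim, are exactly what this drift breaks. That is why reusing the performer's own geometry matters.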

Asset building: character and background

Asset creation is split into three tasks: character generation, background generation, and compositing. For the character, Addy produced four views (front, 3/4, back, and side) so the models have maximum reference information for poses seen from those angles. Multiple consistent views reduce the chance of jarring artifacts when the subject turns or moves.
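One way to picture why four views help is that, at any moment, there is a reference view reasonably close to the subject's current orientation. The sketch assigns assumed yaw angles to each view (0° front, 45° three-quarter, 90° side, 180° back). It also assumes a left/right-symmetric character, so a single side view covers both profiles. These values and the `nearest_view` helper are purely illustrative. They do not describe how Wan 2.2 or Nano Banana Pro select references internally.

```python
# Hypothetical yaw (degrees) assigned to each of the four reference views.
REFERENCE_VIEWS = {"front": 0.0, "three_quarter": 45.0, "side": 90.0, "back": 180.0}

def nearest_view(subject_yaw: float) -> str:
    """Pick the reference view closest to the subject's current yaw.
    Yaw is folded into [0, 180], assuming a left/right-symmetric character."""
    folded = abs(((subject_yaw + 180.0) % 360.0) - 180.0)
    return min(REFERENCE_VIEWS, key=lambda view: abs(folded - REFERENCE_VIEWS[view]))

for yaw in (0, 30, -95, 170):
    print(yaw, nearest_view(yaw))
```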

For the environment, Addy iterated on the saloon exterior until the composition supported believable lighting. The goal was a single hero lamp that could act as a local light source to relight the actor convincingly. Iterations included widening the patio and adding distant city blocks to create depth and continuity between reverse angles.
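When judging whether a relit plate is plausible, it helps to remember what a single local lamp does physically. Brightness falls off with the square of the distance and with the angle between the surface and the light. The sketch below is a textbook Lambertian point-light estimate, offered only as a reference model. It is not how Nano Banana Pro or Wan 2.2 relight anything, and the positions and power values are arbitrary units.

```python
import math

def point_light_intensity(light_pos, light_power, surface_pos, surface_normal):
    """Lambert's cosine term times inverse-square falloff for one point light.
    Returns 0 for surfaces facing away from the light."""
    to_light = [l - s for l, s in zip(light_pos, surface_pos)]
    dist = math.sqrt(sum(c * c for c in to_light))
    direction = [c / dist for c in to_light]
    norm_len = math.sqrt(sum(c * c for c in surface_normal))
    normal = [c / norm_len for c in surface_normal]
    cos_theta = max(0.0, sum(d * n for d, n in zip(direction, normal)))
    return light_power * cos_theta / (dist * dist)

lamp = (0.0, 2.0, 0.0)
print(point_light_intensity(lamp, 100.0, (1.0, 2.0, 0.0), (-1.0, 0.0, 0.0)))  # 100.0
print(point_light_intensity(lamp, 100.0, (2.0, 2.0, 0.0), (-1.0, 0.0, 0.0)))  # 25.0
```

A cheek facing the lamp from 1 unit away receives four times the light it would receive from 2 units away, and surfaces facing away receive none. An actor whose brightness ignores the lamp's position tends to read as pasted in.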

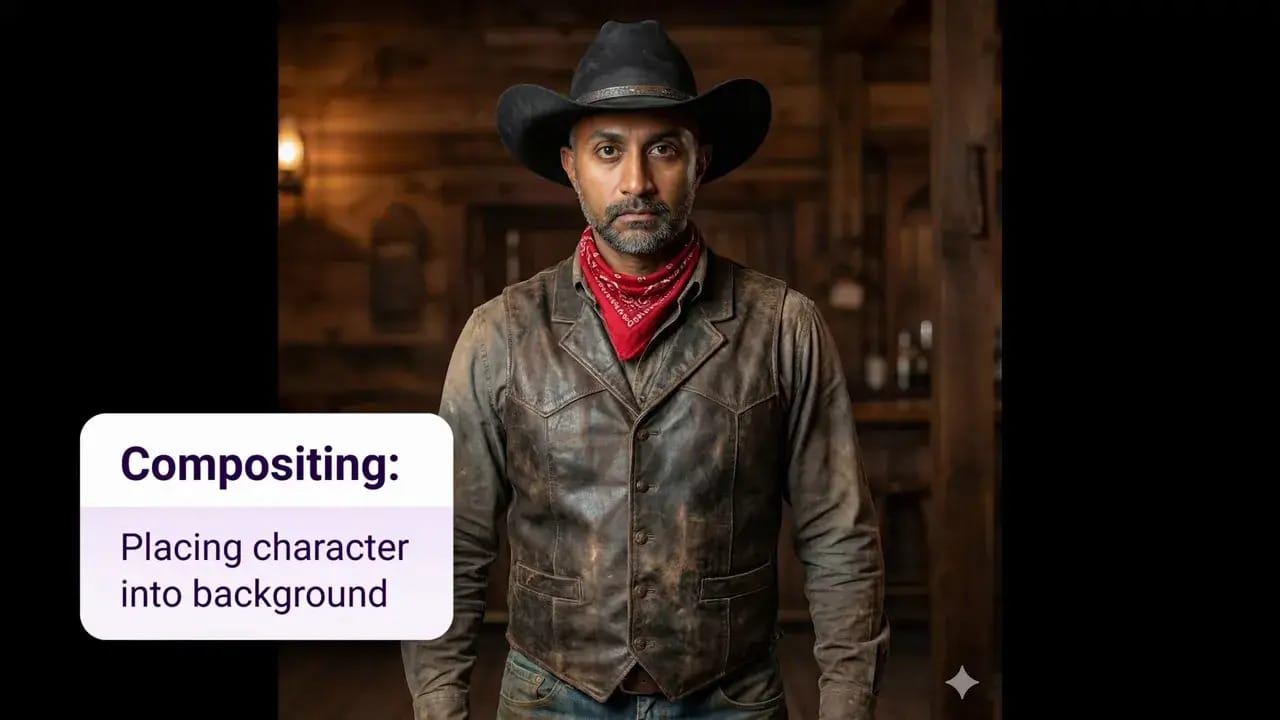

Compositing strategy and creative iteration

Compositing isn’t just technical; it’s creative iteration. Addy shows several generations where costume and lamp placement shift slightly, and picks the version that best sells the mood. Small decisions—moving a bandana from forehead to neck, dirtying a shirt, dialing lamp intensity—have an outsized effect on believability when the clip is in motion.

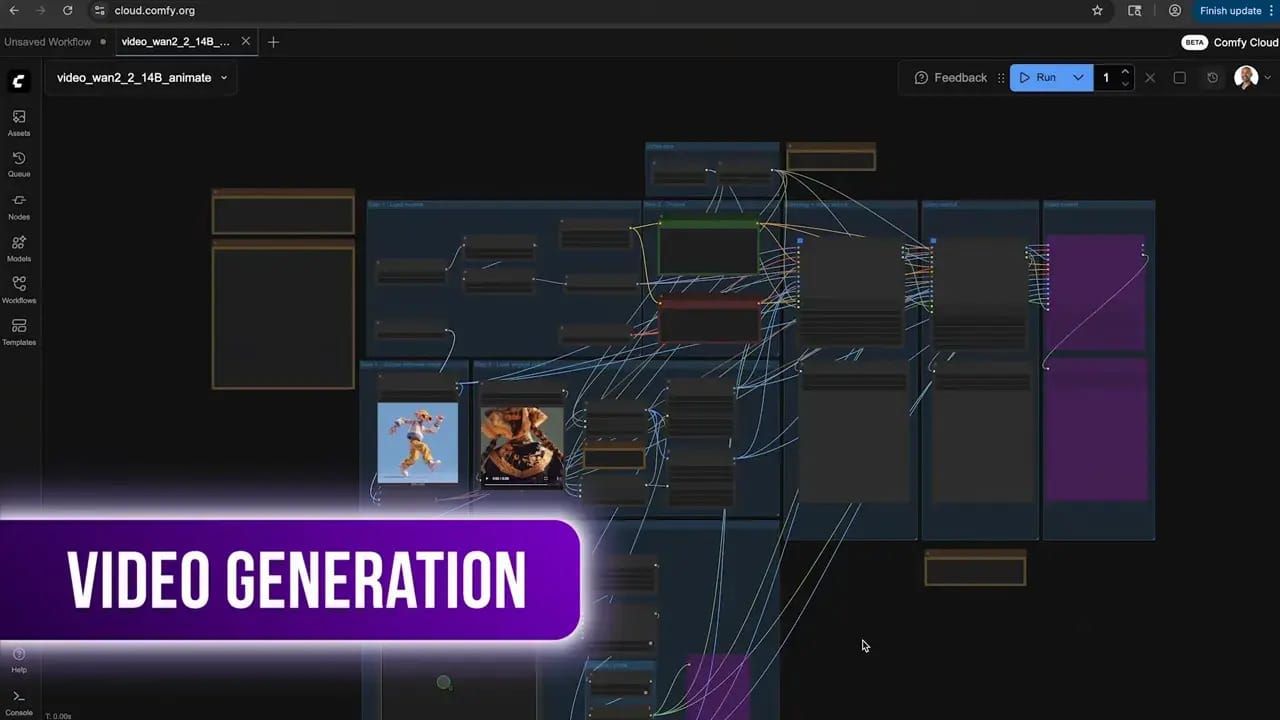

Animating with Wan 2.2: ComfyUI and fal.ai

For animation, Addy uses Wan 2.2 Animate (the 14B parameter model) with a "move" workflow to drive the frame-by-frame generation from the single reference image plus the recorded performance. ComfyUI acts as the local interface for model experimentation and clip-encoding. For faster throughput and access to beefier GPUs, fal.ai is used as a managed compute option.

Practical note: upload the single image and the source video, choose the animate/move workflow, and run. The more prep and reference you feed the model, the less it has to invent during animation—this directly affects output fidelity.
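On the local ComfyUI route, you can queue a workflow exported in ComfyUI's API format by POSTing it to the server's `/prompt` endpoint. By default, ComfyUI listens on `127.0.0.1:8188`. The sketch assumes the reference image and source video are already in ComfyUI's `input` folder. The node IDs `"12"` and `"27"` and the `video` input key are hypothetical placeholders that depend on the loader nodes in your own Wan 2.2 Animate workflow. The fal.ai route uses fal's own client and is not shown here.

```python
import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188/prompt"  # ComfyUI's default local address

# Hypothetical node IDs: look these up in your own API-format workflow export.
REFERENCE_IMAGE_NODE = "12"
SOURCE_VIDEO_NODE = "27"

def build_animate_request(workflow: dict, image_name: str, video_name: str) -> urllib.request.Request:
    """Point the workflow's loader nodes at the prepared reference image and
    recorded performance, then wrap it in a POST request for ComfyUI."""
    patched = json.loads(json.dumps(workflow))  # deep copy; leave the template untouched
    patched[REFERENCE_IMAGE_NODE]["inputs"]["image"] = image_name
    # Assumption: the video loader node takes its file name under the "video" key.
    patched[SOURCE_VIDEO_NODE]["inputs"]["video"] = video_name
    body = json.dumps({"prompt": patched}).encode("utf-8")
    return urllib.request.Request(
        COMFY_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
```

In practice, you would load the exported workflow with `json.load`, call `build_animate_request`, and send the request with `urllib.request.urlopen(req)`. ComfyUI answers with JSON containing a `prompt_id` that identifies the queued job.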

Post: grading, upscaling, and assembly in Resolve

AI-generated frames often come with a recognizable sheen—overly strong contrast, saturated colors, missing grain, and limited lens artifacts. Resolve is used to tame those tendencies and push the sequence into a cinematic palette. Addy recommends using Resolve’s upscaler to mitigate detail issues and then applying film-oriented corrections: reduce exaggerated saturation, reintroduce grain, add subtle vignetting, and correct lens distortion as needed.
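To show what each of the three film-style corrections does, they can be sketched per pixel. This plain-Python illustration rests on several assumptions: RGB values in 0..1, Rec. 709 luma weights, a quadratic vignette, and monochrome Gaussian grain, with arbitrary default strengths. It is not how Resolve implements its grain or vignette tools. In practice you would dial these in with Resolve's own controls.

```python
import math
import random

REC709_LUMA = (0.2126, 0.7152, 0.0722)

def grade_pixel(rgb, x, y, width, height,
                saturation=0.85, vignette=0.25, grain=0.02, rng=None):
    """Apply three film-style corrections to one RGB pixel (values in 0..1)."""
    # 1. Reduce saturation: blend each channel toward the pixel's luma.
    luma = sum(c * w for c, w in zip(rgb, REC709_LUMA))
    desat = [luma + (c - luma) * saturation for c in rgb]
    # 2. Vignette: r is 0 at the frame center and close to 1 at the corners.
    dx = (x + 0.5 - width / 2) / (width / 2)
    dy = (y + 0.5 - height / 2) / (height / 2)
    r = min(1.0, math.hypot(dx, dy) / math.sqrt(2))
    falloff = 1.0 - vignette * r * r
    # 3. Grain: one Gaussian sample shared by all channels (monochrome grain).
    noise = rng.gauss(0.0, grain) if rng is not None else 0.0
    return tuple(min(1.0, max(0.0, c * falloff + noise)) for c in desat)

rng = random.Random(42)  # seeded so the grain pattern is reproducible
print(grade_pixel((0.9, 0.3, 0.2), 100, 50, 1920, 1080, rng=rng))
```

Blending toward luma tames the oversaturated AI look without changing the pixel's luma. The vignette and grain then reintroduce some of the lens and film texture that generated frames lack.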

Frame alignment is simplified by keeping the generated clips at the source frame rate (30 fps in this case). Dropping the AI clips back into the edit timeline at the same frame rate maintains frame-accurate sync with the original performance, which makes cutting and pacing straightforward.

Audio: quick scoring and voice in Suno

For music and vocal lines, Addy turned to Suno. The requirement was specific: a rugged, husky male voice to underscore the emotional moment. After a few iterations, the generated track fit the sequence and helped sell the tone. Suno can be a fast way to prototype musical directions and voice lines without waiting on composers or session singers.

Closing note

The assembled sequence demonstrates that AI tools can handle many parts of a short-form cinematic pipeline: look development, character dressing, environment creation, animation, and finishing. The takeaway is methodical. Do the creative homework first (capture a strong performance, produce multi-angle references, and iterate in small tests), then give the AI tooling targeted jobs instead of asking it to guess at everything.

This pipeline is a practical example of mixing human craft with AI assistance. It does not replace production discipline; it rewards it. For filmmakers and VFX artists, the path forward is iterative: test small, capture intentional performance, and let specialized tools handle specialized tasks. The result is not only a convincing short sequence but a repeatable process that teams can adapt to other scenes and genres.