Welcome to VP Land!

Three months ago, ByteDance's Seedance was barely on anyone's radar outside China. This week, the MPA is demanding it be shut down for copyright infringement. Meanwhile, Autodesk is suing Google over who gets to call their AI filmmaking tools "Flow."

What's moving:

Seedance goes viral, Hollywood goes defensive

Amazon builds AI Studio

Runway raises $315M for world models

Kling 3.0 merges storyboards with audio

Seedance 2.0 launches (with realism that's too good)

ByteDance officially launched Seedance 2.0, advancing its video generation model with a unified multimodal architecture. The upgrade jumps from Seedance 1.5's 5-second clips to 15-second multi-shot sequences with integrated stereo audio, and adds director-level editing controls that let creators reference and modify specific characters, scenes, and storylines.

Multimodal input. A single prompt can include up to 9 images, 3 video clips, and 3 audio clips plus text instructions. The model references composition, motion, camera movement, visual effects, and audio from input assets, breaking what ByteDance calls "material boundaries of conventional video generation."
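
For anyone scripting against limits like these, here is a minimal sketch of a pre-flight check on the reported caps (9 images, 3 video clips, 3 audio clips, plus text). The `PromptAssets` class, its field names, and the `violations` helper are hypothetical illustrations, not part of any ByteDance API.

```python
from dataclasses import dataclass, field

# Caps as reported in the paragraph above. Names are hypothetical.
MAX_IMAGES = 9
MAX_VIDEOS = 3
MAX_AUDIO = 3


@dataclass
class PromptAssets:
    """Hypothetical container for one multimodal prompt."""
    text: str
    images: list[str] = field(default_factory=list)
    videos: list[str] = field(default_factory=list)
    audio: list[str] = field(default_factory=list)

    def violations(self) -> list[str]:
        """Return a message for each asset type that exceeds its cap."""
        checks = [
            ("images", len(self.images), MAX_IMAGES),
            ("videos", len(self.videos), MAX_VIDEOS),
            ("audio", len(self.audio), MAX_AUDIO),
        ]
        return [f"{name}: {count} > {cap}" for name, count, cap in checks if count > cap]


if __name__ == "__main__":
    prompt = PromptAssets(
        text="Two-shot chase through a night market",
        images=[f"ref_{i}.png" for i in range(10)],  # one over the image cap
        videos=["motion_ref.mp4"],
    )
    print(prompt.violations())
```

An empty list means the prompt fits within the reported limits. Anything else names the asset type to trim.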

Dual-channel audio and physical accuracy. Seedance 2.0 generates stereo audio with background music, ambient sound effects, and character voiceovers aligned to visual rhythm. ByteDance claims strong performance in complex motion scenes (multi-subject interactions, choreography, and precise timing), with physical accuracy that eliminates the glitches common in earlier AI video. We've tracked this evolution as consistency improves across the industry.

Video extension and targeted editing. Beyond generation, the model supports stable video extension (continuing shots based on new prompts) and targeted editing of specific clips, characters, or storylines. This positions it as both a creation and post-production tool, alongside other emerging editing capabilities.

Comparison to earlier versions. We covered Seedance 1.0 when it launched at roughly $0.50 per 5-second clip. Version 2.0 adds unified audio-video architecture, multimodal input, and director-level controls, a substantial leap in both capability and output length.

Hollywood backlash. The launch has come with baggage. Users quickly figured out the model could remix copyrighted material with "startling accuracy," generating deepfakes including a viral Brad Pitt vs. Tom Cruise fight scene and recreations of Avengers: Endgame and Friends.

The MPA called on ByteDance to "immediately cease its infringing activity," framing the launch as "disregarding well-established copyright law that protects the rights of creators and underpins millions of American jobs." SAG-AFTRA joined the condemnation, calling the videos "blatant infringement" and stating that "Seedance 2.0 disregards law, ethics, industry standards and basic principles of consent." And Disney sent a cease and desist letter to ByteDance on Friday, accusing the company of treating Star Wars, Marvel, and other Disney franchises as if they were free public domain assets.

SPONSOR MESSAGE

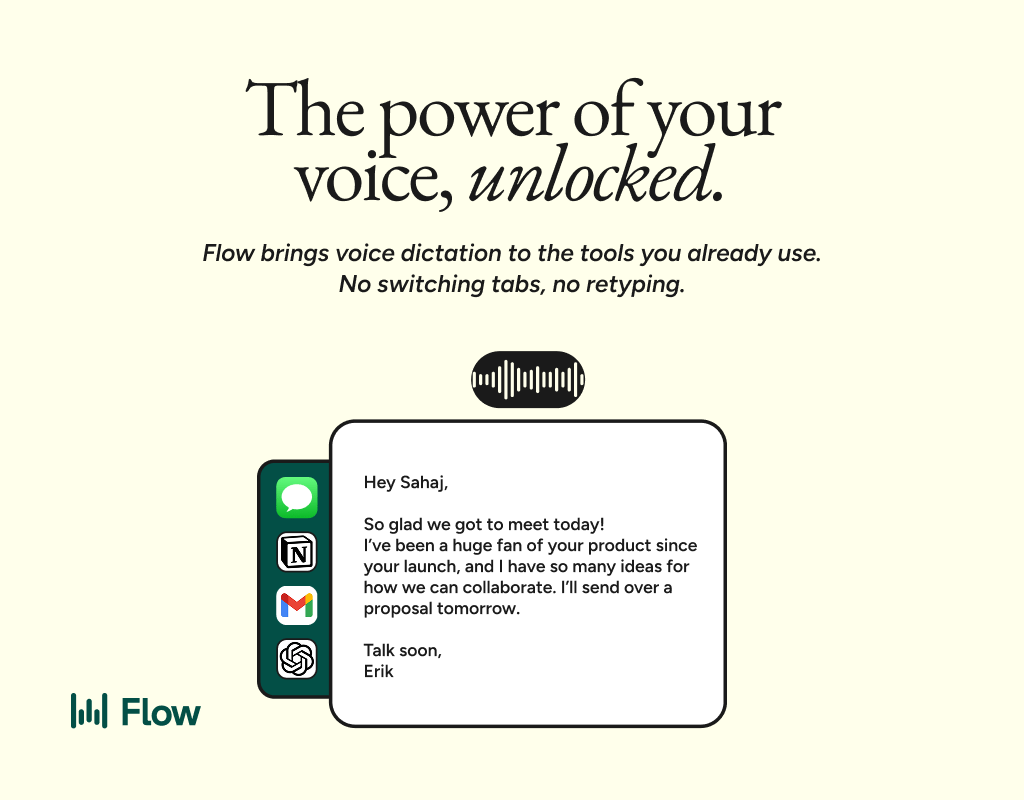

Better prompts. Better AI output.

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting. Try Wispr Flow for AI or see a 30-second demo.

Amazon MGM Studios Launches AI Production Tools

Amazon MGM Studios has launched an internal division called AI Studio, led by Vice President Albert Cheng, to build proprietary AI tools for film and TV production, according to Reuters. The unit operates under a "two pizza team" philosophy with a small group of product engineers and scientists, and plans to open a closed beta to select industry partners in March 2026.

The tools target "the last mile" between consumer AI and professional filmmaking. Cheng described the gap between what existing AI tools can produce and the granular control directors need for cinematic content. Capabilities include improving character consistency across shots, integrating with industry-standard creative tools, and giving directors finer control over AI-generated elements.

Three notable collaborators are testing early versions: Robert Stromberg (director of "Maleficent") and his company Secret City, Kunal Nayyar ("The Big Bang Theory") and Good Karma Productions, and former Pixar/ILM animator Colin Brady.

"House of David" Season 2 serves as proof of concept. Director Jon Erwin used a stack of over 15 AI tools (including Midjourney, Runway, Kling, Magnific, and Topaz) combined with traditional VFX to create 350+ AI-generated shots, up from 73 in Season 1. Scenes included 100,000 warriors charging into battle. We covered the production workflow in a previous edition.

Amazon frames this as expanding creative risk, not replacing workers. Cheng stated that "AI can accelerate, but it won't replace, the innovation and the unique aspects that [humans] bring." However, Amazon has cut roughly 30,000 corporate employees since October 2025, with CEO Andy Jassy citing AI-driven efficiencies.

Amazon expects to share initial results from the beta in May 2026.

The full story: Amazon MGM Studios Launches AI Production Tools

Runway Raises $315M for World Models

Runway raised $315 million in Series E funding at a $5.3 billion valuation, nearly doubling its previous valuation. According to TechCrunch, the round was led by General Atlantic and will fund development of what Runway calls its next generation of world models, which are AI systems that build internal representations of environments to predict future events.

World models as core strategy. According to TechCrunch, Runway released its first world model in December 2025 and now views the technology as central to applications across medicine, climate, energy, and robotics. We previously covered Runway's Act-Two performance capture system.

Expanding beyond media. While Runway built its customer base in media, entertainment, and advertising, the company is increasingly seeing adoption in gaming and robotics, a shift that signals ambitions well beyond filmmaking tools.

Competitive pressure. Runway's world model push comes as Fei-Fei Li's World Labs and Google DeepMind both released competing models.

The takeaway: Runway is repositioning itself as a world models company, not just a video generation tool. The funding and product roadmap suggest the company sees world models as the next frontier for AI capabilities, with applications far broader than creative tools.

More New Models: Kling 3.0 and Krea Realtime 14B

Two major video model releases this week point in opposite directions: Kling 3.0 doubles down on production features and API pricing, while Krea's open-source release democratizes real-time generation.

Kuaishou's Kling 3.0 merges the earlier Kling 2.6 and O1 models into a unified system with native multi-shot storyboarding, 4K resolution at 60fps, and integrated audio generation with multilingual dialogue. Pricing through fal.ai runs $0.17-$0.39 per second depending on tier, positioning it for professional workflows.
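
To put the per-second pricing in budget terms, here is a rough sketch that turns the quoted $0.17-$0.39 range into a cost per clip. The clip lengths are illustrative assumptions, not confirmed Kling 3.0 durations, and the function name is made up for this example.

```python
# Per-second rates quoted above for Kling 3.0 via fal.ai (lowest and highest tier).
RATE_LOW_USD = 0.17
RATE_HIGH_USD = 0.39


def clip_cost_range(seconds: float) -> tuple[float, float]:
    """Return the (low, high) cost in USD for a clip of the given length."""
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    return (round(seconds * RATE_LOW_USD, 2), round(seconds * RATE_HIGH_USD, 2))


if __name__ == "__main__":
    # Illustrative clip lengths only; supported durations are not stated above.
    for length in (5, 10, 15):
        low, high = clip_cost_range(length)
        print(f"{length:>2}s clip: ${low:.2f} to ${high:.2f}")
```

At these rates a 10-second clip lands between $1.70 and $3.90, so a sequence built from dozens of takes adds up quickly at the top tier.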

Krea AI's open-sourced Krea Realtime 14B takes a different path: at 14 billion parameters, it is approximately 10x larger than comparable open-source video models, yet it runs at 11 frames per second on a single NVIDIA B200 GPU. The real-time architecture supports interactive workflows with 1-second latency to first frame, distilling techniques from Wan 2.1 for frame-to-frame consistency.

Two Minute Papers highlights OmnimatteZero from NVIDIA Research, a tool that can remove objects from videos in real time.

Stories, projects, and links that caught our attention from around the web:

🎥 Atomos unveiled the Shogun AV-19 at ISE 2026, a rack-mountable 19-inch 4K HDR monitor-recorder-switcher that records four isolated SDI feeds plus program output in ProRes, for $2,099

📚 OpenAI won an appeal reversing a court order that would have forced disclosure of privileged communications about deleting pirated book datasets, with the court ruling that denying willful infringement claims doesn't waive attorney-client privilege

🎬 Pirates of the Caribbean director Gore Verbinski warned that AI models face a "recursive training problem" where they ingest and regurgitate AI-generated content, arguing AI should solve problems humans cannot rather than replace human creativity

🎭 Google backed down in Disney's IP dispute, now blocking Disney character prompts in Gemini and Nano Banana after the Mouse House's December cease and desist letter claimed Google tools were a "virtual vending machine" for Disney IP

Addy and Joey dive into Google’s Genie world generation model, explore OpenClaw AI agents, and check out Kling 3.0, which combines the top features from multiple video models.

Read the show notes or watch the full episode.

📆 Upcoming Events

March 8: 40th Annual ASC Awards, Los Angeles, CA

🆕 March 9: GDC Festival of Gaming 2026, San Francisco, CA

🆕 March 12: SXSW 2026, Austin, TX

🆕 March 24: REDUCATION Los Angeles - RED Digital Cinema, Burbank, CA

🆕 April 18: NAB Show 2026, Las Vegas, NV

View the full event calendar and submit your own events here.

Thanks for reading VP Land!

Have a link to share or a story idea? Send it here.

Interested in reaching media industry professionals? Advertise with us.