The week’s biggest story started with a seemingly simple announcement: Sora 2 is out. In this episode of Denoised, hosts Addy and Joey break down Sora 2’s technical capabilities, the new TikTok-style app OpenAI shipped with it, and the thorny IP and likeness questions that followed.

What's new in Sora 2?

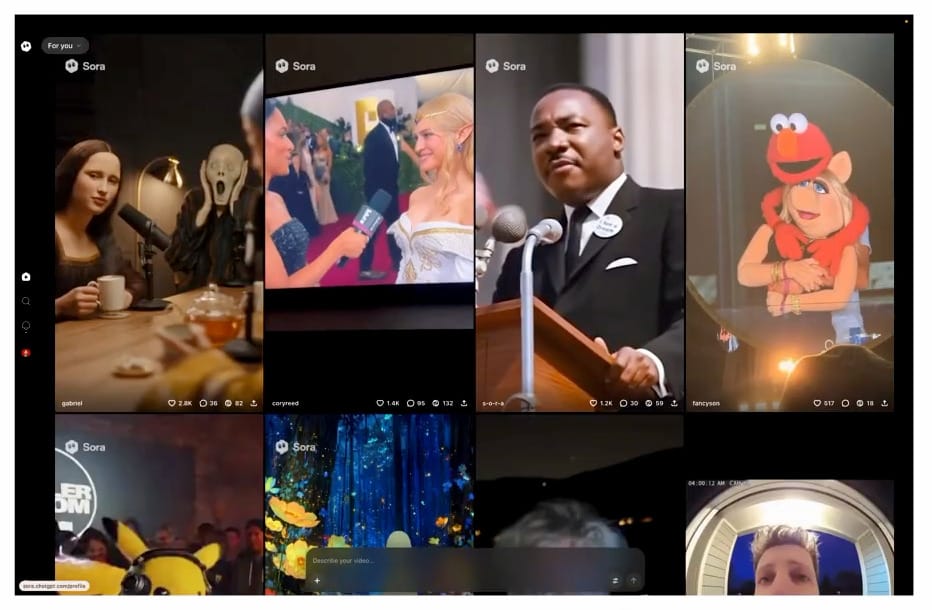

Sora 2 is a multimodal video model that produces photoreal video and audio, and OpenAI paired it with a vertical-video social app designed for short, meme-friendly clips. The model generates multi-shot sequences, sound design, and narration — essentially a short-form video creator driven by text or image prompts. It also supports a new Cameos workflow that scans a user’s face and ties that likeness to their account for later inclusion in generated scenes.

How Sora 2 actually behaves: multiple shots, story-first workflow

Unlike models that return a single continuous clip, Sora 2 often returns multi-shot sequences that attempt to tell a short narrative. Given a scenario such as “someone walking into Costco and finding a giant roll of toilet paper,” the system produces a short, multi-shot sketch with sound, camera cuts, and even a simple script. That makes it less a clip generator and more an agentic storytelling tool for memes and short bits.

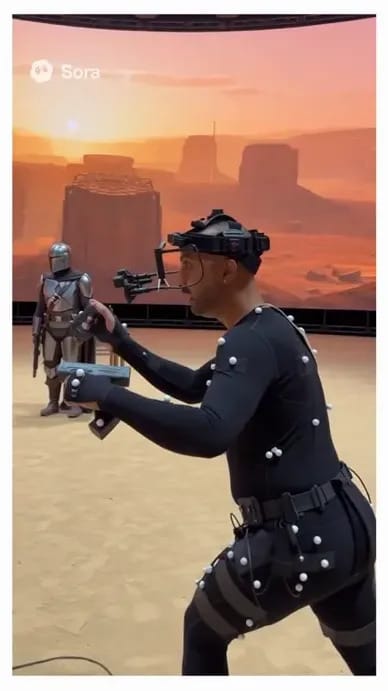

That said, the model’s approach is more aligned with faithful replication of training data than radical creative reimagining. Reviewers noted that Sora 2 tends to “regurgitate” specific visual motifs from the media it trained on to reach photorealism. In practice that can yield convincing digital humans and set dressing, but it also raises clear copyright flags.

Cameos: a practical approach to likeness control

One of Sora 2’s most discussed features is Cameos — a simple face-scan workflow that authenticates the user, binds that face to a username, and lets the account holder control who can insert that likeness into generated content. The scan process resembles Face ID calibration: users film a short selfie video, read a verification code, and look around so the model captures a full range of expressions and angles.

From a production standpoint, Cameos is notable for two reasons:

Quality of single-scan digital doubles: The Cameos pipeline produces remarkably coherent digital humans from a single brief scan, sufficient for short-form pieces and concept tests.

Permission control: The UI lets users set permission levels (private, friends-only, public), a practical way to make misuse of a living person’s likeness harder.
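The tiered permission idea is easier to see in code. Below is a minimal Python sketch of how such a likeness check could work. It is purely hypothetical: `Cameo`, `Visibility`, and `can_use_cameo` are illustrative names, not OpenAI’s API. Two behaviors are also assumptions: the owner can always use their own likeness, and a new cameo defaults to private.

```python
from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    """Hypothetical permission tiers mirroring the three levels described above."""
    PRIVATE = "private"
    FRIENDS_ONLY = "friends_only"
    PUBLIC = "public"


@dataclass
class Cameo:
    owner: str                                   # username the face scan is bound to
    visibility: Visibility = Visibility.PRIVATE  # assumed default: private
    friends: set[str] = field(default_factory=set)


def can_use_cameo(cameo: Cameo, requester: str) -> bool:
    """Return True if `requester` may place this likeness in a generated scene."""
    if requester == cameo.owner:
        return True                  # assumption: owners can always use their own likeness
    if cameo.visibility is Visibility.PUBLIC:
        return True
    if cameo.visibility is Visibility.FRIENDS_ONLY:
        return requester in cameo.friends
    return False                     # PRIVATE: nobody but the owner


# Usage with hypothetical usernames
me = Cameo(owner="alice", visibility=Visibility.FRIENDS_ONLY, friends={"bob"})
print(can_use_cameo(me, "bob"))    # True
print(can_use_cameo(me, "carol"))  # False
```

The design choice worth noting is that the check is keyed to the account the scan is bound to, not to the face itself. Permission follows the username, and anything not explicitly allowed is denied.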

IP and copyright: OpenAI’s opt-out approach

OpenAI took a controversial route on copyrighted characters: the company will not proactively exclude specific IP from training or generation. Instead, copyright holders must opt out to prevent Sora from using their characters. That means, by default, Sora’s training corpus includes publicly available media — and the model can produce outputs that strongly resemble existing characters.

There are two connected problems here:

Likeness vs. character use: Cameos limits unauthorized use of a real person's likeness, but it does not stop the model from recreating fictional or copyrighted characters unless those rightsholders opt out.

Practical enforcement and accuracy: The guardrails are inconsistent. In testing, users found that some copyrighted characters were generated once, then blocked on subsequent attempts. The system reports violations generically, without explaining which element triggered the block, turning experimentation into a guessing game.

The implication for studios and creators is clear: major rights holders will likely file takedowns or opt-out requests quickly. Until they do, default availability combined with inconsistent enforcement makes Sora a tempting, if legally risky, playground for unauthorized pastiches.

Testing Sora 2: what comes out of the app

Practical tests conducted during the launch window highlight both the promise and the messiness. Examples included:

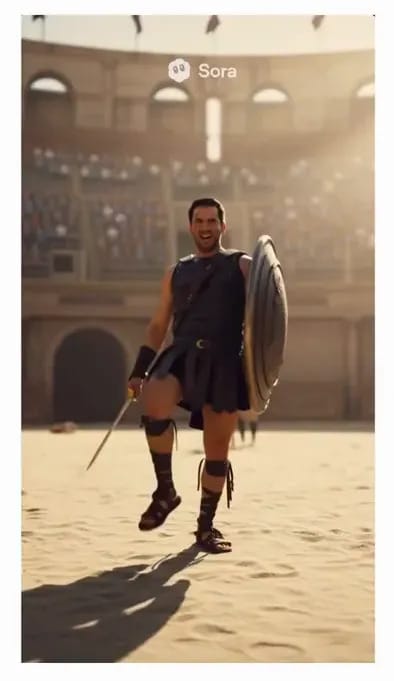

A convincing gladiator sequence that echoed a well-known historical epic but also contained shot-to-shot continuity issues.

Digital doubles of the hosts that, in some shots, looked strikingly like the people they scanned and in other shots featured odd facial proportions or lighting artifacts.

Combinations of IP across franchises (Wednesday Addams with Reacher on an Enterprise-like bridge) that sometimes rendered convincingly and sometimes failed partway through generation.

Two operational notes emerged from those tests:

The public app instance appears to run on a lower-tier compute profile (watermarked, softer image quality, roughly 480p). The separate Sora 2 Pro model described in OpenAI’s blog posts appears to offer much higher fidelity and is reserved for paid or Pro users.

OpenAI is likely using the free rollout to collect real-world data: which keywords trigger similarity checks, what outputs get flagged, and where the guardrails fail. That data collection is standard, but it means creators are effectively beta testing a tool that will probably be monetized or gated later.

Regulatory and liability strategy: why a social app?

OpenAI released Sora 2 inside a social app framework. The hosts speculated about the rationale: social platforms are typically protected by intermediary liability laws that shield hosting platforms from responsibility for user-posted content. By positioning Sora 2 as a social space where users post content, OpenAI may be aiming to leverage existing legal protections and reduce its exposure.

Expect this to be tested in court or negotiated with major studios. The company can rely on DMCA-style notice-and-takedown mechanics, but the novel element is that Sora also provides the generative tool that creates the potentially infringing content — a legal gray area that invites litigation.

How Sora 2 fits into the generative video landscape

Generative video companies are diverging into two broad paths:

High-end production models aiming to assist filmmakers and studios (examples include Luma’s Ray family and Runway’s high-fidelity pipelines).

Personalized, social-scale generation aiming to populate feeds and enable mass user creativity (Sora 2’s current direction).

Sora 2 sits in the second bucket, though its modeling of humans and physics is clearly of interest to production teams. For now, studios wanting fine-grained control and licensing clarity will likely keep using higher-tier tools with explicit licensing models. But Sora 2’s Cameos scanning and its “social-ready” distribution flow show an appetite for a future where audiences can place themselves into branded experiences, a capability studios may eventually monetize through partnerships.

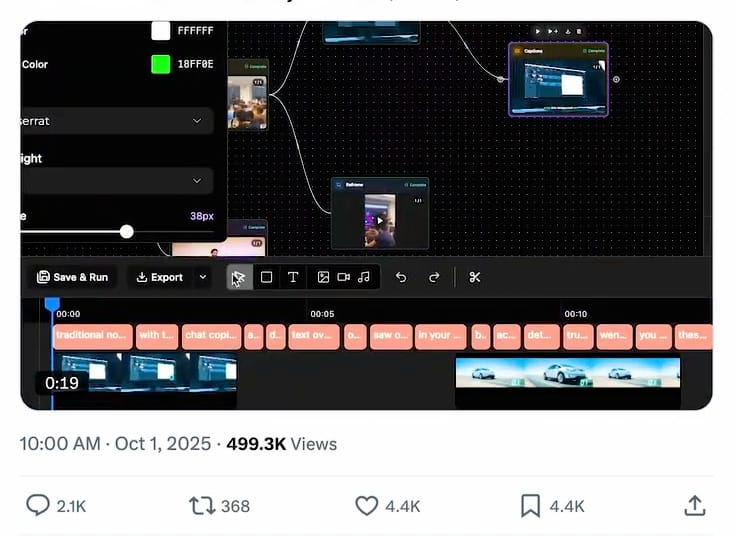

Mosaic: an agentic, node-based video editor worth noting

Beyond Sora 2, the episode highlighted Mosaic — an agentic video editor that blends node-based clip organization with prompt-driven editing. Conceptually, Mosaic presents an interactive canvas where clips are tiles that can be connected, annotated, and then assembled into a traditional timeline export (XML compatible with Premiere, Resolve, and Final Cut).

Why this matters: as generative assets become more common, new editing paradigms will emerge. Node-based compositing has long been a staple of VFX (Nuke, and Fusion both standalone and inside DaVinci Resolve). Mosaic’s approach suggests an editing future where AI-driven selection and structural edits happen before final timeline polishing.

More AI updates

Nano Banana: improved aspect-ratio controls and a clearer image-output experience. Together these reduce the need for upload-order workarounds and simplify multi-reference workflows.

Seedance: added support for specifying first and last frames, and some models now accept audio files to drive performance. That audio-driven control is especially useful for animated shorts and lip-synced dialog assembly.

What filmmakers and producers should do next

A short, practical checklist for creative teams:

Experiment tactically: spend an hour in Sora 2 or similar tools to map the output quality and guardrail behavior, then archive your findings. Treat public app access as a test bed, not final delivery tech.

Inventory IP exposure: studios and brands should audit where their characters might appear in generative outputs and prepare opt-out or partnership strategies.

Integrate Cameos-style capture where appropriate: for marketing or experiential promos, a scanned avatar workflow can create viral user experiences if done with clear permissions and brand alignment.

Prepare legal frameworks: ask legal teams to draft rapid-response takedown procedures and licensing offers. The next few months will likely bring a mix of opt-outs and commercial deals.

Evaluate node/agentic editing options: Mosaic-style tools could streamline rough assembly in marketing workflows; keep an eye on XML export support so you can move from AI-assisted edits to finishing in Premiere/Resolve.

Final thoughts

Sora 2 is both a technical experiment and a policy experiment. It shows how close generative video is to usable photoreal results for short-form content and demonstrates a new product strategy: bundle a powerful model with a social surface to accelerate testing and distribution. For filmmakers, the takeaway is pragmatic optimism — the tools are arriving quickly, they will change workflows, and the industry will need to wrestle with IP, permissions, and new interfaces that sit somewhere between “generation” and “traditional production.”