In this week’s episode of Denoised, Addy breaks down a practical workflow for building a hybrid live-action short that pairs a real actor with a fully synthetic creature. The segment walks through multi-camera planning, environment and character generation, believable interactions, and the post work required to make AI-generated plates sit alongside real footage. Topics covered include multi-camera consistency, Nano Banana Pro for environments, Veo and Wan for interactions, Kling models for video interpolation, and DaVinci Resolve’s masking and grading tools.

Multi-Camera Consistency: Start with Something Real

Addy’s first rule is simple and practical: ground yourself in reality. That means building the scene around a physical, tactile element so every generated angle shares a concrete reference. In his short, a round table became the anchor. He shot plates with and without himself, then used that same table as the common scale and reflection reference across all synthetic generations.

Key techniques here include shooting the same setup from every camera angle intended for the edit, and preserving a physical prop that will register correctly when compositing. Reflections and contact points from the table help guide lighting decisions during generation and later corrections in compositing.

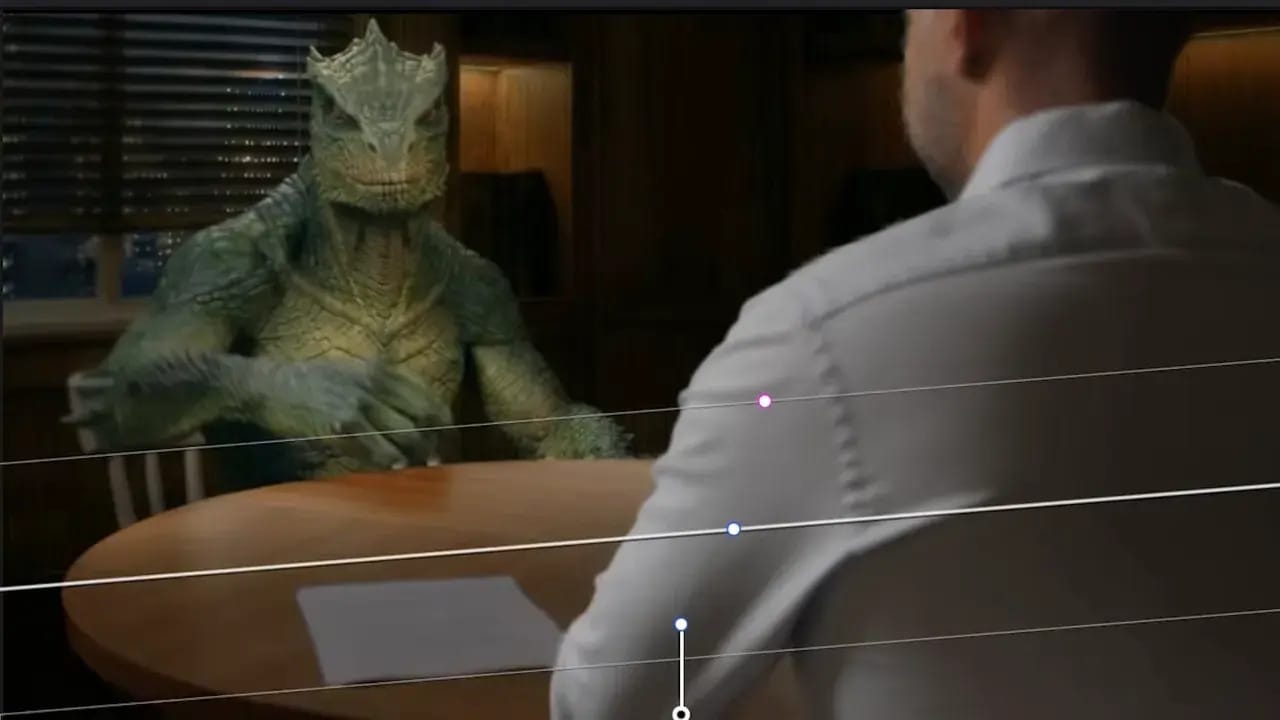

Creating Characters and Environment with Nano Banana Pro

Character design started with rapid iterations. Several creature concepts were tested—some too distracting, others promising—before settling on a job-seeking lizard. Nano Banana Pro handled the environment generation. Addy tried multiple moods: futuristic boardroom, traditional corporate, then landed on a moody, wood-paneled boardroom to match the sitcom-like broadcast look he wanted.

The challenge with image generation for environments is spatial consistency. Models can arbitrarily place objects or change implied depth between views. To fix that, Addy recreated the opposite viewpoint and manually adjusted the table and camera crop in Photoshop so scale and camera height matched the practical plates.
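The scale and crop matching described above can be reduced to a small calculation once the table is measured in both frames. This is a minimal sketch, not Addy's actual Photoshop procedure. The `TableMeasure` type, the `match_plate` helper, and the pixel values are hypothetical, and it assumes both plates share the same resolution and that the table is the only reference used.

```python
from dataclasses import dataclass


@dataclass
class TableMeasure:
    """Hypothetical measurements of the anchor table in one frame."""
    width_px: float    # apparent table width in pixels
    top_edge_y: float  # y position of the table's near edge, pixels from top


def match_plate(practical: TableMeasure, generated: TableMeasure):
    """Return (scale, y_shift) to apply to the generated plate.

    scale   : uniform resize so the table widths agree
    y_shift : vertical offset (pixels) applied after scaling about the
              top-left corner so the table edges line up
    """
    if generated.width_px <= 0:
        raise ValueError("generated table width must be positive")
    scale = practical.width_px / generated.width_px
    # After scaling, the generated table edge sits at top_edge_y * scale.
    y_shift = practical.top_edge_y - generated.top_edge_y * scale
    return scale, y_shift


# Illustrative numbers only (not taken from the episode).
scale, y_shift = match_plate(
    TableMeasure(width_px=800, top_edge_y=600),
    TableMeasure(width_px=640, top_edge_y=500),
)
print(scale, y_shift)
```

A negative `y_shift` means the generated plate moves up after scaling. In practice the same numbers would be dialed in by eye against the practical plate, but measuring the table first gives a starting point.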

Generating Realistic Interactions: Veo and Wan

Interactions create the illusion that both characters exist in one space. Addy focused on a simple but effective moment: handing over a sheet of paper. He generated a synthetic resume on the table, then tried several models to animate the handoff. Results ranged from slow, awkward motion to a spurious extra hand appearing in the frame.

The best compromise for photoreal motion in this case came from Wan 2.2 Animate, which produced a convincing first-frame generation without distorting the live actor. Where generations lagged or produced artefacts, motion speed was corrected in post, and tight crops removed unwanted elements.

Matching Perspective and Surface Detail

For over-the-shoulder and side-by-side frames, perspective matching is non-negotiable. Addy took screenshots from his practical plate and used them as exact references when prompting for new frames. Scale adjustments and seating placement were iterated until the creature sat believably at the table.

Before moving to video interpolation, each image plate was run through a skin enhancer. This step had two benefits: improving the live actor’s skin detail and adding texture to the creature’s scales, converting a blocky render into something with believable micro-detail.

Polishing and Compositing in DaVinci Resolve

Compositing was handled in DaVinci Resolve using a pragmatic toolset. The AI Magic Mask allowed a one-click separation of the actor from background plates. Addy layered his live shoulder and forearm in front of the generated creature plate, matched color and contrast, and applied slight defocus to his live plate so both elements sat at believable focal distances.
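Layering the masked live element in front of the generated plate is, conceptually, a standard "over" composite. The sketch below shows that operation for a single pixel with straight (unpremultiplied) alpha. It illustrates the general technique only and is not Resolve's internal implementation. The `over` function and the sample colors are hypothetical.

```python
def over(fg, alpha, bg):
    """Composite a foreground pixel over a background pixel.

    fg, bg : (r, g, b) tuples with channel values in 0.0..1.0
    alpha  : mask value for the foreground, 0.0 (hidden) to 1.0 (opaque)
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return tuple(f * alpha + b * (1.0 - alpha) for f, b in zip(fg, bg))


# Fully opaque mask: the live actor's pixel replaces the creature plate.
print(over((1.0, 0.5, 0.0), 1.0, (0.0, 0.5, 1.0)))
# Soft mask edge: the two plates blend equally.
print(over((1.0, 0.5, 0.0), 0.5, (0.0, 0.5, 1.0)))
```

The soft-edge case is why mask quality matters: wherever the mask value falls between 0 and 1, color and brightness mismatches between the two plates show up directly in the blend.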

Spatial Perspective Control and Video Models: Kling 2.6 and Friends

Addy tested several video generation models (Kling O1, Kling 2.6, Veo 3.1, and Wan 2.5), looking for consistent motion and a controllable performance. Kling 2.6 performed best for certain camera movements, but still required careful prompting. The episode highlights a recurring constraint: current models offer limited control over spatial lighting and camera perspective as the viewpoint shifts.

That limitation shows up as inconsistent shadows, mismatched wall distance, and variations in background brightness between cuts. The workaround is careful plate design, multiple iterations, and planning for corrective grading work down the line.

Creative Camera Transitions with Interpolation

To spice up the edit, Addy experimented with a bullet-time-style transition between the side-by-side and over-the-shoulder shots. The trick was simple: supply a start frame and an end frame and let an interpolation model produce the in-between motion. The generated 5-second clip required speed and motion-blur fixes in Resolve to feel tighter and less floaty.

This demonstrates a useful pattern: use AI to produce a conceptual transitional shot, then refine timing, blur, and easing in your NLE rather than expecting the generator to deliver a finished plate at edit-ready quality.
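The timing fix comes down to simple arithmetic. As a rough sketch, assume the 5-second generated clip should play in 2 seconds on a 24 fps timeline. Both the target duration and the frame rate are assumptions for illustration, not figures from the episode, and `retime` is a hypothetical helper.

```python
def retime(source_seconds, target_seconds, fps=24.0):
    """Return the speed change needed to fit a clip into a target duration."""
    if source_seconds <= 0 or target_seconds <= 0 or fps <= 0:
        raise ValueError("durations and fps must be positive")
    speed = source_seconds / target_seconds
    return {
        "speed_percent": speed * 100.0,               # value to enter in the NLE
        "source_frames": round(source_seconds * fps),  # frames generated
        "output_frames": round(target_seconds * fps),  # frames kept in the edit
    }


# 5-second generated transition squeezed into an assumed 2-second slot.
print(retime(5.0, 2.0))
```

Speeding a clip up this much discards most of the generated frames, which is one reason added motion blur helps the faster move read as a camera whip rather than a skipped-frame stutter.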

Lighting Fixes and Final Color Work

Post was more than color grading. Addy used what he calls lighting fixes—simple shapes and gradients in Resolve’s color page, or “magic windows”—to control regional brightness and color casts. For example, the lizard’s torso had an overly yellow AI tint; a color selector and cooler temperature shifts corrected that.

Other fixes included darkening under-table regions and behind the actors where light wouldn’t realistically reach, isolating the creature when necessary, and using the depth map tool to subtly defocus backgrounds. These manual corrections are essential because video generations often arrive with mixed resolutions and inconsistent lighting across cuts.

Closing Thoughts

The workflow shows how current AI tools can speed creative exploration and lower the barrier for hybrid storytelling, while also highlighting where human craft still matters. Thoughtful set choices, careful plate preparation, and focused post work remain the difference between a clever experiment and a sequence that reads as part of a single, coherent scene.

For filmmakers and VFX artists, the key lesson is to combine strong production basics with AI experimentation. Use AI to generate iterations and ideas, but keep practical anchors and manual fixes in the pipeline. That balance is what produces polished, believable results today and offers a clear path as the tools continue to improve.