Veo 3.1 brings a familiar set of filmmaker-focused controls that make AI video generation feel closer to a practical production tool. In this episode of Denoised, hosts Addy and Joey break down Veo 3.1's key updates, examine Runway's new Apps approach to VFX tasks, walk through Eyeline's VChain research on reasoning for video generation, and look at NVIDIA's DGX Spark hardware arriving in the field.

Veo 3.1: incremental updates with outsized impact

Veo 3.1 is not a full generational leap, but the collection of quality improvements and workflow features adds meaningful control for filmmakers. The release focuses less on raw novelty and more on predictability, temporal consistency, and easier integration with production assets. Those priorities matter to anyone trying to use AI-generated footage as part of a larger edit or VFX pipeline.

Ingredients to video - use real assets as inputs

One of the most useful additions is the ingredients to video workflow. Instead of relying solely on a single starting frame or a fully text-driven generation, Veo supports multiple image inputs - people, props, locations - that the model will incorporate into the output. For filmmakers, that means more reliable character continuity and set consistency across generated clips.

Think of ingredients like a recipe. The images are the components, but the model still needs the ratios and direction that turn them into repeatable, coherent footage. Supplying real assets as inputs makes the result more deterministic, which reduces the guesswork when trying to match generated clips to principal photography or stock elements.

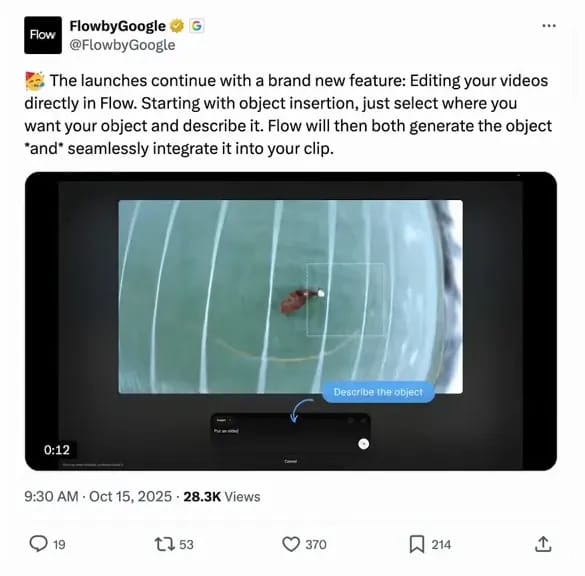

Annotation and insert tools in Flow - paint on the timeline

Flow, Google's web app portal for Veo, now includes a built-in annotation marker. The demo that circulated showed an editor drawing a square on an existing clip and instructing the model to add a person in that region. This is video inpainting and insertion built into the product interface rather than an ad hoc hack.

Spatial markup of this kind is about as direct as guidance to a model gets. Instead of wrestling with verbose prompts, the editor communicates intent directly on the frame. For production, this opens the door to faster turnaround on pickups, background replacements, or small insert shots that previously required full VFX passes.

Extend feature and temporal consistency

Another practical change is an extend feature that does more than copy the last frame. Veo 3.1 uses the last full second as the driving force when extending a clip. That extra temporal context reduces the jarring stutter and sudden content drift that sometimes occurs when a model has only a single frame for reference.

Temporal coherence is a core problem for video synthesis. Using a broader context window when extending or looping clips preserves motion intent and reduces artifacts, which matters when generated footage needs to intercut with real camera takes.
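To make the difference between a single-frame reference and a one-second reference concrete, here is a minimal sketch. It is not Veo's implementation, which is not public; the function name `context_window` and the representation of frames as a plain list are assumptions made for illustration only.

```python
def context_window(frames, fps, seconds=1.0):
    """Return the trailing frames covering roughly `seconds` of footage.

    Hypothetical helper: it always returns at least the final frame, and
    returns the whole clip if the clip is shorter than the window.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    count = max(1, round(fps * seconds))
    return frames[-count:]


# A 3-second clip at 24 fps, with each frame represented by its index.
clip = list(range(72))

single_frame = context_window(clip, fps=24, seconds=0)  # last frame only
last_second = context_window(clip, fps=24)              # trailing second

print(len(single_frame), len(last_second))
```

With only the final frame, a model sees where things are but not how they were moving. The trailing second carries direction and speed, which is the information an extension needs to continue motion smoothly.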

Where Veo sits in the ecosystem - Flow, API, and third-party apps

Flow provides access to many of Veo's most advanced UI-driven capabilities like the annotation marker and insert elements. Some of the ingredient-style inputs appear available via the API too, so integrators can pipeline image inputs into automated generation tasks. But the inpainting-style insert seems limited to Flow for now, which makes it the place to go when precise frame-level edits are needed.

Cost and throughput matter. For heavy usage, a Google AI Studio subscription can offer better credit economics than calling the API ad hoc. That was true when comparing earlier rates, and remains a reason to evaluate subscription versus API billing for production budgets and high-volume workflows.
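A rough way to frame the subscription-versus-API question is a break-even calculation. The sketch below uses placeholder prices, not real Google rates, and it assumes a flat monthly fee against simple per-second API billing. Real plans usually cap credits, which this deliberately ignores.

```python
def break_even_seconds(subscription_per_month, api_price_per_second):
    """Seconds of generated video per month at which a flat subscription
    costs the same as pay-per-second API billing."""
    if api_price_per_second <= 0:
        raise ValueError("api_price_per_second must be positive")
    return subscription_per_month / api_price_per_second


def cheaper_option(seconds_per_month, subscription_per_month, api_price_per_second):
    """Return which billing model costs less for a given monthly volume."""
    api_cost = seconds_per_month * api_price_per_second
    return "subscription" if subscription_per_month < api_cost else "api"


# Placeholder figures for illustration only (not actual pricing).
SUBSCRIPTION = 200.0   # hypothetical flat fee per month
API_RATE = 0.5         # hypothetical price per generated second

print(break_even_seconds(SUBSCRIPTION, API_RATE))
print(cheaper_option(100, SUBSCRIPTION, API_RATE))
print(cheaper_option(1000, SUBSCRIPTION, API_RATE))
```

Below the break-even volume, ad hoc API calls win. Above it, the subscription does, provided the plan's credit allowance actually covers that volume.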

How Sora and other models compare

Veo 3.1's updates sit alongside models such as Sora 2. Different tools have different strengths. Veo is being positioned as cinematic and filmmaker friendly, with controls that mirror familiar tools like selection and masking. Sora tends to push realistic detail in general-purpose generation and excels in different creative contexts.

Pricing disparities do exist, but once features, fidelity settings, audio, and duration are factored in, the cost per final usable second ends up closer across providers than headlines suggest. Some providers advertise lower entry prices but require subscription tiers or pro access for full quality, which affects real-world comparisons.
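The "cost per final usable second" idea can be written down as simple arithmetic. In this sketch every number is a made-up placeholder, and `keep_rate` (the fraction of generated footage that survives into the edit) is an assumed parameter, not something any provider publishes.

```python
def cost_per_usable_second(price_per_generated_second, keep_rate,
                           monthly_fee=0.0, generated_seconds=1.0):
    """Effective cost of each second of footage that makes it into the edit.

    keep_rate is the fraction of generated seconds that are usable (0 to 1).
    monthly_fee models a required subscription tier on top of usage pricing.
    """
    if not 0 < keep_rate <= 1:
        raise ValueError("keep_rate must be in (0, 1]")
    if generated_seconds <= 0:
        raise ValueError("generated_seconds must be positive")
    total = monthly_fee + price_per_generated_second * generated_seconds
    return total / (generated_seconds * keep_rate)


# Hypothetical tool A: pricier per second, but half the output is usable.
tool_a = cost_per_usable_second(0.40, keep_rate=0.5)

# Hypothetical tool B: cheap per second, but needs a paid tier and
# only a quarter of the output is usable.
tool_b = cost_per_usable_second(0.10, keep_rate=0.25,
                                monthly_fee=100.0, generated_seconds=500)

print(tool_a, tool_b)
```

With these placeholder inputs, the tool with the lower headline price ends up costing more per usable second, which is the effect described above.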

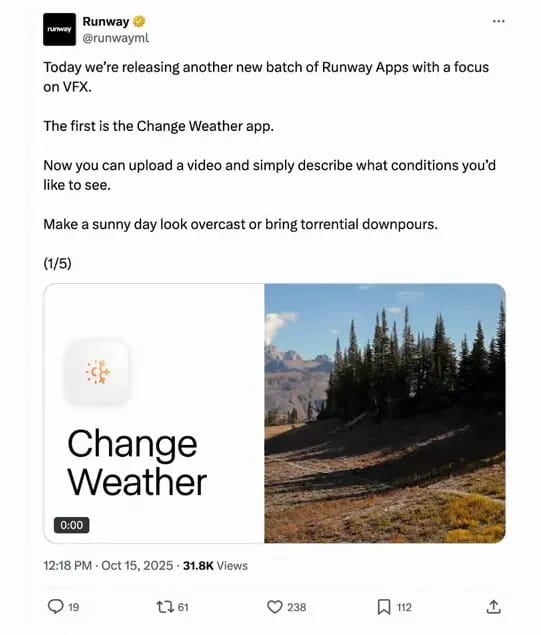

Runway Apps - focused workflows as presets

Runway introduced a new concept called Runway Apps. They resemble chatbot-style single-purpose apps: user-friendly, focused workflows that wrap the prompting, seeds, and generation settings into a repeatable preset. Instead of crafting prompts every time, a filmmaker chooses an app like "change the weather" or "relight scene" and supplies the input clips or images.

Under the hood those apps can work a few ways. They may store a custom set of seeds and parameters, create a tuned prompt template, or ship as a light adaptation of a model fine-tuned for a narrow task. For production use, presets save time and lower the bar for repeatability across many shots.

Useful presets include change background, time of day, relight, and color palette adjustments.

They can speed up the first creative pass in editorial or prep shots for VFX handoff.

Repeated processing of the same clip through multiple presets can cause degradation, so consider a node-based stack where changes apply in a single pass when possible.
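The degradation point has a loose analogy in classic generation loss. The toy sketch below is not how Runway Apps work internally. It only shows why composing adjustments and quantizing once preserves more than re-quantizing after every pass. The two "presets" are hypothetical gain changes on 8-bit pixel values.

```python
def quantize(value):
    """Clamp to the 8-bit range and round, as a stand-in for re-encoding."""
    return max(0, min(255, round(value)))


def stacked(pixel, adjustments):
    """Apply each adjustment as its own pass, re-quantizing every time."""
    for adjust in adjustments:
        pixel = quantize(adjust(pixel))
    return pixel


def single_pass(pixel, adjustments):
    """Compose all adjustments in floating point, then quantize once."""
    value = float(pixel)
    for adjust in adjustments:
        value = adjust(value)
    return quantize(value)


def darken(value):
    """Hypothetical preset: strong darkening."""
    return value * 0.1


def brighten(value):
    """Hypothetical preset: the inverse gain."""
    return value * 10.0


# Run a full 0-255 ramp through both strategies and count surviving levels.
ramp = range(256)
stacked_levels = {stacked(p, [darken, brighten]) for p in ramp}
single_levels = {single_pass(p, [darken, brighten]) for p in ramp}

print(len(stacked_levels), len(single_levels))
```

The stacked version collapses the ramp into a few coarse bands because detail is thrown away after the first pass. The single-pass version keeps every level. Generative presets degrade in more complex ways, but the same principle favors applying changes together where the tool allows it.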

Runway's approach emphasizes usability. While other tools target power users with node graphs and API hooks, Runway Apps aim to solve a single problem with minimal input. For teams on tight schedules, that tradeoff between control and convenience can be valuable.

VChain - Eyeline's research on reasoning for video generation

Eyeline, the VFX and research arm associated with Netflix, published a paper titled VChain: Chain-of-Visual-Thought for Reasoning in Video Generation. The work is notable because Eyeline is closely connected to filmmakers and understands the level of control professional production requires.

At its core, VChain adds a reasoning layer to video generation. Instead of sampling blindly from latent space, which tends to produce random motion, VChain uses a clip encoder to identify the elements needed for the scene and to populate a temporal latent space that describes when those elements should appear and how they should behave.

The paper uses simple analogies to explain the difference. If you prompt a model to show a falling feather and a rock, a naive generator might render both as generic falling objects. VChain instead generates an inference-time plan: the feather should drift, slow, and wobble before landing while the rock drops faster. As the model generates frames it checks those decisions and corrects mid-generation, looking backwards to ensure the motion matches the inferred narrative.

That look-back behavior is similar to recent advances in reasoning for language models and to the warp noise approach used in earlier video research. Warp noise shapes motion priors during denoising so the denoiser can consistently pick up intended motion. VChain applies a higher-level supervisory process that can be visualized as a "brain" monitoring and guiding frame synthesis.

For filmmakers this research points toward future tools that will let creators specify motion intent and behavior as discrete inputs. Imagine telling the model "feather sways left then right over two seconds" or sketching a motion path for a prop and having the model respect that path during generation. That level of temporal control will be essential for matching generated footage to complex edits and VFX pipelines.
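One way to picture "motion intent as a discrete input" is a small set of keyframes that a tool could sample at any time. This is a hypothetical representation for illustration, not VChain's interface or any shipping product's. The `(time, x, y)` tuple layout and the `position_at` helper are assumptions.

```python
from bisect import bisect_right


def position_at(keyframes, t):
    """Linearly interpolate an (x, y) position from (time, x, y) keyframes.

    Keyframes must be sorted by time. Times outside the range clamp to the
    first or last keyframe.
    """
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1:]
    if t >= times[-1]:
        return keyframes[-1][1:]
    i = bisect_right(times, t)
    t0, x0, y0 = keyframes[i - 1]
    t1, x1, y1 = keyframes[i]
    w = (t - t0) / (t1 - t0)
    return (x0 + w * (x1 - x0), y0 + w * (y1 - y0))


# "Feather sways left then right over two seconds" while descending.
# x is horizontal offset, y is height, both in arbitrary normalized units.
feather_path = [
    (0.0, 0.0, 1.0),     # start: centered, at the top
    (1.0, -0.25, 0.5),   # one second in: swayed left, halfway down
    (2.0, 0.25, 0.0),    # two seconds in: swayed right, landed
]

print(position_at(feather_path, 0.5))
print(position_at(feather_path, 1.5))
```

A generator that accepted a path like this could check each frame against the sampled position, which is the kind of explicit, checkable intent the research points toward.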

NVIDIA DGX Spark - a compact powerhouse for on-site GPU work

The DGX Spark, nicknamed "the little gold box," has started shipping. The smallest configuration starts at around four thousand dollars with 4 TB of storage, which places it in an interesting price-performance spot for production teams who need localized GPU power without full desktop rigs.

ComfyUI recently announced native support for DGX Spark, making it straightforward to run models and workflows that might be too large for typical desktop GPUs. The DGX Spark is built on NVIDIA's Blackwell architecture and trades some raw speed for larger memory capacity. The net effect is the ability to load bigger models without splitting them across multiple GPUs and paying the communication overhead that comes with that.

Practical advantages for production include:

Running larger models locally when cloud access is limited or costly.

Faster local encoding and decoding of high-resolution footage and codecs.

On-set visualization for virtual production tasks and Unreal Engine previews.

Benchmarks will tell the full story. A high-end desktop with a bleeding-edge RTX GPU may still outperform the DGX Spark on raw throughput thanks to clock rates and cooling. But when model memory is the constraint, the Spark's larger memory footprint removes a key bottleneck and lets teams run models that would otherwise require complex distributed setups.
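Whether memory is the constraint can be estimated before buying anything. The sketch below is a back-of-the-envelope check, assuming weights dominate memory use and applying a rough overhead factor for activations and buffers. The model size, the 1.2 overhead, and both memory figures are placeholder assumptions, so check the actual specifications of the hardware and model in question.

```python
def weights_gb(params_billion, bytes_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 params * bytes_per_param / 1e9 bytes per GB
    simplifies to params_billion * bytes_per_param.
    """
    return params_billion * bytes_per_param


def fits(params_billion, bytes_per_param, memory_gb, overhead=1.2):
    """True if weights plus a rough overhead factor fit in device memory."""
    return weights_gb(params_billion, bytes_per_param) * overhead <= memory_gb


# Hypothetical 14-billion-parameter model stored at 2 bytes per parameter.
MODEL_B = 14
FP16_BYTES = 2

print(weights_gb(MODEL_B, FP16_BYTES))            # weight size in GB
print(fits(MODEL_B, FP16_BYTES, memory_gb=24))    # placeholder desktop GPU
print(fits(MODEL_B, FP16_BYTES, memory_gb=128))   # placeholder high-memory box
```

If the model fails the check on a desktop card but passes on the larger device, memory rather than raw throughput is the deciding factor, and the benchmark comparison matters less.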

Practical takeaways for filmmakers and producers

Across these stories, several clear production-focused themes emerge. They are the kind of insights that media teams can act on right away.

Favor tools that expose control rather than hide it. Ingredients inputs, annotation tools, and extend features make it easier to get predictable outputs that can cut into edits.

Use presets and apps for repeatable tasks. Runway Apps and similar presets save time for single-purpose jobs like weather changes and relighting, but be mindful of iterative degradation when stacking multiple transforms.

Keep an eye on reasoning research. VChain-style approaches promise motion-level control. When these ideas reach production tools, they will change how teams approach animated motion matching and behavior-driven shots.

Evaluate local hardware for high-memory needs. Devices like the DGX Spark can unlock models that are too big for a single desktop GPU and may be cost-effective for teams that run many local inference jobs or need on-site rendering and encoding.

Consider economics across subscription and API options. For sustained generation tasks the price-per-credit math can favor platform subscriptions over API calls, depending on volume and feature access.

Final notes

The collective movement in these announcements is toward making AI video generation more predictable, usable, and integrated into film workflows. Veo 3.1 prioritizes temporal consistency and practical editing gestures. Runway is packaging usability into task-specific apps. Eyeline's research points toward reasoning-driven motion control. And the hardware layer is catching up with compact, high-memory boxes for production use.