Gaussian splats are quietly reshaping how filmmakers capture and replay real environments inside virtual production volumes. In this episode of Denoised, hosts Addy and Joey break down how 3D and 4D Gaussian splats fit into modern virtual production, why studios are pairing them with Unreal Engine pipelines, and how teams like ETC are turning 8-bit AI outputs into 16-bit EXR files for cinema-grade finishing.

How Gaussian splats plug into virtual production pipelines

Gaussian splats are best understood as one more tool in the production toolbox, not a full replacement for polygonal assets.

Several practical use cases emerged at Infinity Fest:

Fast environment capture for LED walls: scan a real location, ingest the splat into Unreal Engine via plugins, and push the result to a virtual production wall as a realistic, view-dependent backdrop.

Backup scans for pickups and episodic continuity: capture a practical set so later pickups or season renewals can use the scanned environment instead of rebuilding or rebooking locations.

Restricted or sacred locations: scan sites that cannot host a production, such as museums, memorials, and archaeological sites, and offer them as digitally reproducible sets without disturbing the physical place.

Companies like Volinga are shipping tools that bring ACES color pipelines into Gaussian splat workflows, which helps teams retain consistent color across on-set and virtual scenes. Plugins and capture devices such as XGRIDS PortalCam aim to simplify the end-to-end process so productions can scan and use splats without months of photogrammetry cleanup.

Limitations to plan for

There are tradeoffs. Gaussian splats are radiance field representations, so the lighting is effectively baked into the capture. That delivers highly believable reflections and glass behavior, but it also means the scene is not trivially relightable. For projects that require dynamic lighting changes, teams often combine splats with traditional geometry and textures, or they rebuild parts of a scene in polygonal form to retain flexible relighting.
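To see why the lighting ends up baked in, it helps to look at how a splat renderer turns splats into a pixel. The sketch below is a simplified, hypothetical 2D version of the front-to-back alpha compositing used by Gaussian splatting renderers. It assumes the splats have already been projected to screen space and gives each splat one flat color. Production renderers use anisotropic Gaussians and store view-dependent color, typically as spherical-harmonic coefficients. The names `Splat2D`, `splat_alpha`, and `shade_pixel` are illustrative and do not belong to any plugin's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat2D:
    """A Gaussian splat already projected to screen space (simplified, hypothetical)."""
    cx: float       # center x, in pixels
    cy: float       # center y, in pixels
    sigma: float    # isotropic spread in pixels (real splats are anisotropic)
    opacity: float  # peak opacity in [0, 1]
    rgb: tuple      # captured color: the lighting is stored here, not computed
    depth: float    # distance from the camera, used for sorting


def splat_alpha(s: Splat2D, px: float, py: float) -> float:
    """Opacity of one splat at a pixel: peak opacity scaled by a Gaussian falloff."""
    d2 = (px - s.cx) ** 2 + (py - s.cy) ** 2
    return s.opacity * math.exp(-0.5 * d2 / s.sigma ** 2)


def shade_pixel(splats, px: float, py: float) -> tuple:
    """Front-to-back compositing: C = sum_i c_i * a_i * prod_{j<i} (1 - a_j)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still reaching deeper splats
    for s in sorted(splats, key=lambda sp: sp.depth):  # nearest splat first
        a = splat_alpha(s, px, py)
        for k in range(3):
            color[k] += s.rgb[k] * a * transmittance
        transmittance *= 1.0 - a
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return tuple(color)


front = Splat2D(0, 0, 1.0, 0.5, (1.0, 0.0, 0.0), depth=1.0)  # half-transparent red
back = Splat2D(0, 0, 1.0, 1.0, (0.0, 0.0, 1.0), depth=2.0)   # opaque blue
print(shade_pixel([back, front], 0, 0))  # (0.5, 0.0, 0.5)
```

Nothing in `shade_pixel` takes a light position or intensity. That is the practical meaning of "baked": changing the lighting means recapturing or repainting the stored colors rather than moving a virtual lamp.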

Editing tools for splats are still maturing. The polygonal pipeline benefits from decades of asset-manipulation tools; Gaussian splat tooling is catching up but remains more constrained. That gap matters most on VFX-heavy productions, where per-shot control is non-negotiable.

What comes after splats: triangle Gaussian splats

The panel discussion also touched on a possible next evolution: triangle Gaussian splats. The idea is to hybridize splats with triangle primitives, aligning splat data with the triangle-based math that GPUs are built around. Because GPUs are optimized for triangles, switching the base primitive could improve parallel performance and memory access while preserving the view-dependent radiance benefits of splats.

Triangle splats sound promising, but they represent ongoing research rather than a production-ready standard. Teams should watch SIGGRAPH and related research tracks for the next papers and tools.

A practical case study: ETC's upscaling pipeline to 16-bit EXR

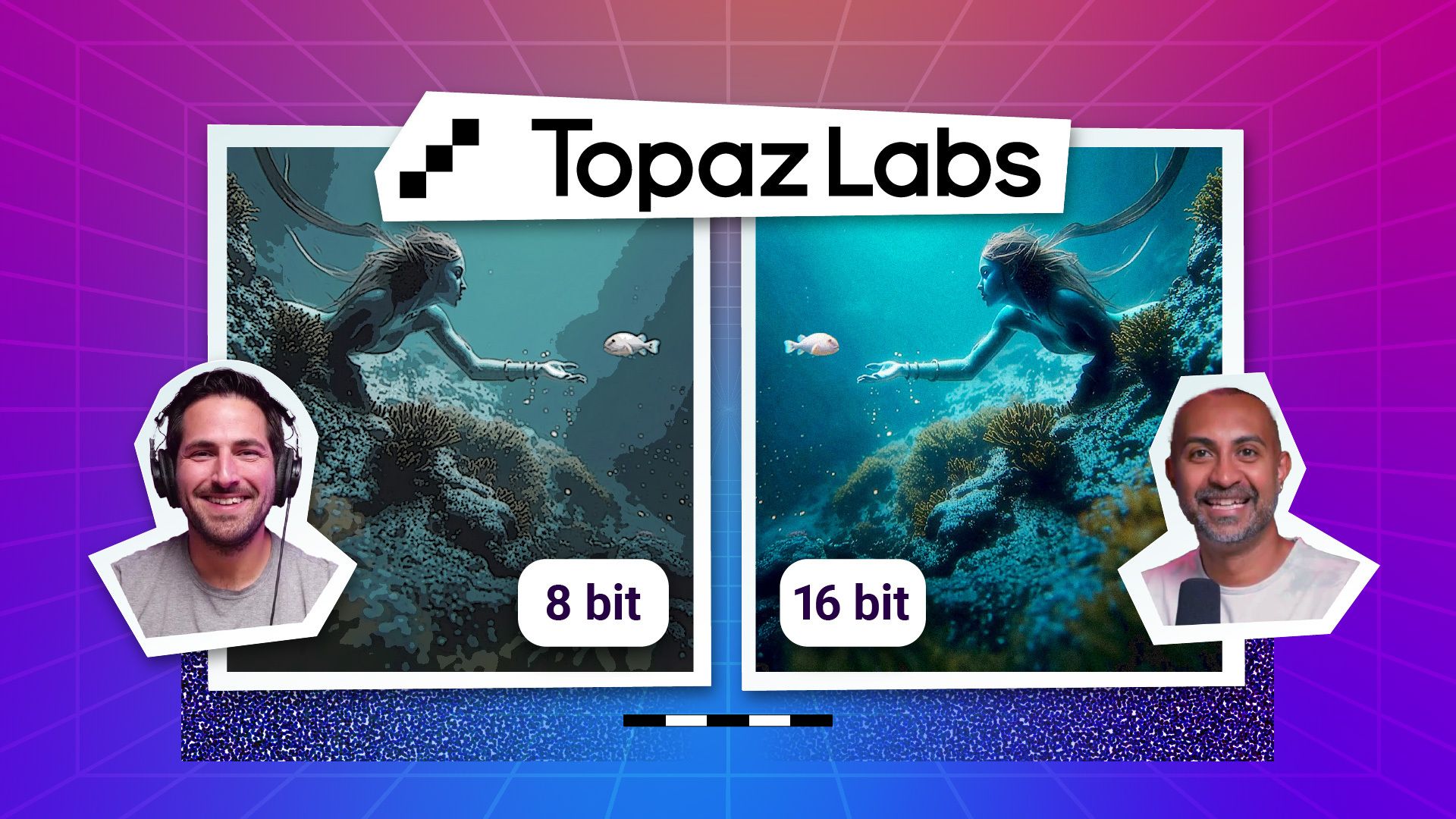

One of the most concrete production takeaways from Infinity Fest was a pipeline that converts AI-generated 8-bit outputs into 16-bit EXR files suitable for cinema finishing. The pipeline ETC built for its short film The Bends demonstrates a pragmatic approach for productions that want to use generative outputs while retaining the grading flexibility that high-end colorists demand.

Their staged workflow chained several Topaz models: a denoise pass to remove synthetic noise, an upres model to reconstruct higher spatial detail, and additional passes to clean up artifacts. The result was footage that started as an 8-bit-looking generation and ended as a 16-bit EXR with extended latitude for grading, matching, and compositing.
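The staged idea can be sketched in a few lines. This is a hypothetical, pure-Python illustration, not ETC's pipeline and not Topaz's algorithms: `box_denoise` and `nearest_upres` are simple stand-ins for learned denoise and upres models, and images are small grayscale lists. The design choice worth noting is converting to floating point once at the start, so intermediate stages never re-quantize to 8 bits, and rounding to 16-bit half floats (a sample type EXR supports) only at the end. Writing an actual EXR file would need a library such as OpenEXR, which is omitted here.

```python
import struct


def to_float(img8):
    """Normalize 8-bit code values (0-255) to floats in [0, 1] for processing."""
    return [[v / 255.0 for v in row] for row in img8]


def box_denoise(img):
    """3x3 box blur with edge clamping; a stand-in for a learned denoise model."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[yy][xx]
                      for yy in range(max(0, y - 1), min(h, y + 2))
                      for xx in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def nearest_upres(img, factor=2):
    """Nearest-neighbor upscale; a stand-in for a learned upres model."""
    return [[v for v in row for _ in range(factor)]
            for row in img for _ in range(factor)]


def to_half(img):
    """Round each sample to IEEE 754 half precision (16-bit float)."""
    return [[struct.unpack("e", struct.pack("e", v))[0] for v in row] for row in img]


def run_pipeline(img8, stages):
    """Convert to float once, apply each stage in order, store as half floats."""
    img = to_float(img8)
    for stage in stages:
        img = stage(img)
    return to_half(img)


frame = [[10, 12, 11],
         [13, 200, 12],
         [11, 12, 10]]
result = run_pipeline(frame, [box_denoise, nearest_upres])
print(len(result), len(result[0]))  # 6 6
```

Swapping a stand-in for a real model only changes the `stages` list; the float-in, half-float-out framing stays the same.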

Why bit depth matters

Bit depth determines the number of discrete tonal steps per color channel. An 8-bit image offers 256 levels per channel, which is often adequate for display but can introduce banding and limits how far a grade can push the image. Moving to 16 bits per channel (65,536 levels in integer form) expands the tonal steps dramatically, helping preserve detail in highlights and, crucially, in shadows. That matters for scenes built on subtle gradients, such as deep-sea or nighttime shots. The ETC team showed waveform comparisons in which a denser, smoother waveform replaced stepped banding after the Topaz processing chain.
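A quick way to see the difference is to quantize a smooth shadow ramp at each bit depth, apply a strong grade, and count how many distinct tonal values survive. The sketch below is a hypothetical illustration: it uses integer code values for both depths (EXR files typically store 16-bit half floats, which distribute precision differently), and the ramp range and 8x gain are arbitrary choices meant to mimic lifting a dark underwater or night plate.

```python
def quantize(x: float, bits: int) -> float:
    """Snap a [0, 1] value to the nearest of 2**bits evenly spaced code values."""
    top = (1 << bits) - 1  # 255 for 8-bit, 65535 for 16-bit
    return round(x * top) / top


def graded_levels(bits: int, lo=0.0, hi=0.05, samples=10_000, gain=8.0) -> int:
    """Quantize a shadow ramp from lo to hi, push it by `gain`, count distinct outputs."""
    seen = set()
    for i in range(samples):
        x = lo + (hi - lo) * i / (samples - 1)
        seen.add(min(1.0, quantize(x, bits) * gain))
    return len(seen)


print(graded_levels(8))   # 14
print(graded_levels(16))  # 3278
```

After the push, the 8-bit ramp collapses to 14 distinct steps, the numeric counterpart of a stepped waveform, while the 16-bit ramp keeps thousands.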

One practical finishing technique is to add a controlled grain pass after upscaling. Grain acts as dithering: it breaks up the hard edges between tonal steps, which reduces perceived banding and helps the image sit naturally in a cinema pipeline. Cinematographers and post supervisors on the project treated grain as part of the acquisition fingerprint, an intentional tool that increases photorealism and aids grading.
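The dithering effect can be checked numerically. In this hypothetical sketch, uniform random noise one quantization step wide stands in for grain; real film grain is spatially structured and is usually applied in a log or display encoding. Averaging over blocks of samples is a rough proxy for how the eye integrates fine grain. Without noise, every value in a block snaps to the same code value, so the block average can be off by up to half a step. With noise, the quantization errors average out.

```python
import random


def quantize(x: float, bits: int) -> float:
    """Clamp to [0, 1] and snap to the nearest code value at the given bit depth."""
    top = (1 << bits) - 1
    return round(min(1.0, max(0.0, x)) * top) / top


def banding_error(bits: int, grain: float, samples=20_000, block=200, seed=7) -> float:
    """Mean absolute error of block averages after quantizing a gentle ramp.

    `grain` is the noise half-width in code-value steps (0 disables it).
    """
    rng = random.Random(seed)  # fixed seed so the comparison is repeatable
    step = 1.0 / ((1 << bits) - 1)
    ramp = [0.2 + 0.02 * i / (samples - 1) for i in range(samples)]
    out = [quantize(x + rng.uniform(-grain, grain) * step, bits) for x in ramp]
    n_blocks = samples // block
    total = 0.0
    for b in range(n_blocks):
        chunk = slice(b * block, (b + 1) * block)
        total += abs(sum(ramp[chunk]) - sum(out[chunk])) / block
    return total / n_blocks


print(banding_error(8, grain=0.0) > banding_error(8, grain=0.5))  # True
```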

Tool roundup: what practitioners are using right now

Panel discussion and subsequent workflow anecdotes highlighted a split ecosystem where different models serve different lanes. Key observations for teams choosing tools:

Nano Banana is gaining traction for image editing and photo-based composites. It preserves backplates well and can insert people, cars, and objects with convincing reflections and lighting if the reference data is strong. Many creatives use it for precision inpainting and plate augmentation.

Seedream is favored for generating novel, creative worlds and stylized outputs. It is less constrained to photorealism and is useful for ideation and synthetic environments when a creative departure is desired.

Ideogram 3 is useful for logo and motif generation and can integrate with design pipelines to build consistent visual systems of fonts, motifs, and identity elements.

Framer AI can quickly turn website mockups into functional skeletons, which is useful for teams building microsites or project pages around a show or technology demo.

Prompting tips and when to train a LoRA

Two practical prompting tips emerged. First, prompts phrased as "Show me..." can steer image-edit models toward specific camera angles or composition variations. Second, longer, carefully boosted prompts (often drafted by a large language model and then refined) can yield more consistent results when pasted into generative image tools.
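The first tip is easy to standardize in a small helper so a team's prompts stay consistent from shot to shot. The function below is a hypothetical sketch: it only builds strings in the "Show me..." pattern, one per requested view, with an optional clause naming what must not change. The wording of that clause is an assumption, not a documented convention of any model.

```python
def show_me_prompts(subject: str, views: list, keep: str = "") -> list:
    """Build one 'Show me...' edit prompt per requested camera view."""
    prompts = []
    for view in views:
        prompt = f"Show me {subject} from {view}."
        if keep:  # optionally name what the edit must preserve
            prompt += f" Keep {keep} unchanged."
        prompts.append(prompt)
    return prompts


for p in show_me_prompts("the same car",
                         ["a low three-quarter angle", "directly overhead"],
                         keep="the lighting and background"):
    print(p)
```

A boosted, LLM-drafted description can be passed in as `subject`; the structure stays fixed while the content varies.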

When should a team invest in LoRA training or fine-tuning? The panel framed LoRA as a consistency tool. Flux Kontext LoRAs require paired input-output examples, typically 20 to 30 image pairs, to lock a transformation to a trigger token. Building that set can be laborious because teams must craft the desired outputs beforehand. The payoff is repeatability at scale: when a production needs consistent stylization across hundreds of frames, a trained LoRA can reduce per-shot prompting variance and make batch processing more reliable.
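Much of that labor is bookkeeping: every input frame needs a matching, hand-made output. A pre-flight check might pair files by name and emit one JSON Lines record per pair, as in the sketch below. The record fields (`input`, `output`, `caption`), the trigger token, and the default 20-to-30 bounds (taken from the panel's rule of thumb rather than any trainer's requirement) are all hypothetical; check the dataset format your Flux Kontext training tool actually expects.

```python
import json
from pathlib import PurePath


def build_pair_manifest(inputs, outputs, trigger, min_pairs=20, max_pairs=30):
    """Match input/output images by file stem and return JSON Lines text.

    Raises ValueError if an input lacks an output or the pair count is out of range.
    """
    by_stem = {PurePath(p).stem: p for p in outputs}
    missing = [p for p in inputs if PurePath(p).stem not in by_stem]
    if missing:
        raise ValueError(f"no matching output for: {missing}")
    if not min_pairs <= len(inputs) <= max_pairs:
        raise ValueError(f"{len(inputs)} pairs; expected {min_pairs} to {max_pairs}")
    records = [{"input": p, "output": by_stem[PurePath(p).stem], "caption": trigger}
               for p in inputs]
    return "\n".join(json.dumps(r) for r in records)


ins = [f"in/shot_{i:02d}.png" for i in range(24)]
outs = [f"out/shot_{i:02d}.png" for i in range(24)]
manifest = build_pair_manifest(ins, outs, trigger="bends_look")  # hypothetical token
print(manifest.splitlines()[0])
```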

Practical takeaways for production teams

Consider Gaussian splats for environment capture when you need compact, view-dependent backplates for LED volumes or phone-based previsualization. They reduce file size and can speed up realtime playback.

Plan for relighting limits. If a scene must accept dramatically different lighting setups, mix splats with polygonal assets or rebuild critical elements for relighting.

Use a staged upscaling pipeline if you intend to finish AI-generated frames in a cinema environment. Denoise, upres, and convert to 16-bit EXR before color grading.

Use grain intentionally to reduce banding and give generative outputs a believable filmic texture.

Reserve LoRA training for projects that require strict, repeatable transformations at scale; use boosted prompts and reference images for smaller batches or one-off treatments.

Where to watch next and how to explore these tools

The convergence of volumetric capture, Gaussian splatting, and AI upscaling is creating practical new workflows for virtual production and post. Teams looking to experiment should pilot on non-critical assets first: scan a single room, ingest a splat into Unreal, and test a small Topaz-based upscaling chain to compare grading latitude. From there, plan tooling choices around the needs of relighting, editorial pickup, and pipeline automation.

Community questions help refine these workflows. Production teams and creatives are encouraged to document specific use cases (pickup schedules, lighting constraints, and delivery formats) so they can evaluate whether splats, hybrid geometry, or a full photogrammetry build is the most efficient approach.

For busy creatives, the key lesson is this: Gaussian splats are not a silver bullet, but they offer a powerful middle ground that is fast, compact, and visually convincing. Paired with targeted upscaling and finishing steps, generative outputs can now enter professional-grade color pipelines without sacrificing control over the final image.