AI is rapidly transforming filmmaking and visual effects, with new tools for video manipulation and 3D generation reshaping post-production and expanding creative possibilities.

This article dives deep into the latest developments in AI for filmmakers, highlighting key innovations such as Runway’s Aleph, Adobe’s new Harmonize feature, Alibaba’s Wan 2.2 open-source model, and much more. These tools are not only transforming how artists work but also opening new avenues for creativity, efficiency, and accessibility in VFX and virtual production.

Runway Aleph: A New Era for Video Manipulation

Runway’s Aleph has been dominating industry conversations and social feeds as a revolutionary AI video tool. Unlike traditional AI models that generate videos from scratch, Aleph allows users to upload existing footage and manipulate it with simple text prompts. This approach aligns more closely with real-world production needs, which revolve around iterations, refinements, and creative changes rather than “set it and forget it” outputs.

Aleph excels in workflows traditionally dominated by green screen and complex VFX compositing. It supports relighting scenes, adding or removing objects (cars, people), changing weather conditions, and even altering clothing or hairstyles—all within existing video clips. In one demo, a woman walking in a scene has her hair, glasses, and outfit changed seamlessly, showcasing the tool’s versatility.

One of the most impressive aspects is that Aleph does not require green screen backgrounds. For example, in one demo, real stock footage of a boat was jokingly presented as a green-screen shot, although the original footage was untouched. This flexibility hints at Aleph’s potential to disrupt conventional VFX pipelines.

Technical Limitations and Industry Impact

Currently, Aleph outputs videos capped at 5 seconds in 1080p resolution with some limitations on color space and color science fidelity. This means professional VFX artists may find it insufficient for high-end productions demanding EXR files or 16-bit color precision. However, these constraints are expected to improve rapidly as the technology matures.
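
To make the bit-depth concern concrete, the sketch below compares how many code values per channel are available at 8-bit and 16-bit precision and how much rounding error each introduces. It is illustrative arithmetic only: integer encoding is assumed for simplicity (EXR files commonly store floating-point values instead), the function names are hypothetical, and nothing here describes Aleph's actual output format.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct code values one channel can hold at this bit depth."""
    return 2 ** bits


def quantize(value: float, bits: int) -> float:
    """Round a normalized value in [0, 1] to the nearest representable code value."""
    max_code = levels_per_channel(bits) - 1
    return round(value * max_code) / max_code


def quantization_error(value: float, bits: int) -> float:
    """Absolute rounding error introduced by storing `value` at this bit depth."""
    return abs(quantize(value, bits) - value)


if __name__ == "__main__":
    for bits in (8, 16):
        print(bits, levels_per_channel(bits), quantization_error(0.5, bits))
```

The coarser 8-bit steps are what tend to show up as banding once a shot is graded heavily, which is why high-end pipelines ask for more precision.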

The question of job displacement inevitably arises. While Aleph and similar tools will automate and speed up many traditional tasks, experts agree that gaps will remain—especially regarding continuity across shots, fine-tuning details, and maintaining artistic control. For example, replacing a shirt consistently across multiple shots or maintaining character continuity still presents challenges.

VFX artists are encouraged not to dismiss these tools but to embrace them early. Becoming adept at prompting and integrating AI outputs with traditional compositing software like Nuke could save significant time and open new creative possibilities. As Aleph evolves, it’s predicted that productions worldwide will adopt it incrementally, pushing the boundaries of what AI-assisted filmmaking can achieve.

Working Across Multiple AI Models for Consistency

One of the emerging challenges in AI filmmaking is managing different models to achieve consistent results. Since each AI model has unique strengths and weaknesses, creators often experiment with various tools at the shot level. However, shifting between models can cause visual inconsistencies, such as differing film stock looks or texture variations.

For longer projects, a strategic approach involves selecting a primary model for base work and then using secondary models for style transfer or animation while ensuring scene continuity. This workflow mirrors traditional filmmaking’s attention to consistency in look, feel, and texture across scenes and shots.

Staying organized and developing expertise in which AI tools suit specific tasks will be a critical skill for filmmakers navigating this evolving landscape.

Luma AI’s Modify with Instructions: Natural Language Video Editing

Luma AI recently upgraded its video editing capabilities with “Modify with Instructions,” a feature that allows users to upload videos and edit them using conversational commands. This is similar in spirit to Runway Aleph but adds its own nuances.

For instance, Luma can remove objects like fire hydrants from a scene or replace backgrounds with ease. One notable demonstration showed a fire hydrant being removed from a shot in which Jon Finger was sitting on it, showcasing the dramatic time savings over traditional VFX methods.

Luma’s approach highlights a broader industry shift where tasks that once took days or weeks of manual rotoscoping and compositing can now be accomplished with a simple text prompt. While some concerns exist about facial distortions or slight quality issues, the overall impact is transformative.

Competition between tools like Luma and Aleph is beneficial, driving rapid improvements and offering filmmakers diverse options depending on their project needs.

Moonvalley, although less widely known, offers features that overlap with Aleph and Luma, such as video style transfer, pose transfer, and camera angle shifting. Its ability to alter perspectives within a video opens exciting possibilities for creative storytelling and virtual production.

While still emerging, Moonvalley’s tools contribute to the growing ecosystem of AI-powered solutions for filmmakers looking to enhance their footage with minimal manual effort.

Alibaba’s Wan 2.2: Open Source Video Generation at Your Fingertips

Wan 2.2, released by Alibaba, is a sleeper hit in the open-source AI video generation space. It offers realism on the level of Google’s Veo 3 and can be run on powerful local computers for free, making it accessible to a broad range of users.

Wan 2.2 includes three models: a text-to-video model with 14 billion parameters, an image-to-video model also with 14 billion parameters, and a unified video generation model with 5 billion parameters optimized for local use. Although generating a 5-second 720p clip can take around 44 minutes on a high-end GPU, this capability is remarkable for an open-source offering.
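
As a rough planning aid, the figure quoted above (about 44 minutes per 5-second 720p clip) can be scaled up to longer sequences. The sketch below assumes footage is generated in fixed 5-second clips and that time scales linearly with the number of clips; both are simplifying assumptions, and the function name is hypothetical.

```python
import math


def estimated_minutes(target_seconds: float,
                      minutes_per_clip: float = 44.0,
                      clip_seconds: float = 5.0) -> float:
    """Estimate generation time for `target_seconds` of footage.

    Assumes whole clips of `clip_seconds` each, generated one after another,
    with every clip costing `minutes_per_clip` (figures taken from the text).
    """
    clips_needed = math.ceil(target_seconds / clip_seconds)
    return clips_needed * minutes_per_clip


if __name__ == "__main__":
    # One minute of footage is twelve 5-second clips.
    print(estimated_minutes(60))
```

Under these assumptions, a single minute of footage takes close to nine hours of GPU time, which is why local generation currently suits individual shots better than whole sequences.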

The model architecture divides denoising into “high noise” and “low noise” stages, where early steps involve creative generation and later steps focus on refinement and detail. This “mixture of experts” design improves output quality and efficiency, signaling a trend toward more modular and specialized AI systems.
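
The two-stage idea can be sketched as a simple routing rule: each denoising step is handed to one of two experts depending on how noisy the sample still is. This is a toy illustration, not Wan 2.2's code; the linear noise schedule, the 0.5 boundary, and all names are assumptions made for the example.

```python
from typing import List, Tuple


def noise_schedule(steps: int) -> List[float]:
    """Noise levels decreasing linearly from 1.0 toward 0 (assumed schedule)."""
    return [1.0 - i / steps for i in range(steps)]


def pick_expert(noise_level: float, boundary: float = 0.5) -> str:
    """Route noisy early steps to one expert and cleaner late steps to the other."""
    return "high_noise" if noise_level >= boundary else "low_noise"


def route_steps(steps: int, boundary: float = 0.5) -> List[Tuple[float, str]]:
    """Pair every step's noise level with the expert that would handle it."""
    return [(level, pick_expert(level, boundary)) for level in noise_schedule(steps)]


if __name__ == "__main__":
    for level, expert in route_steps(4):
        print(f"noise={level:.2f} -> {expert}")
```

Only one expert runs at any given step, so the model can hold more total capacity without raising the cost of each step.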

Wan 2.2’s cinematic understanding—covering lighting, camera movement, and composition—makes it especially relevant for filmmakers and VFX professionals seeking open-source alternatives to commercial tools.

Ideogram’s One-Shot Character Generator

Ideogram AI, known for its superior text-to-image capabilities, has introduced a new feature called Ideogram Character. This tool generates realistic characters from a single uploaded image, integrating them into scenes with impressive fidelity.

While most demos focus on portrait-style, front-facing images, early tests suggest strong results even in more complex scenarios involving profile views or occluded faces. This one-shot generation capability simplifies character creation for filmmakers and digital artists, streamlining workflows that previously required multiple images or complex rigging.

Adobe Photoshop’s Harmonize: One-Click Scene Integration

Adobe has re-entered the AI space with an outstanding new Photoshop beta feature called Harmonize. This tool automatically blends cut-out objects into scenes, matching lighting, shadows, color, and texture to create seamless composites.

For example, placing a coffee cup on a table and hitting Harmonize results in a natural-looking integration that adjusts for the scene’s perspective and lighting conditions. This feature is invaluable for concept artists, previs creators, and product photographers who require fast, realistic composites without manual adjustments.

Harmonize is powered by Adobe Firefly’s in-house AI model, ensuring commercial safety and integration within Adobe’s ecosystem. While Firefly itself is evolving, Harmonize represents a user-friendly AI tool that hides complexity under the hood, making AI accessible to mainstream Photoshop users.

This feature’s impact is comparable to that of Photoshop’s historic Liquify tool, which transformed image manipulation when it was introduced. Harmonize promises a similar revolution in compositing and product visualization.

Generative Upscale: Enhancing Resolution with AI

Adobe has also introduced a generative upscale beta, which uses AI to enhance image resolution beyond traditional interpolation methods. Unlike classic upscaling that mathematically fills in pixels, generative upscale leverages AI to reconstruct details, reduce noise, and improve clarity.
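
For contrast, here is what classic interpolation looks like in practice. The sketch below is a plain bilinear upscale of a small grayscale grid: every new pixel is a weighted average of its nearest source pixels, so no detail is created that was not already implied by the input. It is a minimal pure-Python illustration and has nothing to do with Adobe's implementation.

```python
from typing import List

Image = List[List[float]]


def bilinear_upscale(img: Image, factor: int) -> Image:
    """Upscale a grayscale image by an integer factor using bilinear interpolation."""
    h, w = len(img), len(img[0])
    out_h, out_w = h * factor, w * factor
    out: Image = []
    for y in range(out_h):
        # Map the output row back to a fractional source row.
        sy = y * (h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = x * (w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two neighboring rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    for row in bilinear_upscale([[0, 100], [100, 200]], 2):
        print([round(v, 1) for v in row])
```

Because each output value is an average, results always stay within the range of the source pixels. A generative upscaler instead predicts plausible new detail, which is both its strength and the source of its artifacts.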

However, this process can sometimes lead to overly smooth or “plasticky” results, especially with archival footage. The presence of some grain or noise is essential to maintain a natural and realistic look, much like how filmmakers reintroduce film grain for texture in digital images.

This balance between clarity and authenticity is critical in post-production. It echoes industry practices such as Netflix’s encoding approach, which removes film grain before compression and synthesizes it back on the viewer’s device during playback.
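
Reintroducing grain can be as simple as adding a small amount of random noise to each pixel. The sketch below does this for an 8-bit grayscale grid using Gaussian noise; real grain tools model film response far more carefully, and the function name, the `strength` parameter, and the Gaussian choice are assumptions for illustration.

```python
import random
from typing import List

Image = List[List[int]]


def add_grain(img: Image, strength: float, seed: int = 0) -> Image:
    """Add zero-mean Gaussian noise to each pixel and clamp to the 0-255 range.

    `strength` is the noise standard deviation in code values; a fixed seed
    keeps the result repeatable from run to run.
    """
    rng = random.Random(seed)
    return [
        [min(255, max(0, round(pixel + rng.gauss(0.0, strength)))) for pixel in row]
        for row in img
    ]


if __name__ == "__main__":
    flat = [[128] * 4 for _ in range(4)]  # a perfectly smooth, "plasticky" patch
    for row in add_grain(flat, strength=5.0, seed=1):
        print(row)
```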

New High Frame Rate Cameras: ARRI ALEXA 35 Xtreme and More

On the hardware side, ARRI recently unveiled the ALEXA 35 Xtreme, capable of shooting 4K at 240 frames per second. This high-speed capability rivals expensive Phantom cameras, historically used for scientific and cinematic slow-motion capture.

Such advancements provide filmmakers with incredible image quality and frame rates, offering a strong counterpoint to AI-generated content. Shooting real, high-quality footage remains essential, especially as imaging quality forms the foundation for AI processing and VFX work.

Hunyuan 3D World Model: AI-Powered 3D Environment Generation

Tencent’s Hunyuan 3D World Model 1.0 introduces a new frontier in AI-driven 3D space creation. Users can generate immersive 3D panoramas and environments from text or images, complete with meshes and textures exportable to Unreal Engine or Blender.

While still early-stage and primarily suited for virtual production or previs, this tool offers exciting potential for quickly creating consistent and explorable 3D backdrops. However, it’s unlikely to replace meticulously designed game maps or high-end 3D assets, which require precise control over geometry and optimization.

Virtual Production Meets AI: The Future of LED Volumes?

There’s ongoing debate about how AI tools like Aleph will interact with virtual production techniques, particularly LED volumes. Currently, Aleph’s 5-second clip limit and resolution constraints mean it cannot fully replace LED volume workflows, which provide real-time, high-resolution backgrounds actors can interact with on set.

However, hybrid approaches may emerge, combining simpler LED or projector setups with AI-driven background enhancements. This could reduce pre-production costs while maintaining some in-camera visualization benefits, offering a middle ground between green screen and full LED volume setups.

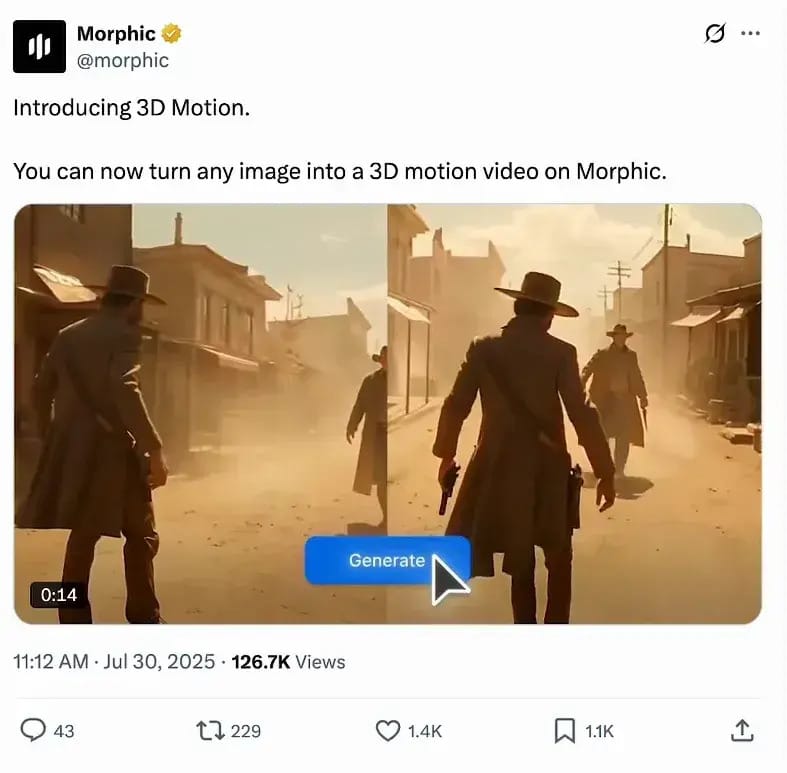

Morphic 3D Motion: Adding Depth and Movement to Images

Morphic’s new 3D motion tool builds on AI’s ability to generate and manipulate 3D spaces from single images. By extrapolating depth maps and generating 3D models, users can add camera movements and animations that bring static images to life.
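
The reason a depth map is enough to fake camera movement can be shown with a standard pinhole camera model: each pixel is lifted into 3D using its depth, the virtual camera is moved, and the point is projected back. The sketch below is a generic illustration under that assumption, not Morphic's method, and all names and parameter values are hypothetical.

```python
from typing import Tuple

Point3D = Tuple[float, float, float]


def unproject(u: float, v: float, depth: float,
              focal: float, cx: float, cy: float) -> Point3D:
    """Lift pixel (u, v) with a known depth into camera-space 3D coordinates."""
    return ((u - cx) * depth / focal, (v - cy) * depth / focal, depth)


def project(point: Point3D, focal: float, cx: float, cy: float,
            cam_x: float = 0.0) -> Tuple[float, float]:
    """Project a 3D point back to pixels for a camera shifted sideways by cam_x."""
    x, y, z = point
    return (focal * (x - cam_x) / z + cx, focal * y / z + cy)


if __name__ == "__main__":
    focal, cx, cy = 100.0, 50.0, 50.0
    near = unproject(50, 50, depth=1.0, focal=focal, cx=cx, cy=cy)
    far = unproject(50, 50, depth=10.0, focal=focal, cx=cx, cy=cy)
    # Move the virtual camera 0.1 units to the right and compare pixel shifts.
    print(project(near, focal, cx, cy, cam_x=0.1))
    print(project(far, focal, cx, cy, cam_x=0.1))
```

Nearby points shift much further across the frame than distant ones. That difference is the parallax that makes a flat image read as a 3D scene once the camera moves.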

This tool shares similarities with Moonvalley’s pose and motion transfer features and represents a growing trend toward AI-enhanced creative control over 2D and 3D content.

Scenario’s Text-to-3D Generation in Parts: A Game-Changer for CG Production

Scenario has introduced a breakthrough AI model called PartCrafter that intelligently splits generated 3D objects into modular parts with clean geometry and structure. Unlike previous text-to-3D tools that output inseparable meshes, Scenario’s model creates separate, editable components, enabling easier retopology, rigging, and modification.

This development is particularly significant for industrial applications and CG production workflows requiring CAD-level precision and modularity. For example, a Lego character generated by Scenario can be exploded into individual pieces, each accurately modeled and usable for further artistic or technical work.
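
An exploded view like the one described above is only possible because the model arrives as separate parts. As a minimal sketch, assuming each part is just a named list of 3D vertices (a hypothetical representation, not Scenario's output format), every part can be pushed away from the assembly's center along the line through its own centroid:

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def centroid(points: List[Point]) -> Point:
    """Average position of a list of 3D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def explode(parts: Dict[str, List[Point]], factor: float) -> Dict[str, List[Point]]:
    """Move each part away from the assembly center by `factor` times its offset."""
    center = centroid([p for pts in parts.values() for p in pts])
    exploded = {}
    for name, pts in parts.items():
        part_center = centroid(pts)
        offset = tuple((c - m) * factor for c, m in zip(part_center, center))
        exploded[name] = [tuple(a + o for a, o in zip(p, offset)) for p in pts]
    return exploded


if __name__ == "__main__":
    figure = {
        "head": [(0, 0, 2), (0, 0, 4)],
        "legs": [(0, 0, -2), (0, 0, -4)],
    }
    print(explode(figure, factor=1.0))
```

With a single fused mesh there is no per-part centroid to work from, so the same operation would first require manual segmentation.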

Google NotebookLM: AI-Powered Learning and Content Summarization

Google’s NotebookLM is a versatile AI tool designed to help users learn and organize information efficiently. By ingesting documents, videos, and recordings, it creates an interactive notebook capable of answering questions and generating mind maps, lesson plans, and even AI-generated podcast-style episodes that summarize complex topics.

The latest update adds video generation, enabling users to produce video presentations resembling PowerPoint slideshows that explain notebook content. Though rudimentary now, this feature hints at future integrations with advanced video models like Veo 3, potentially automating educational content creation.

Shotbuddy: Streamlining AI Video Version Control

As AI generates vast quantities of images and videos, managing versions and iterations becomes a logistical challenge. Shotbuddy, an open-source tool developed by Albert Bozesan, addresses this by organizing AI-generated assets locally with shot IDs, version folders, and automatic file renaming.

Targeted specifically at AI filmmaking workflows, Shotbuddy helps creators maintain clarity and order amidst the chaos of multiple versions, making it easier to track progress and collaborate effectively.
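
The kind of bookkeeping involved can be sketched in a few lines. The example below picks the next version name for a shot from a list of existing file names. The `SH010_v001.mp4` naming pattern and the function name are hypothetical, chosen only to illustrate shot IDs plus version numbers; they are not Shotbuddy's actual scheme.

```python
import re
from typing import List


def next_version_name(existing: List[str], shot_id: str, suffix: str) -> str:
    """Return the next free versioned file name for `shot_id`.

    Looks for names like '<shot_id>_v<number>' in `existing` and increments
    the highest version found, starting at v001 when there are none.
    """
    pattern = re.compile(rf"^{re.escape(shot_id)}_v(\d+)")
    versions = []
    for name in existing:
        match = pattern.match(name)
        if match:
            versions.append(int(match.group(1)))
    return f"{shot_id}_v{max(versions, default=0) + 1:03d}{suffix}"


if __name__ == "__main__":
    files = ["SH010_v001.mp4", "SH010_v002.mp4", "SH020_v001.mp4"]
    print(next_version_name(files, "SH010", ".mp4"))
```

Renaming each raw generation to a name like this as soon as it is downloaded keeps dozens of near-identical outputs traceable to a shot and an iteration.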

Conclusion: Embracing the AI Revolution in Filmmaking

The rapid flood of AI tools like Runway Aleph, Luma AI, Wan 2.2, and Adobe Harmonize signals a seismic shift in how filmmakers and VFX artists approach content creation. While technical limitations and quality concerns remain, these technologies enable creative professionals to work faster, iterate more freely, and realize visions previously constrained by time and budget.

Adapting to this new landscape requires openness to experimentation, mastering prompting techniques, and integrating AI outputs with traditional workflows. The future belongs to those who embrace these tools early, blending human creativity with AI efficiency to push the boundaries of storytelling and visual artistry.

As AI continues to evolve, the filmmaking community stands on the cusp of a creative renaissance—one where imagination is the only limit.